In Part 1, we discussed why you should get started with Git as a Network Engineer and how to make your first commit. In Part 2, we will discuss how to get started with a Git server. Our example uses GitHub, as it is a free option. Almost all of the same concepts apply to other Git servers as well, such as GitLab and Gitea. Keep in mind, though, that GitHub repositories are public by default, so anyone on the internet can view them. Be extremely careful what you put in them. Never store sensitive information in a public repository on any Git server. Avoid saving it in private repositories as well whenever possible, since these systems are exposed to the internet and new vulnerabilities that could be exploited to steal sensitive information are discovered regularly. With that out of the way, on to using GitHub.

GitHub Account

For this blog we will assume you have an active GitHub account. If not, you will need to sign up for one. Make sure to enable multi-factor authentication (MFA) to make your account more resistant to compromise. Once you are logged into GitHub, we will create a new repository.

Create a Repository

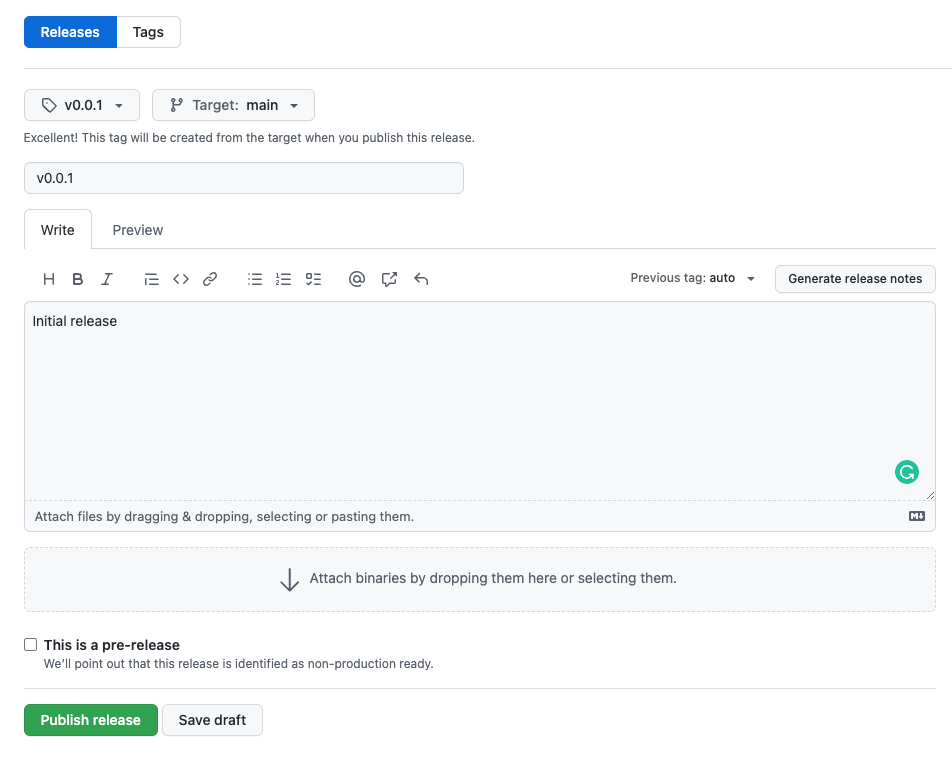

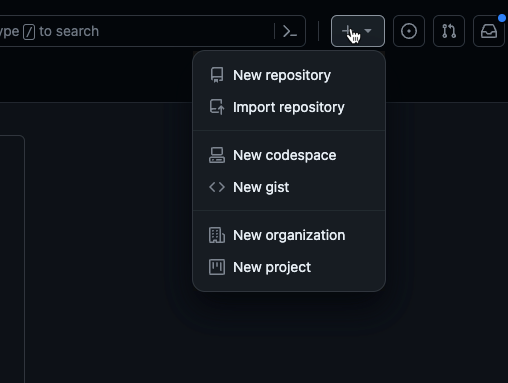

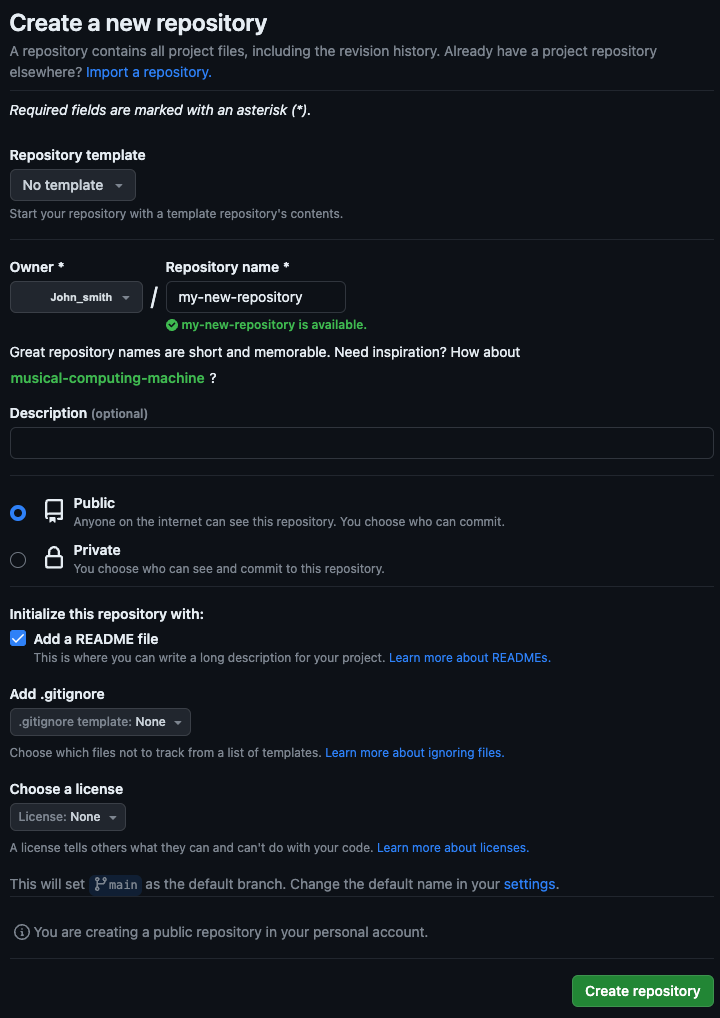

Select the plus sign near the top of the page and choose New repository. GitHub will then prompt you for a repository name, description, etc.

The repository name needs to be unique within your account; the description is optional. Select whether you’d like the repository to be public or private. If you were creating a brand-new repository for a project that hasn’t been started yet, it is simplest to have GitHub create at least the README.md file so you can clone the repo right away. In our case, since we have an existing Git repository on our local machine, we will not create a README.md, .gitignore, or license file. Click Create repository.

When you don’t create the initial files during the repository creation process, GitHub will provide you a couple of options to get existing code into the repository. We will use the second option, since we already started the Git repo locally. You will notice the URL contains your username and the repository name. This pattern is standard on GitHub, which makes project URLs predictable and easy to find.

Create a New Local Repository and Link to GitHub

Back on your local system, we will create a README.md file by running the echo command with some text and using >> to redirect (append) that output into the README file. Then we will initialize our working directory as a Git repository using the git init command, stage the README.md file with git add, and commit it to the Git history with the git commit command. Normally you would not need the -M parameter with the git branch command, but the git CLI has historically named the default branch master, while GitHub names the default branch main unless you change it, so git branch -M main forces a rename of the current local branch to main. We then add a “remote” to our repository, which is just the URL of the repository on GitHub (or an equivalent Git server). Lastly, we push our changes to the remote using git push -u origin main. Here origin is the name of the remote that we created in the git remote add step, and main is the name of the branch we are pushing. The -u parameter sets origin/main as the upstream of the local branch, so later git push and git pull commands on this branch know which remote and branch to use without extra arguments.

echo "# blog" >> README.md

git init

git add README.md

git commit -m "first commit"

git branch -M main

git remote add origin https://github.com/zach/blog.git

git push -u origin main

Add an Existing Repository to GitHub

GitHub will also provide these commands to you if you create an empty repository, and they will be customized to your specific user/repository.

git remote add origin https://github.com/zach/blog.git

git branch -M main

git push -u origin main

Enumerating objects: 9, done.

Counting objects: 100% (9/9), done.

Delta compression using up to 10 threads

Compressing objects: 100% (4/4), done.

Writing objects: 100% (9/9), 2.97 KiB | 1012.00 KiB/s, done.

Total 9 (delta 2), reused 0 (delta 0), pack-reused 0

remote: Resolving deltas: 100% (2/2), done.

To https://github.com/zach/blog.git

* [new branch] main -> main

branch 'main' set up to track 'origin/main'.

We now have the concept of a remote: a named link to a remote Git server that hosts our code/files. You can actually connect a repository to multiple remotes to push code to multiple places, but that is beyond the scope of this blog. origin is the name of the remote and is just the standard convention for the main remote of a repository. It can be any name you want, though; you could call it github if that’s easier to remember. If you look back in GitHub now, you should see test.txt and file2.txt in the repository online.
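To experiment with remotes without touching GitHub, the following is a minimal sketch that uses a local bare repository as a stand-in for the server. The temporary directory, the "Demo User" identity, and the second remote name github are assumptions made for illustration only.

```shell
# Scratch demo: a local bare repository plays the role of GitHub (assumption).
set -e
work="$(mktemp -d)"
git init --quiet --bare "$work/server.git"

git init --quiet "$work/blog"
cd "$work/blog"
git config user.name "Demo User"           # placeholder identity for this scratch repo
git config user.email "demo@example.com"
echo "# blog" > README.md
git add README.md
git commit --quiet -m "first commit"
git branch -M main

# Register the same server under the conventional name and under a custom name.
git remote add origin "$work/server.git"
git remote add github "$work/server.git"

git remote -v                               # lists each remote with its fetch and push URL
git push --quiet -u origin main             # -u records origin/main as the upstream
git rev-parse --abbrev-ref "main@{upstream}"   # prints origin/main
```

Both remote names point at the same place here; with a real second server you would give each remote its own URL.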

Clone a Repository

Say you want to change computers and need to go back and get your code from GitHub, or you deleted the code from your computer. You would accomplish this through a process called “cloning” the repository. If you visit your repository on GitHub in a web browser, there will be a green button called Code near the top of the screen. Clicking this will open a drop-down menu with some options. You can clone a repository via HTTPS, SSH, or GitHub CLI. For now, we will use HTTPS, which is the easiest way. Using SSH involves setting up SSH keys and is beyond the scope of this document, but as you start working with private repos or would like verified commits, you will want to configure SSH keys. Cloning also works with public projects or other repositories you have access to. It’s basically just the act of pulling all the code from GitHub (or another Git server) to your local machine. By default, a clone downloads the full history and all branches, and checks out only the default branch (usually main or master) into your working directory.
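As a rough sketch of what a fresh clone contains, the example below clones from a local bare repository instead of an HTTPS URL. The paths, the "Demo User" identity, and the extra branch named feature are assumptions for illustration; with GitHub you would pass the URL from the Code button to git clone instead.

```shell
# Scratch demo: build a small repository, publish it to a local bare repo, clone it.
set -e
work="$(mktemp -d)"

git init --quiet "$work/src"
cd "$work/src"
git config user.name "Demo User"           # placeholder identity for this scratch repo
git config user.email "demo@example.com"
echo "# blog" > README.md
git add README.md
git commit --quiet -m "first commit"
git branch -M main
git branch feature                          # second branch pointing at the same commit

# A bare clone stands in for the hosted repository.
git clone --quiet --bare "$work/src" "$work/server.git"

# Clone it the same way you would clone a URL from GitHub.
git clone --quiet "$work/server.git" "$work/copy"
cd "$work/copy"
git branch --all                            # local main plus remote-tracking branches
git log --oneline                           # the commit history is available locally
```

Only main is checked out as a local branch, while the other branches appear under remotes/origin and can be checked out when needed.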

Make Some Changes

We’ll create a new file in the main branch called file3.txt and commit that so we have something to work with when we go to push changes. We use the command touch on Linux/Unix systems to create a blank file, then edit the file using vim (you can also use nano/pico/etc). Then, we add ALL changes to be staged for commit by using the git add -A command. You can also use git add ., which in Git 2.x stages new, modified, and deleted files under the current directory (older 1.x releases did not stage deletions this way), so it behaves like git add -A when run from the top of the repository. There is also git add -u, which stages modifications and deletions of tracked files, but not new files. You can also add individual files or directories by specifying them after git add. For example, to include just this new file you would run git add file3.txt. The same goes for directories: list the directory in the command to stage the directory and everything inside it. The most common action is git add -A, since you will usually want to commit all your changes at once. We then commit those changes to the Git history using the git commit command, and give it an explanation of what we changed using the -m parameter.
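The staging options can be compared side by side in a throwaway repository. This is a sketch under assumptions: the file names tracked.txt and old.txt and the "Demo User" identity are made up for the demo, and git status --short is used only to show what each command staged.

```shell
# Scratch demo comparing git add -u, git add <path>, and git add -A.
set -e
work="$(mktemp -d)"
git init --quiet "$work/demo"
cd "$work/demo"
git config user.name "Demo User"           # placeholder identity for this scratch repo
git config user.email "demo@example.com"
echo "one" > tracked.txt
echo "two" > old.txt
git add -A
git commit --quiet -m "baseline"

# Create three kinds of change: a modification, a deletion, and a new file.
echo "edit" >> tracked.txt
rm old.txt
touch file3.txt

git add -u                 # tracked files only: the modification and the deletion
git status --short         # file3.txt is still shown as untracked (??)

git add file3.txt          # stage one specific path
git status --short

git reset --quiet          # unstage everything so the next command starts clean
git add -A                 # stages all three changes in one step
git status --short
```

Running git status --short between steps is a cheap way to confirm what will go into the next commit.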

$ touch file3.txt

$ vim file3.txt

$ git add -A

$ git commit -m "add file3.txt"

[main 24c3f53] add file3.txt

1 file changed, 1 insertion(+)

create mode 100644 file3.txt

Now we will discuss how to get these changes back to GitHub for permanent storage and sharing.

Private Repositories and GitHub Authentication

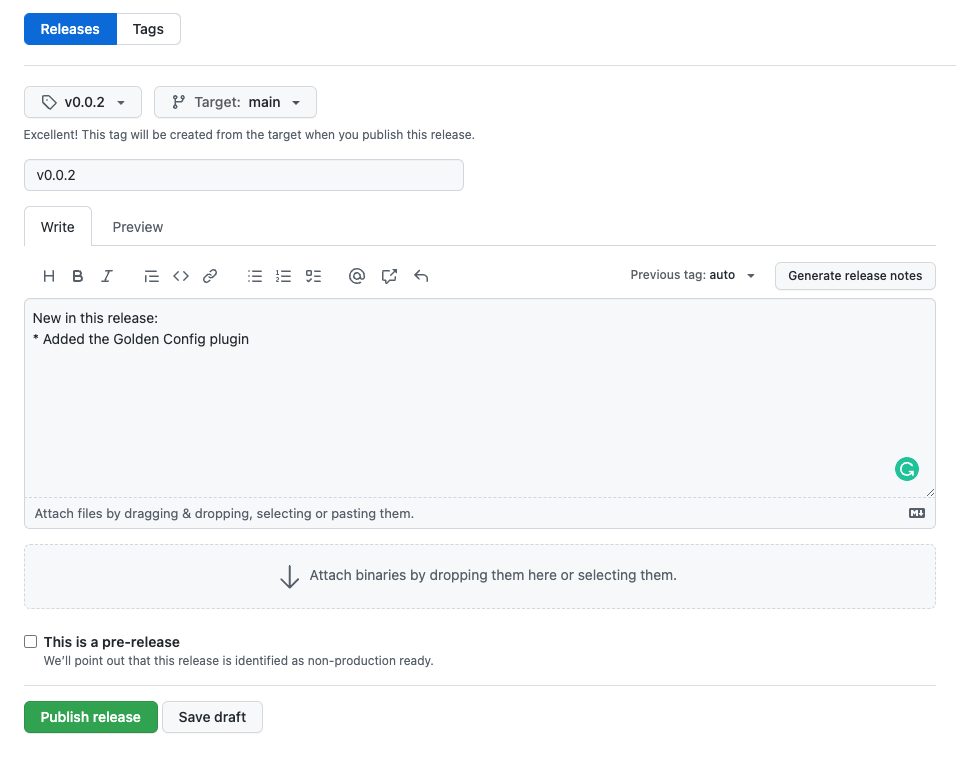

We need to address one thing prior to sending our changes to GitHub. As of August 13, 2021, GitHub no longer accepts account passwords for Git operations over HTTPS and requires token-based authentication instead (GitHub’s announcement has more information). This means that in order to make changes to a repository, you must authenticate using a Personal Access Token (PAT) or an SSH key.

Now we’ll briefly discuss private repositories when working with GitHub. Private repositories should not be treated as 100% secure. Although they are restricted to credentialed users, this doesn’t prevent data leakage if the GitHub servers are compromised. There are a couple of different ways to work with private repositories with GitHub. The simplest is to generate a Personal Access Token that will be used to authenticate when cloning/pushing over HTTPS. Go into your GitHub profile, then settings, and scroll down to developer settings. Once there, you can create a token. You will want to adjust the permissions according to what you will need to do with the token. Be sure to always use least-privilege access when setting the permissions, and use separate tokens for different services. For example, if you are going to place your repository onto a server that needs only clone/pull access, don’t give that token write access, since it doesn’t need it. Save this token in a password manager.

Once you have your token, you’ll be able to clone private repositories using your username and the token. You can test this by creating a private repository on GitHub and cloning it. When you run git clone <repository_url>, you will be prompted to enter your GitHub username and a password; enter your Personal Access Token (PAT) as the password. Your account password will not work here, whether or not MFA is enabled, but the PAT will.

Push a Repository

Once we have changes that we want to send up to GitHub, we need to upload them. As you might expect, Git has a command called push, which uploads the commits we made locally to a remote Git server (in our case GitHub). If we run git push, we will see what happens.

$ git push

Enumerating objects: 4, done.

Counting objects: 100% (4/4), done.

Delta compression using up to 10 threads

Compressing objects: 100% (2/2), done.

Writing objects: 100% (3/3), 1.48 KiB | 1.48 MiB/s, done.

Total 3 (delta 0), reused 0 (delta 0), pack-reused 0

To https://github.com/zach/blog.git

9c643a1..22e3ce9 main -> main

Now our changes are in GitHub, and others can see our changes. You can view these changes by viewing the repository in a web browser. You will also notice that you can see information from the most recent commits and who made changes.
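If you would like to check what a push is going to send before running it, the sketch below shows one way to do so. It again assumes a local bare repository in place of GitHub and a placeholder "Demo User" identity; the same two inspection commands should work against a real origin once an upstream is set.

```shell
# Scratch demo: inspect unpushed commits, push, then confirm nothing is left.
set -e
work="$(mktemp -d)"
git init --quiet --bare "$work/server.git"
git init --quiet "$work/blog"
cd "$work/blog"
git config user.name "Demo User"           # placeholder identity for this scratch repo
git config user.email "demo@example.com"
echo "# blog" > README.md
git add README.md
git commit --quiet -m "first commit"
git branch -M main
git remote add origin "$work/server.git"
git push --quiet -u origin main

# Make one more local commit that the remote does not have yet.
touch file3.txt
git add -A
git commit --quiet -m "add file3.txt"

git status --short --branch                 # branch line reports "ahead 1"
git log --oneline origin/main..main         # commits that a push would upload

git push --quiet
git log --oneline origin/main..main         # prints nothing once the remote is up to date
```

The origin/main..main range reads as "commits on main that origin/main does not have", which is why it is empty after a successful push.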

Conclusion

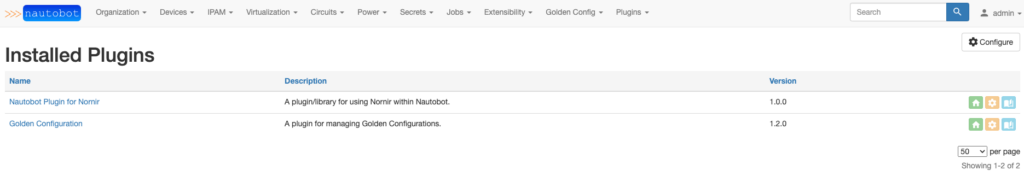

In this part of our blog series, we discussed getting started using GitHub as a Git server, how to clone/push/pull repositories, and how to share your code changes with others. In the next part, we will discuss Git branches and how to do merges and pull requests. See you in the next one!

-Zach
