In the previous blog post in the series, I discussed how to use Helm charts to deploy Nautobot to Kubernetes. Deploying Nautobot manually using Helm charts is a great first step. But if we want to move towards the GitOps paradigm, we should go a step further. In this blog post, I will show you how our managed services team manages Nautobot deployments using the GitOps approach.

Introduction to GitOps

What is GitOps? The idea is that the infrastructure is defined declaratively, meaning all infrastructure parameters are defined in Git. Then all infrastructure is deployed from a repository, serving as a single source of truth. Usually, the main branch defines the production infrastructure, but there can also be a version-tagged commit that deploys a specific version of an infrastructure. For every change, the infrastructure developer has to create a branch, push changes and open a pull request. This process allows for peer code reviews and CI testing to catch any problems early on. After the pull request is reviewed, the changes are merged to the main branch. The infrastructure defined in the main branch deploys the production environment automatically.

To deploy an application to Kubernetes, we need to define Kubernetes objects. Kubernetes allows us to define these objects in YAML files. Because infrastructure is defined in files, we can put these files under version control and use the GitOps paradigm to manage infrastructure.

We have YAML files on one side and a Kubernetes cluster on the other. To bridge the gap, we need a tool that automatically detects changes in a tracked branch and deploys them to the infrastructure. We could develop a custom script, but instead we use an existing tool, Flux, which is intended to do precisely that. We chose Flux because of its ease of use and because it does the heavy lifting for us.

Flux 101

Flux is a tool that, among other things, tracks a Git repository to ensure that a Kubernetes cluster’s state matches the YAML definitions in a specific tag or branch. Flux will revert any accidental change that does not match the repository. Everything is therefore described declaratively in a Git repository that serves as the source of truth for your infrastructure.

Flux also provides tooling for deploying Helm charts with specified values via additional Kubernetes objects defined with YAML in the same Git repository.

You can find more details on the Flux webpage.

Installing Flux to the Cluster

Before you start with Flux, you must install the Flux CLI tool. The installation is out of scope for this blog post, but it should be easy to install by following the official documentation.

After installing the CLI tool, you must bootstrap a cluster, which is done with the flux bootstrap command. This command installs Flux on a Kubernetes cluster. You need a Git repository to be used by Flux, as Flux pushes its configuration to a specified repository. Also, ensure you have credentials to authenticate to your Git repository.

At this point, I have to warn you. If you have multiple Kubernetes clusters in your kubeconfig file, ensure the current context is set to the cluster you want to configure. Check the current context with the kubectl config current-context command; otherwise, you could break an existing cluster by overriding its Flux configuration.

➜ ~ kubectl config current-context

minikube
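
One way to make this check harder to skip is to wrap it in a small guard that runs before flux bootstrap. The following is a minimal sketch and not part of Flux or kubectl: the function name require_context is hypothetical, and minikube is simply the context name used in this post.

```shell
# Hypothetical helper (not part of kubectl or Flux): succeed only when the
# current context matches the one we expect to bootstrap.
require_context() {
  expected="$1"   # context you intend to configure, e.g. "minikube"
  current="$2"    # context reported by kubectl
  if [ "$current" != "$expected" ]; then
    echo "Refusing to continue: current context is '$current', expected '$expected'" >&2
    return 1
  fi
  echo "Context OK: $current"
}

# Typical use against a real cluster (commented out so the sketch has no
# side effects when sourced):
#   require_context minikube "$(kubectl config current-context)" || exit 1
```

Passing the current context in as an argument keeps the function easy to try out without a cluster; in practice you would feed it the output of kubectl config current-context as shown in the comment.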

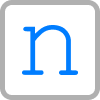

I created an empty repository for this blog post containing only the README.md file.

.
└── nautobot-kubernetes
    └── README.md

1 directory, 1 file

I will now go ahead and bootstrap Flux. There are a couple of parameters that you need to pass as inputs to the command:

- The URL of the Git repository, which can be HTTPS or SSH

- Credentials if using HTTPS

- The branch name

- The path in the repository to store Flux Kubernetes objects

➜ ~ flux bootstrap git \
  --url=https://github.com/networktocode/nautobot-kubernetes \
  --username=ubajze \
  --password=<TOKEN> \
  --token-auth=true \
  --branch=main \
  --path=clusters/minikube

► cloning branch "main" from Git repository "https://github.com/networktocode/nautobot-kubernetes"

✔ cloned repository

► generating component manifests

✔ generated component manifests

✔ committed sync manifests to "main" ("61d425fc2aa2ca9ca973cc15c244bb94741cf468")

► pushing component manifests to "https://github.com/networktocode/nautobot-kubernetes"

► installing components in "flux-system" namespace

✔ installed components

✔ reconciled components

► determining if source secret "flux-system/flux-system" exists

► generating source secret

► applying source secret "flux-system/flux-system"

✔ reconciled source secret

► generating sync manifests

✔ generated sync manifests

✔ committed sync manifests to "main" ("bc4b896ac2da2264bf126e357e2b491a8de01644")

► pushing sync manifests to "https://github.com/networktocode/nautobot-kubernetes"

► applying sync manifests

✔ reconciled sync configuration

◎ waiting for Kustomization "flux-system/flux-system" to be reconciled

✔ Kustomization reconciled successfully

► confirming components are healthy

✔ helm-controller: deployment ready

✔ kustomize-controller: deployment ready

✔ notification-controller: deployment ready

✔ source-controller: deployment ready

✔ all components are healthy

After a few minutes, Flux is installed. If you list your Pods, you should see additional pods created in the flux-system namespace.

➜ ~ kubectl get pods -A

NAMESPACE NAME READY STATUS RESTARTS AGE

flux-system helm-controller-7f4bb54ddf-4wxn8 1/1 Running 0 4m47s

flux-system kustomize-controller-5b9955f9c7-lghgq 1/1 Running 0 4m47s

flux-system notification-controller-6c9b987cd8-z6w5s 1/1 Running 0 4m47s

flux-system source-controller-656d7789f7-62gbm 1/1 Running 0 4m47s

kube-system coredns-558bd4d5db-lz8wd 1/1 Running 0 3d6h

kube-system etcd-minikube 1/1 Running 0 3d6h

kube-system kube-apiserver-minikube 1/1 Running 0 3d6h

kube-system kube-controller-manager-minikube 1/1 Running 2 3d6h

kube-system kube-proxy-sq54f 1/1 Running 0 3d6h

kube-system kube-scheduler-minikube 1/1 Running 0 3d6h

kube-system storage-provisioner 1/1 Running 1 3d6h

Also, the repository now contains additional files to define the Flux configuration in the cluster.

.
└── nautobot-kubernetes
    ├── README.md
    └── clusters
        └── minikube
            └── flux-system
                ├── gotk-components.yaml
                ├── gotk-sync.yaml
                └── kustomization.yaml

4 directories, 4 files

Flux also installs some Custom Resource Definitions (CRDs) used for various purposes, as you will see later in this post. One of them, GitRepository, defines a Git repository that Flux will track. Flux defines one GitRepository object for itself, and you can create more GitRepository objects for other applications.

➜ ~ kubectl get GitRepository -A

NAMESPACE NAME URL AGE READY STATUS

flux-system flux-system https://github.com/networktocode/nautobot-kubernetes 8m26s True stored artifact for revision 'main/bc4b896ac2da2264bf126e357e2b491a8de01644'

➜ ~ kubectl get GitRepository -n flux-system flux-system -o yaml
apiVersion: source.toolkit.fluxcd.io/v1beta2
kind: GitRepository
metadata:
  creationTimestamp: "2022-09-09T13:24:52Z"
  finalizers:
  - finalizers.fluxcd.io
  generation: 1
  labels:
    kustomize.toolkit.fluxcd.io/name: flux-system
    kustomize.toolkit.fluxcd.io/namespace: flux-system
  name: flux-system
  namespace: flux-system
  resourceVersion: "67765"
  uid: f7882fdf-0bfb-4825-92ad-780619d2d790
spec:
  gitImplementation: go-git
  interval: 1m0s
  ref:
    branch: main
  secretRef:
    name: flux-system
  timeout: 60s
  url: https://github.com/networktocode/nautobot-kubernetes

<... Output omitted ...>

You can see that the spec section contains the URL, branch, and a couple of other parameters. Flux will track the repository https://github.com/networktocode/nautobot-kubernetes, and it will use the main branch, in my case. Of course, you can set up a different branch if you need to.

Your repository can contain more than just Kubernetes objects. You can have application code, tests, CI/CD definitions, etc. So, you have to tell Flux which files in your repository are for Kubernetes deployments. To do that, Flux introduces a CRD called Kustomization. This CRD connects a GitRepository object to a path in that repository. Apart from that, you can also specify an interval for reconciliation and some other parameters. A Flux Kustomization may point to a directory of Kubernetes objects or to a directory that contains a Kustomize kustomization.yaml file. Unfortunately, the term kustomization is overloaded here and can be confusing; more on this later.

There is one Kustomization object for Flux in the Git repository.

➜ ~ kubectl get kustomization -n flux-system flux-system

NAME AGE READY STATUS

flux-system 36m True Applied revision: main/bc4b896ac2da2264bf126e357e2b491a8de01644

➜ ~ kubectl get kustomization -n flux-system flux-system -o yaml
apiVersion: kustomize.toolkit.fluxcd.io/v1beta2
kind: Kustomization
metadata:
  creationTimestamp: "2022-09-09T13:24:52Z"
  finalizers:
  - finalizers.fluxcd.io
  generation: 1
  labels:
    kustomize.toolkit.fluxcd.io/name: flux-system
    kustomize.toolkit.fluxcd.io/namespace: flux-system
  name: flux-system
  namespace: flux-system
  resourceVersion: "71920"
  uid: 764d3ceb-8512-47fa-a9c9-32434fa3587c
spec:
  force: false
  interval: 10m0s
  path: ./clusters/minikube
  prune: true
  sourceRef:
    kind: GitRepository
    name: flux-system

<... Output omitted ...>

You can see from the output that there is a sourceRef in the spec section, and this section defines the GitRepository object tracked. The path in the repository is defined with the path parameter. Flux will check the ./clusters/minikube directory in the Git repository for changes every 10 minutes (as defined with the interval parameter). All changes detected will be applied to a Kubernetes cluster.

At this point, I have Flux up and running.

Set Up Git Repository

Now that I have explained the basics, I can show you how to deploy Nautobot using Flux. I will create a new directory called ./kubernetes in my Git repository, where I will store files required for Nautobot deployment.

.
└── nautobot-kubernetes
    ├── README.md
    ├── clusters
    │   └── minikube
    │       └── flux-system
    │           ├── gotk-components.yaml
    │           ├── gotk-sync.yaml
    │           └── kustomization.yaml
    └── kubernetes

I have a directory, but how will Flux know where to look for Kubernetes objects? Remember, I already have the GitRepository object, which is used to sync data from Git to Kubernetes. And I can use the Kustomization object to map the GitRepository object to the path in that repository.

So let’s create the Kustomization object in the ./clusters/minikube/flux-system directory. I will call it nautobot-kustomization.yaml.

.
└── nautobot-kubernetes
    ├── README.md
    ├── clusters
    │   └── minikube
    │       └── flux-system
    │           ├── gotk-components.yaml
    │           ├── gotk-sync.yaml
    │           ├── kustomization.yaml
    │           └── nautobot-kustomization.yaml
    └── kubernetes

5 directories, 5 files

---
apiVersion: kustomize.toolkit.fluxcd.io/v1beta2
kind: Kustomization
metadata:
  name: nautobot-kustomization
  namespace: flux-system
spec:
  force: false
  interval: 1m0s
  path: ./kubernetes
  prune: true
  sourceRef:
    kind: GitRepository
    name: flux-system

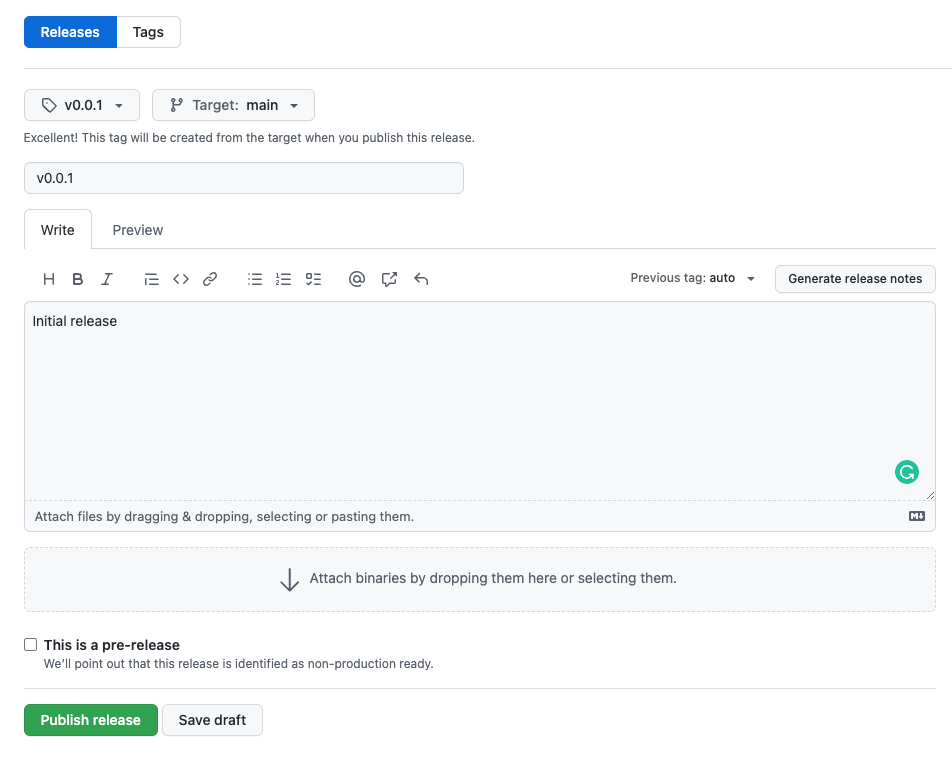

This object tells Flux to track changes in the GitRepository under the ./kubernetes directory. In my case, I will reuse the GitRepository object flux-system, but usually you would create another GitRepository object just for this purpose. You might ask what the point of doing that is. You get flexibility. Suppose you want to test a deployment from a different branch. In that case, you can change the branch you track with the GitRepository, and Flux will automatically deploy Nautobot from that branch.
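
As a rough sketch of what a dedicated GitRepository object could look like, the snippet below prints a manifest for a chosen branch. The function name render_gitrepository, the object name nautobot, the develop branch, and the output file name in the usage comment are all assumptions made for illustration; the apiVersion, URL, interval, and secret name simply mirror the flux-system object shown earlier.

```shell
# Hypothetical helper: print a GitRepository manifest that tracks the given
# branch. Field names mirror the flux-system object shown earlier; the object
# name "nautobot" is an assumption.
render_gitrepository() {
  branch="$1"
  cat <<EOF
---
apiVersion: source.toolkit.fluxcd.io/v1beta2
kind: GitRepository
metadata:
  name: nautobot
  namespace: flux-system
spec:
  interval: 1m0s
  ref:
    branch: ${branch}
  secretRef:
    name: flux-system
  url: https://github.com/networktocode/nautobot-kubernetes
EOF
}

# Example: write a manifest that tracks a (hypothetical) develop branch.
#   render_gitrepository develop > clusters/minikube/flux-system/nautobot-gitrepo.yaml
```

With such an object in place, the sourceRef in nautobot-kustomization.yaml would reference name: nautobot instead of flux-system, and switching branches would only require changing the ref of this one object.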

At this point, I have to introduce another object kind called Kustomization. Wait, what? You already told us about Kustomization. Unfortunately, this is a different kind with the same name. It is not a CRD installed by Flux but a configuration file read by Kustomize. If you look carefully at the apiVersion, you can see that the value is different. This one uses kustomize.config.k8s.io/v1beta1, while the “first” Kustomization uses kustomize.toolkit.fluxcd.io/v1beta2.

You can read more about the Kustomization definition in the official documentation. It can be used to compose a collection of resources or generate resources from other sources, such as ConfigMap objects from files.

Take a look at the repository carefully. There is already one kustomization.yaml file in the ./clusters/minikube/flux-system. It contains the following:

apiVersion: kustomize.config.k8s.io/v1beta1
kind: Kustomization
resources:
  - gotk-components.yaml
  - gotk-sync.yaml

You can see a list of resources deployed for this Kustomization. There are currently two files included in the deployment. So, I will add another file called nautobot-kustomization.yaml under the resources object.

apiVersion: kustomize.config.k8s.io/v1beta1
kind: Kustomization
resources:
  - gotk-components.yaml
  - gotk-sync.yaml
  - nautobot-kustomization.yaml

I can commit and push changes. After a couple of minutes, the Flux Kustomization object is applied:

➜ ~ kubectl get kustomization -n flux-system

NAME AGE READY STATUS

flux-system 2d21h True Applied revision: main/561d36ff58a7a0fa647e3206faf5ba2aa1cab149

nautobot-kustomization 4m48s False kustomization path not found: stat /tmp/kustomization-2081943599/kubernetes: no such file or directory

You can see that I have a new Kustomization object, but it is not ready yet. Git does not track empty directories, so the ./kubernetes directory is not included in the Git repository and Flux cannot find the path. I have to add some files to make the deployment successful.

I will first create the ./kubernetes/namespace.yaml file, where I will define the Namespace object.

---
apiVersion: v1
kind: Namespace
metadata:
  name: nautobot

Next, I will create the ./kubernetes/kustomization.yaml file, where I will specify Nautobot resources. The first resource will be the namespace.yaml.

apiVersion: kustomize.config.k8s.io/v1beta1
kind: Kustomization
namespace: nautobot
resources:
  - namespace.yaml

Right now, my directory structure looks like this:

.
└── nautobot-kubernetes
    ├── README.md
    ├── clusters
    │   └── minikube
    │       └── flux-system
    │           ├── gotk-components.yaml
    │           ├── gotk-sync.yaml
    │           ├── kustomization.yaml
    │           └── nautobot-kustomization.yaml
    └── kubernetes
        ├── kustomization.yaml
        └── namespace.yaml

5 directories, 7 files

Let’s commit and push the changes and wait a few minutes for Flux to sync the Git repository. After the Git repository is synced, I can check the status of the Kustomizations.

➜ ~ kubectl get kustomization -n flux-system

NAME AGE READY STATUS

flux-system 2d22h True Applied revision: main/2208cac47b74342c7b86120fb55fe34f47c87b7b

nautobot-kustomization 17m True Applied revision: main/2208cac47b74342c7b86120fb55fe34f47c87b7b

You can see that the Nautobot Kustomization has been successfully applied. I can see a namespace called nautobot, meaning the Namespace object was installed successfully.

➜ ~ kubectl get namespace nautobot

NAME STATUS AGE

nautobot Active 113s
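
Instead of re-running kubectl get by hand while waiting for a sync, the check can be polled. The following is a minimal sketch under stated assumptions: wait_until_true is a hypothetical helper name, and the attempt count and delay in the usage comment are arbitrary. The jsonpath expression reads the Ready condition that kubectl prints in the READY column above.

```shell
# Hypothetical helper: run a status command repeatedly until it prints "True".
# Arguments: maximum attempts, delay in seconds, then the command to run.
wait_until_true() {
  attempts="$1"
  delay="$2"
  shift 2
  i=0
  while [ "$i" -lt "$attempts" ]; do
    # Compare the command's output with the literal string "True".
    if [ "$("$@" 2>/dev/null)" = "True" ]; then
      return 0
    fi
    i=$((i + 1))
    sleep "$delay"
  done
  echo "Gave up after $attempts attempts" >&2
  return 1
}

# Example against a real cluster: poll the Ready condition for up to 5 minutes.
#   wait_until_true 30 10 kubectl get kustomization -n flux-system \
#     nautobot-kustomization \
#     -o jsonpath='{.status.conditions[?(@.type=="Ready")].status}'
```

kubectl also ships a built-in kubectl wait --for=condition=Ready command that covers the common case; the loop above is only meant to show the idea in a form that works with any status command.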

Introduction to HelmRelease

In the previous blog post, I showed how to deploy Nautobot using Helm charts, which is a nice approach if you want to simplify Nautobot deployment to Kubernetes. I explained how to pass the input parameters (inline or from a YAML file) to the helm command. This is a simple way to deploy Nautobot manually. However, as we want to follow GitOps principles, we want to deploy everything automatically from a Git repository.

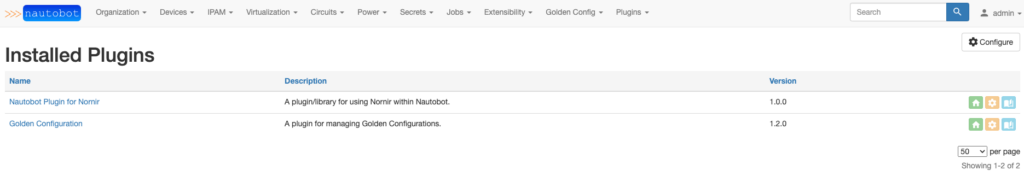

For this purpose, Flux has a special CRD called HelmRelease. If you take a look at the list of Pods installed by Flux, you will notice a Pod called helm-controller. This Pod watches for HelmRelease objects deployed to Kubernetes and, when it finds one, installs the referenced Helm chart. In principle, the helm-controller does the equivalent of running the helm install command.

If you remember from my previous blog post, I needed a Helm repository containing Helm charts for a particular application. When you add a repository manually with the helm command, the repository is registered only on your local machine, and the helm install command then deploys Kubernetes objects to the cluster from there. With Flux, the Helm controller runs in the cluster, so we need to tell it where to find the repository. That is why we have another CRD called HelmRepository.

Deploy Using HelmRelease

Now that you are familiar with HelmReleases, I can start adding Kubernetes objects to the ./kubernetes directory.

I will first define the Nautobot HelmRepository. I will create a file called ./kubernetes/nautobot-helmrepo.yaml with the following content:

---
apiVersion: "source.toolkit.fluxcd.io/v1beta2"
kind: "HelmRepository"
metadata:
  name: "nautobot"
  namespace: "nautobot"
spec:
  url: "https://nautobot.github.io/helm-charts/"
  interval: "10m"

I must update resources in the ./kubernetes/kustomization.yaml file to apply this file.

apiVersion: kustomize.config.k8s.io/v1beta1
kind: Kustomization
namespace: nautobot
resources:
  - namespace.yaml
  - nautobot-helmrepo.yaml

A few minutes after pushing the changes, I can see that the HelmRepository object was created.

➜ ~ kubectl get helmrepository -A

NAMESPACE NAME URL AGE READY STATUS

nautobot nautobot https://nautobot.github.io/helm-charts/ 9s True stored artifact for revision 'efa67ddff2b22097e642cc39918b7f7a27c53042e19ba19466693711fe7dd80e'

To deploy Nautobot using Helm charts, I need two things:

- A ConfigMap containing values for Helm charts

- A HelmRelease object connecting values and Helm charts

I will first create a ConfigMap object. The easiest way is to create a file with values in YAML format. The same format is used if you deploy Helm charts manually, using the --values option. I will go ahead and create a file called ./kubernetes/values.yaml with the following content:

---
postgresql:
  postgresqlPassword: "SuperSecret123"
redis:
  auth:
    password: "SuperSecret456"

The Kustomization object (kustomize.config.k8s.io/v1beta1) supports the ConfigMap generator, meaning you can generate a ConfigMap from a file directly. So I will update the ./kubernetes/kustomization.yaml file to generate a ConfigMap.

apiVersion: kustomize.config.k8s.io/v1beta1
kind: Kustomization
namespace: nautobot
resources:
  - namespace.yaml
  - nautobot-helmrepo.yaml
generatorOptions:
  disableNameSuffixHash: true
configMapGenerator:
  - name: "nautobot-values"
    files:
      - "values=values.yaml"

The configMapGenerator will create a ConfigMap with the name nautobot-values and the key called values containing the content of the file values.yaml. I will go ahead and push the changes.

After a few minutes, you can see the new ConfigMap created:

➜ ~ kubectl get configmaps -n nautobot nautobot-values -o yaml
apiVersion: v1
data:
  values: |
    ---
    postgresql:
      postgresqlPassword: "SuperSecret123"
    redis:
      auth:
        password: "SuperSecret456"
kind: ConfigMap
metadata:
  creationTimestamp: "2022-09-12T12:02:29Z"
  labels:
    kustomize.toolkit.fluxcd.io/name: nautobot-kustomization
    kustomize.toolkit.fluxcd.io/namespace: flux-system
  name: nautobot-values
  namespace: nautobot
  resourceVersion: "107230"
  uid: 7cbcd132-faca-4b09-8af5-f2f68feb8dbb

Now that the ConfigMap is created, I can finally create a HelmRelease object. I will put the following content into a file ./kubernetes/helmrelease.yaml:

---
apiVersion: "helm.toolkit.fluxcd.io/v2beta1"
kind: "HelmRelease"
metadata:
  name: "nautobot"
spec:
  interval: "30s"
  chart:
    spec:
      chart: "nautobot"
      version: "1.3.12"
      sourceRef:
        kind: "HelmRepository"
        name: "nautobot"
        namespace: "nautobot"
      interval: "20s"
  valuesFrom:
    - kind: "ConfigMap"
      name: "nautobot-values"
      valuesKey: "values"

This Kubernetes object defines the chart that will be installed. The sourceRef specifies the HelmRepository used for this HelmRelease. The valuesFrom section describes where to take values from.

I must add this file to resources in the ./kubernetes/kustomization.yaml.

apiVersion: kustomize.config.k8s.io/v1beta1
kind: Kustomization
namespace: nautobot
resources:
  - namespace.yaml
  - nautobot-helmrepo.yaml
  - helmrelease.yaml
generatorOptions:
  disableNameSuffixHash: true
configMapGenerator:
  - name: "nautobot-values"
    files:
      - "values=values.yaml"

This is how my repo structure looks at the end:

.
└── nautobot-kubernetes
    ├── README.md
    ├── clusters
    │   └── minikube
    │       └── flux-system
    │           ├── gotk-components.yaml
    │           ├── gotk-sync.yaml
    │           ├── kustomization.yaml
    │           └── nautobot-kustomization.yaml
    └── kubernetes
        ├── helmrelease.yaml
        ├── kustomization.yaml
        ├── namespace.yaml
        ├── nautobot-helmrepo.yaml
        └── values.yaml

5 directories, 10 files

I can now commit and push all changes. A few minutes after the push, you can see the HelmRelease object created in the nautobot namespace.

➜ ~ kubectl get helmreleases -n nautobot

NAME AGE READY STATUS

nautobot 81s Unknown Reconciliation in progress

After a couple more minutes, I can also see Nautobot pods up and running.

➜ ~ kubectl get pods -n nautobot

NAME READY STATUS RESTARTS AGE

nautobot-577c89f9c7-fzs2s 1/1 Running 1 2m28s

nautobot-577c89f9c7-hczbx 1/1 Running 1 2m28s

nautobot-celery-beat-7d9f864c58-2c9r7 1/1 Running 3 2m28s

nautobot-celery-worker-647cc6d8dd-npx42 1/1 Running 2 2m28s

nautobot-celery-worker-647cc6d8dd-plmjq 1/1 Running 2 2m28s

nautobot-postgresql-0 1/1 Running 0 2m28s

nautobot-redis-master-0 1/1 Running 0 2m28s

So, what’s nice about this approach is that when changes are merged (or pushed) to the main branch, everything is deployed automatically.

Let’s say I want to scale up the Nautobot Celery workers to four. I can override the default value by setting celeryWorker.replicaCount to 4 in ./kubernetes/values.yaml:

---
postgresql:
  postgresqlPassword: "SuperSecret123"
redis:
  auth:
    password: "SuperSecret456"
celeryWorker:
  replicaCount: 4

I can commit and push the changes, and after the Git repository is synced, changes are applied automatically. In the real GitOps paradigm, you would probably create a new branch, add changes, push changes, and create a Pull Request. After a colleague reviews changes and changes are merged, these are automatically applied to a production environment. In my case, I will push changes to the main branch directly.

After waiting a few minutes for the Git repository to sync, I can see four worker replicas:

➜ ~ kubectl get pods -n nautobot

NAME READY STATUS RESTARTS AGE

nautobot-577c89f9c7-fzs2s 1/1 Running 1 14m

nautobot-577c89f9c7-hczbx 1/1 Running 1 14m

nautobot-celery-beat-7d9f864c58-2c9r7 1/1 Running 3 14m

nautobot-celery-worker-647cc6d8dd-564p6 1/1 Running 0 2m58s

nautobot-celery-worker-647cc6d8dd-7xwtb 1/1 Running 0 2m58s

nautobot-celery-worker-647cc6d8dd-npx42 1/1 Running 2 14m

nautobot-celery-worker-647cc6d8dd-plmjq 1/1 Running 2 14m

nautobot-postgresql-0 1/1 Running 0 14m

nautobot-redis-master-0 1/1 Running 0 14m
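
To confirm the replica count without reading the list by eye, the pod listing can be piped through a small counter. This is only a sketch: the function name count_celery_workers is hypothetical, and the nautobot-celery-worker- prefix is taken from the pod names in the output above.

```shell
# Hypothetical helper: count Celery worker pods in `kubectl get pods` output
# read from standard input. The name prefix comes from the listing above.
count_celery_workers() {
  grep -c '^nautobot-celery-worker-'
}

# Example against a real cluster; the exit status is non-zero on a mismatch:
#   [ "$(kubectl get pods -n nautobot --no-headers | count_celery_workers)" -eq 4 ]
```

A check like this could run as a post-merge step to verify that the value committed to values.yaml has actually reached the cluster.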