Have you seen or taken the 2020 NetDevOps survey? If not, don’t worry, it is held yearly from Sept 30th to Oct 31st, so you will get your chance. To quickly recap, the survey’s intention is to understand which tools are most commonly used by Network Operators and Engineers to automate their day-to-day jobs. It is a very interesting survey, and I encourage you to read it.

If we take a look at this data, particularly the questions about tools used in automation, we will see that Ansible is the most popular one. Other tools, like SaltStack (aka Salt), are used as well, but far less often. If I were to speculate why SaltStack is not so popular among Network Engineers, I would say it is because Salt is better suited to managing servers than networking devices. So I thought that if I wrote a blog about Salt with references to Ansible, it might encourage engineers to learn this tool a bit more.

NOTE

I will do my best to address situations where features available to Salt do not have direct counterparts in Ansible.

Before you start using Salt, you need to get familiar with its terminology. The table below summarizes the terms that I will use in this blog:

| SaltStack Name | Description | Ansible Name | Description |
|---|---|---|---|
| Master | Server that commands-and-controls the minions. | Server | The machine where Ansible is installed. |
| Minion | Managed end-hosts where the commands are executed. | Hosts | The devices that are managed by the Ansible server. |
| Proxy Minion | End-hosts that cannot run the salt-minion service and can be controlled using proxy-minion setup. These hosts are mostly networking or IoT devices. | N/A | N/A |
| Pillars | Information (e.g., username, passwords, configuration parameters, variables, etc.) that needs to be defined and associated with one or more minion(s) in order to interact with or distribute to the end-host. | Inventory and Static variables | The Ansible Inventory and variables that need to be associated with the managed host(s). |
| Grains | Information (e.g., IP address, OS type, memory utilization etc.) that is obtained from the managed minion. Grains also can be statically assigned to the minions. | Fact | Information obtained from the host with the gather_facts or similar task operation. |
| Modules | Commands that are executed locally on the master or on the minion. | Modules | Commands that are executed locally on the server or on the end-host. |
| SLS Files | (S)a(l)t (S)tate files, also known as formulas, are the representation of the state in which the end-hosts should be. SLS files are mainly written in YAML, but other languages are also supported. | Playbook/Tasks | The file where one or more tasks that need to be executed on the end-host are defined. |

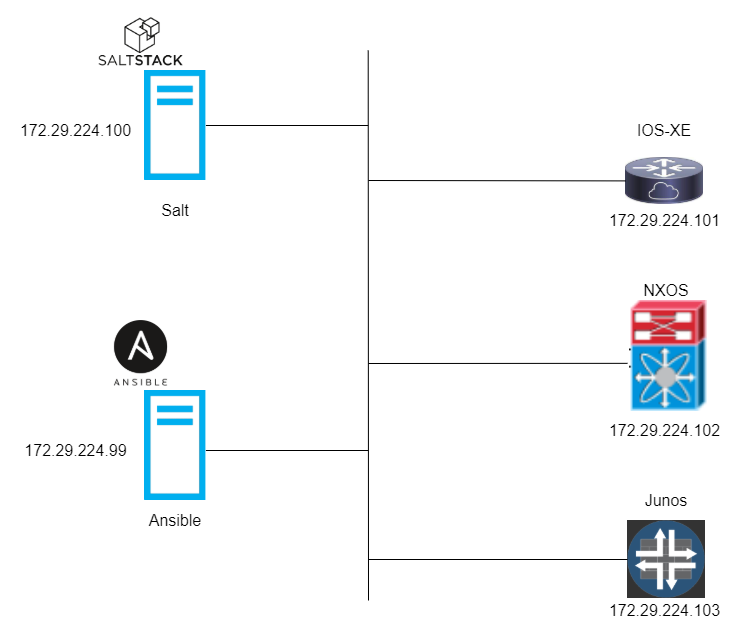

Let’s take a look at my playground. These are the hosts and related information involved in this blog:

Salt

Ansible

IOS-XE

NX-OS

Junos

The Salt installation can vary based on the OS that you are using, so I would suggest that you read the installation instructions that match your OS. At the end, you should have salt-master and salt-minion installed on your machine.

The Ansible installation is a bit more straightforward; use the pip install ansible==2.10.7 command to install the same version that I use.

The time has come to configure the Salt environment. Confirm that the salt-master service is active. If it is not, start it with the sudo salt-master -d command.

$ service salt-master status

● salt-master.service - The Salt Master Server

Loaded: loaded (/lib/systemd/system/salt-master.service; enabled; vendor preset: enabled)

Active: active (running) since Tue 2021-07-06 23:29:24 UTC; 7min ago

>>>

Trimmed for brevity

<<<

Using your favorite text editor, open the /etc/salt/master configuration file and append the below text to it:

file_roots: # Allows minions and proxy-minions to access SLS files with salt:// prefix
  base: # Name of the environment
    - /opt/salt/base/root/ # Full path where state and related files will be stored

pillar_roots: # Instructs master to look for pillar files at the specified location(s)
  base: # Name of the environment
    - /opt/salt/base/pillar # Full path where pillar files will be stored

Next, configure the proxy file in the /etc/salt/ directory and point it to the Salt master’s IP or FQDN, like so:

NOTE

In my setup, I am running the proxy service on the same machine where the salt-master is configured, so I will use localhost.

$ cat /etc/salt/proxy

master: localhost

Save the changes and restart the salt-master service using the sudo service salt-master restart command. After the restart, create the necessary folders with the sudo mkdir -p /opt/salt/base/{root,pillar} command.

$ sudo mkdir -p /opt/salt/base/{root,pillar}

$ ll /opt/salt/base/

total 16

drwxr-xr-x 4 root root 4096 Jul 7 00:17 ./

drwxr-xr-x 3 root root 4096 Jul 7 00:17 ../

drwxr-xr-x 2 root root 4096 Jul 7 00:17 pillar/

drwxr-xr-x 2 root root 4096 Jul 7 00:17 root/
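If you prefer to script this step, the same folder layout can be created from Python. This is only a minimal sketch: the create_salt_roots helper is a name I made up for illustration, and a temporary directory stands in for /opt/salt/base so that it runs without root privileges.

```python
from pathlib import Path
import tempfile


def create_salt_roots(base):
    """Create the state (root) and pillar folders under `base`."""
    created = []
    for name in ("root", "pillar"):
        path = Path(base) / name
        # parents=True and exist_ok=True mirror the behaviour of `mkdir -p`
        path.mkdir(parents=True, exist_ok=True)
        created.append(path)
    return created


# A temporary directory is used here instead of /opt/salt/base (assumption for the demo).
with tempfile.TemporaryDirectory() as tmp:
    for folder in create_salt_roots(Path(tmp) / "opt" / "salt" / "base"):
        print(folder.name, folder.is_dir())
```

Running the helper twice is safe, just like repeating mkdir -p.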

Great, the salt-master is ready. Now you can configure the pillar files for the proxy-minion service. These files contain instructions on how to communicate with the networking devices. Create the junos-pillar.sls file in the /opt/salt/base/pillar/ folder and populate it with the following data:

NOTE

Some vendors, such as Juniper and Cisco, have their own proxy types. Cisco currently has a proxy module only for the NX-OS platform. For IOS, you would need to use either netmiko or NAPALM. To avoid complications, we will stick with netmiko for all three platforms.

proxy:
  proxytype: netmiko # Proxy type
  device_type: juniper_junos # A setting required by the netmiko
  ip: 172.29.224.103 # IP address of the host
  username: admin
  password: admin123

Next, create the nxos-pillar.sls file in the same folder and populate it with the following data:

proxy:
  proxytype: netmiko
  device_type: cisco_nxos
  ip: 172.29.224.102
  username: admin
  password: admin123

Finally, create the ios-pillar.sls file:

proxy:
  proxytype: netmiko
  device_type: cisco_xe
  ip: 172.29.224.101
  username: admin
  password: admin123

NOTE

You can create other pillar files for the same devices and use different proxytypes. For Juniper devices you can use the junos proxy, and for Cisco NXOS devices you can use the nxos or nxos_api proxies.

To compare with Ansible, this is similar to the inventory file where you need to define the ansible_host, ansible_user, ansible_password and ansible_network_os variables.

[base]

IOS-XE ansible_host=172.29.224.101 ansible_user=admin ansible_password=admin123 ansible_network_os=ios ansible_connection=network_cli

NX-OS ansible_host=172.29.224.102 ansible_user=admin ansible_password=admin123 ansible_network_os=nxos ansible_connection=network_cli

Junos ansible_host=172.29.224.103 ansible_user=admin ansible_password=admin123 ansible_network_os=junos ansible_connection=netconf
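Since the pillar files and the inventory describe the same three devices, both can be produced from a single data set. Below is a minimal Python sketch of that idea. The DEVICES list and the render_pillar and render_inventory_line helpers are names I invented for illustration; they are not part of Salt or Ansible, and the values are simply copied from the files above.

```python
# Device data copied from the pillar files and the inventory shown above.
DEVICES = [
    {"name": "IOS-XE", "ip": "172.29.224.101", "device_type": "cisco_xe",
     "network_os": "ios", "connection": "network_cli"},
    {"name": "NX-OS", "ip": "172.29.224.102", "device_type": "cisco_nxos",
     "network_os": "nxos", "connection": "network_cli"},
    {"name": "Junos", "ip": "172.29.224.103", "device_type": "juniper_junos",
     "network_os": "junos", "connection": "netconf"},
]
USERNAME = "admin"
PASSWORD = "admin123"


def render_pillar(device):
    """Return the text of a netmiko proxy pillar file for one device."""
    return (
        "proxy:\n"
        "  proxytype: netmiko\n"
        f"  device_type: {device['device_type']}\n"
        f"  ip: {device['ip']}\n"
        f"  username: {USERNAME}\n"
        f"  password: {PASSWORD}\n"
    )


def render_inventory_line(device):
    """Return the matching Ansible INI inventory line for the same device."""
    return (
        f"{device['name']} ansible_host={device['ip']} "
        f"ansible_user={USERNAME} ansible_password={PASSWORD} "
        f"ansible_network_os={device['network_os']} "
        f"ansible_connection={device['connection']}"
    )


for device in DEVICES:
    print(render_pillar(device))
    print(render_inventory_line(device))
    print()
```

The side-by-side output makes the mapping visible: ip corresponds to ansible_host, and device_type plays a role similar to ansible_network_os.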

Okay, let’s take a quick break to understand how these files were constructed. The most important part is the proxy key. Salt will look for this key, and the information within it, to set up the proxy-minion service. The rest of the key-value pairs were defined based on the official documentation.

Now you need to create the pillar top.sls file (main file) where each pillar file will be associated with a respective proxy-minion name. The top.sls file should be located in the /opt/salt/base/pillar/ folder and should contain the following data:

base: # The name of the environment
  'Junos': # The name of the proxy-minion. Can be any name, but it is a good idea to put the device's hostname.
    - junos-pillar # This is the name of the previously created pillar file. Note the `.sls` suffix is not specified.
  'NX-OS':
    - nxos-pillar
  'IOS-XE':
    - ios-pillar

It is time to start the proxy-minion service for each host. For that, use the following commands:

NOTE

The value passed to the --proxyid argument should match the name of the proxy-minion defined in the top.sls file.

$ sudo salt-proxy --proxyid=Junos -d

$ sudo salt-proxy --proxyid=NX-OS -d

$ sudo salt-proxy --proxyid=IOS-XE -d

Finally, if everything was done properly, you should see three unaccepted keys after you issue the sudo salt-key --list-all command. These are the requests that the proxy services made to register the proxy-minions against the master node.

$ sudo salt-key --list-all

Accepted Keys:

Denied Keys:

Unaccepted Keys:

IOS-XE

Junos

NX-OS

Rejected Keys:

To accept all keys at once, issue the sudo salt-key --accept-all command. When prompted, press y.

$ sudo salt-key --accept-all

The following keys are going to be accepted:

Unaccepted Keys:

IOS-XE

Junos

NX-OS

Proceed? [n/Y] y

Key for minion IOS-XE accepted.

Key for minion Junos accepted.

Key for minion NX-OS accepted.

You made it to the fun part of the blog. Here you will start executing some ad hoc commands against the networking devices. To start, you will need to install some Python packages on both the Salt and Ansible hosts. Install them with the sudo pip install netmiko junos-eznc jxmlease yamlordereddictloader napalm command.

$ sudo pip install netmiko junos-eznc jxmlease yamlordereddictloader napalm

Collecting napalm

Downloading napalm-3.3.1-py2.py3-none-any.whl (256 kB)

|████████████████████████████████| 256 kB 91 kB/s

Collecting junos-eznc

Downloading junos_eznc-2.6.1-py2.py3-none-any.whl (195 kB)

|████████████████████████████████| 195 kB 113 kB/s

>>>

Trimmed for brevity

<<<

Now you can run the sudo salt "*" test.ping command on the salt-master:

$ sudo salt "*" test.ping

IOS-XE:

True

Junos:

True

NX-OS:

True

The Ansible counterpart command will be: ansible -i inv.ini base -m ping.

Now let’s take a look at the grains that salt master gathers from the devices. The sudo salt "*" grains.items command will reveal the below information. Similarly, you can do the same thing from the Ansible host using ansible -m ios_facts -i inv.ini IOS-XE, ansible -m nxos_facts -i inv.ini NX-OS, and ansible -m junos_facts -i inv.ini Junos commands.

NOTE

Unfortunately, netmiko does not pull grain information from the devices, but hopefully this gets fixed in the future.

Junos:

----------

cpuarch:

x86_64

cwd:

/

dns:

----------

domain:

ip4_nameservers:

- 192.168.65.5

ip6_nameservers:

nameservers:

- 192.168.65.5

options:

search:

sortlist:

fqdns:

gpus:

hwaddr_interfaces:

----------

id:

Junos

<<<

TRIMMED

>>>

NX-OS:

----------

cpuarch:

x86_64

cwd:

/

dns:

----------

domain:

ip4_nameservers:

- 192.168.65.5

ip6_nameservers:

nameservers:

- 192.168.65.5

options:

search:

sortlist:

fqdns:

gpus:

hwaddr_interfaces:

----------

id:

NX-OS

<<<

TRIMMED

>>>

IOS-XE:

----------

cpuarch:

x86_64

cwd:

/

dns:

----------

domain:

ip4_nameservers:

- 192.168.65.5

ip6_nameservers:

nameservers:

- 192.168.65.5

options:

search:

sortlist:

fqdns:

gpus:

hwaddr_interfaces:

----------

id:

IOS-XE

<<<

TRIMMED

>>>

The data below was gathered to demonstrate what grains look like when different proxytypes are used:

$ sudo salt "IOS-XE" napalm_net.facts

IOS-XE:

----------

comment:

out:

----------

fqdn:

IOS-XE.local.host

hostname:

IOS-XE

interface_list:

- GigabitEthernet1

- GigabitEthernet2

- GigabitEthernet3

- GigabitEthernet4

model:

CSR1000V

os_version:

Virtual XE Software (X86_64_LINUX_IOSD-UNIVERSALK9-M), Version 16.12.3, RELEASE SOFTWARE (fc5)

serial_number:

ABC123ABC123

uptime:

5820

vendor:

Cisco

result:

True

$ sudo salt "NX-OS" nxos.grains

NX-OS:

----------

hardware:

----------

Device name:

NX-OS

bootflash:

4287040 kB

plugins:

- Core Plugin

- Ethernet Plugin

software:

----------

BIOS:

version

BIOS compile time:

NXOS compile time:

12/22/2019 2:00:00 [12/22/2019 14:00:37]

NXOS image file is:

bootflash:///nxos.9.3.3.bin

$ sudo salt "Junos" junos.facts

Junos:

----------

facts:

----------

2RE:

False

HOME:

/var/home/admin

RE0:

----------

last_reboot_reason:

Router rebooted after a normal shutdown.

mastership_state:

master

model:

VSRX RE

status:

Testing

up_time:

9 minutes, 20 seconds

RE1:

None

RE_hw_mi:

False

current_re:

- master

- fpc0

- node

- fwdd

- member

- pfem

- re0

- fpc0.pic0

domain:

None

fqdn:

Junos

hostname:

Junos

>>>

Trimmed for brevity

<<<

You have probably noticed that for the example data, I used different modules, like junos.facts or nxos.grains. So why can’t we use these vendor-specific modules in our setup? This is due to the proxytype assigned to the device. Even though salt "Junos" junos.facts is a correct command, Salt checks whether the module is available for the proxytype assigned to the minion (netmiko in our case) and, if it is not, reports that the module is not available for use.

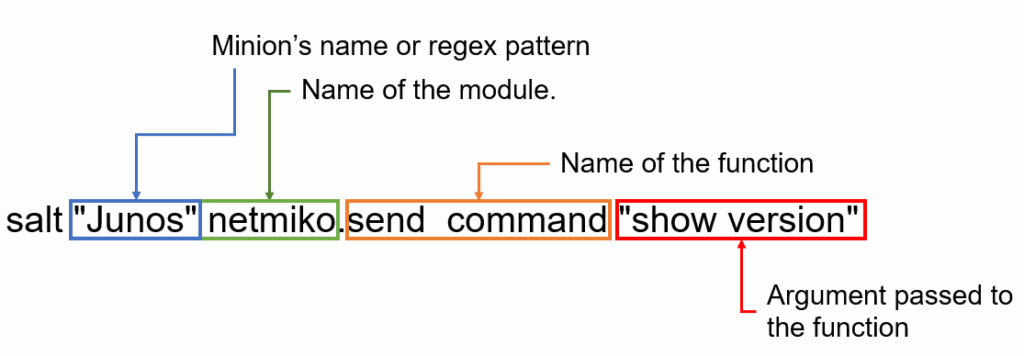

To understand the syntax of the salt shell command, refer to the accompanying image. The salt command allows commands to be executed on remote systems (minions) in parallel. All supported Salt modules are described in the official Salt documentation.

Here are some more commands for you to try:

sudo salt "Junos" netmiko.send_command "show version"

sudo salt --out json "IOS-XE" netmiko.send_command "show ip interface brief" use_textfsm=True

sudo salt "NX-OS" netmiko.send_config config_commands="['hostname NX-OS-SALT']"
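The commands above all follow the same pattern: optional flags, then the target, then the module function, then positional and key=value arguments. The short Python sketch below assembles such a command line from its parts. The build_salt_command helper is a hypothetical name of mine, not a Salt API, and it only builds the argument list; it does not run anything.

```python
import shlex


def build_salt_command(target, function, *args, out=None, **kwargs):
    """Assemble: salt [options] <target> <module.function> [args] [key=value]."""
    argv = ["sudo", "salt"]
    if out:
        # Output format flag, e.g. --out json
        argv += ["--out", out]
    argv += [target, function]
    # Positional arguments are passed to the module function as-is
    argv += [str(arg) for arg in args]
    # Keyword arguments use the key=value form
    argv += [f"{key}={value}" for key, value in kwargs.items()]
    return argv


cmd = build_salt_command(
    "IOS-XE",
    "netmiko.send_command",
    "show ip interface brief",
    out="json",
    use_textfsm=True,
)
# shlex.join quotes arguments that contain spaces, so the line can be pasted into a shell
print(shlex.join(cmd))
```

If you wanted to actually execute the result, the list could be handed to subprocess.run, assuming the salt CLI is installed on that machine.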

Ad hoc commands are very useful for checking or obtaining information from multiple devices, but they are limited because they cannot act upon that information the way Salt State files (formulas) can. In Ansible, Playbooks serve the same purpose.

You can start by creating a new pillar file that contains the NTP server information. The file should be placed in the /opt/salt/base/pillar/ folder, be named ntp_servers.sls, and contain the following data:

ntp_servers:
  - 172.29.224.1

Next, the top.sls file needs to be updated with instructions permitting all proxy-minions to use the information contained in the ntp_servers.sls file.

$ cat /opt/salt/base/pillar/top.sls

base: # Environment name
  'Junos': # proxy-minion name
    - junos-pillar # pillar file
  'IOS-XE':
    - ios-pillar
  'NX-OS':
    - nxos-pillar
  '*': # All hosts will match.
    - ntp_servers
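To see why every proxy-minion ends up with both its own pillar file and ntp_servers, it helps to remember that top file targets are matched as shell-style globs by default. The Python sketch below models that matching with the standard fnmatch module. It is a simplified illustration, not Salt's actual implementation, and it ignores other matcher types; the TOP dictionary and the pillars_for helper are names I made up.

```python
from fnmatch import fnmatchcase

# The pillar top file from above, expressed as a Python dictionary.
TOP = {
    "base": {
        "Junos": ["junos-pillar"],
        "IOS-XE": ["ios-pillar"],
        "NX-OS": ["nxos-pillar"],
        "*": ["ntp_servers"],
    }
}


def pillars_for(minion_id, top=TOP, env="base"):
    """Return the pillar files whose glob target matches the minion ID."""
    files = []
    for pattern, names in top[env].items():
        # Each target is treated as a glob pattern, so '*' matches every ID
        if fnmatchcase(minion_id, pattern):
            files.extend(names)
    return files


for minion in ("Junos", "IOS-XE", "NX-OS"):
    print(minion, pillars_for(minion))
```

A minion whose ID is not listed explicitly would still receive ntp_servers through the '*' target.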

Now you need to refresh the pillar information by using the sudo salt "*" saltutil.refresh_pillar command.

IOS-XE:

True

Junos:

True

NX-OS:

True

Confirm that information is available to all three hosts with sudo salt "*" pillar.data or sudo salt "*" pillar.items commands:

$ sudo salt "*" pillar.data

IOS-XE:

----------

ntp_servers:

- 172.29.224.1

proxy:

----------

driver:

ios

host:

172.29.224.101

password:

admin123

provider:

napalm_base

proxytype:

napalm

username:

admin

NX-OS:

----------

ntp_servers:

- 172.29.224.1

proxy:

----------

connection:

ssh

host:

172.29.224.102

key_accept:

True

password:

admin123

prompt_name:

NX-OS

proxytype:

nxos

username:

admin

Junos:

----------

ntp_servers:

- 172.29.224.1

proxy:

----------

host:

172.29.224.103

password:

admin123

port:

830

proxytype:

junos

username:

admin

Next, you need to create a Jinja template for the NTP configuration. The IOS-XE and NX-OS devices share the same configuration syntax, so all you need to do is check the ID value stored in grains to identify the Junos device and construct the proper configuration syntax for it. Name the file ntp_config.set and store it in the /opt/salt/base/root/ folder. The contents of the file should look like this:

{% set ntp = pillar['ntp_servers'][0] %}

{% set device_type = grains['id'] %}

{% if device_type == "Junos" %}

set system ntp server {{ ntp }}

{% else %}

ntp server {{ ntp }}

{% endif %}
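If Jinja is new to you, the branching in this template can be read as ordinary code. The following Python function is a plain stand-in for the template logic, assuming the same inputs (the minion ID from grains and the ntp_servers list from the pillar). It is not how Salt renders the file; the render_ntp_config name is mine.

```python
def render_ntp_config(minion_id, ntp_servers):
    """Mimic the template: pick the first NTP server and the right syntax."""
    ntp = ntp_servers[0]  # same as pillar['ntp_servers'][0] in the template
    if minion_id == "Junos":
        # Junos uses set-style configuration commands
        return f"set system ntp server {ntp}"
    # IOS-XE and NX-OS share the same syntax
    return f"ntp server {ntp}"


for minion in ("Junos", "NX-OS", "IOS-XE"):
    print(minion, "->", render_ntp_config(minion, ["172.29.224.1"]))
```

Note that the decision is based purely on the minion ID, so a Junos device registered under a different name would fall into the else branch.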

Finally, you need to create the Salt State file (aka formula) and specify what it should do. The file needs to be located in the /opt/salt/base/root/ folder and named update_ntp.sls, with the following contents:

UPDATE NTP CONFIGURATION: # The ID name of the state. Should be unique if multiple IDs are present in the formula.
  module.run: # state function to run module functions
    - name: netmiko.send_config # Name of the module function
    - config_file: salt://ntp_config.set # Argument that needs to be passed to the module function. The salt:// will convert to `/opt/salt/base/root/` path.

You might ask: “Why did we use module.run to run netmiko.send_config?” That’s a great question! SaltStack separates modules by their purposes, and execution modules cannot be used to maintain a state on the minion. For that, the state modules exist. Since netmiko does not have a state module, module.run allows you to run its execution module functions from the Salt State file.
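It may also help to look at the state file as data. Once the YAML is parsed, the formula is just a nested dictionary, and the list under module.run carries the function name plus its arguments. The sketch below walks that structure in plain Python. It is a simplified illustration under my own assumptions, not Salt's state compiler, and the flatten_state helper is a hypothetical name.

```python
# The update_ntp.sls formula, as it would look after the YAML is parsed.
STATE = {
    "UPDATE NTP CONFIGURATION": {
        "module.run": [
            {"name": "netmiko.send_config"},
            {"config_file": "salt://ntp_config.set"},
        ]
    }
}


def flatten_state(state):
    """Turn each state ID into (id, state function, module function, kwargs)."""
    calls = []
    for state_id, body in state.items():
        for state_function, arg_list in body.items():
            kwargs = {}
            # Every list item is a single-key dictionary; merge them together
            for item in arg_list:
                kwargs.update(item)
            # 'name' holds the execution module function that module.run calls
            function = kwargs.pop("name")
            calls.append((state_id, state_function, function, kwargs))
    return calls


for call in flatten_state(STATE):
    print(call)
```

Read this way, the formula says: under the ID UPDATE NTP CONFIGURATION, use module.run to call netmiko.send_config with config_file as its argument.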

Okay, let’s compare the state file with an Ansible playbook. This is how a playbook that would do the same thing would look:

---
- name: UPDATE NTP CONFIGURATION
  hosts: base
  gather_facts: no
  vars:
    pillar:
      ntp_servers:
        - 172.29.224.1

  tasks:
    - name: 5 - UPDATE NTP CONFIGURATION FOR JUNOS
      junos_config:
        src: ntp_config.set
      vars:
        grains:
          id: Junos
      when: "ansible_network_os == 'junos'"

    - name: 10 - UPDATE NTP CONFIGURATION FOR NX-OS
      nxos_config:
        src: ntp_config.set
      vars:
        grains:
          id: NX-OS
      when: "ansible_network_os == 'nxos'"

    - name: 15 - UPDATE NTP CONFIGURATION FOR IOS
      ios_config:
        src: ntp_config.set
      vars:
        grains:
          id: IOS-XE
      when: "ansible_network_os == 'ios'"

The salt "*" state.apply update_ntp command will apply the configuration:

$ salt "*" state.apply update_ntp

IOS:

----------

ID: UPDATE NTP CONFIGURATION

Function: module.run

Name: netmiko.send_config

Result: True

Comment: Module function netmiko.send_config executed

Started: 02:26:17.021615

Duration: 858.925 ms

Changes:

----------

ret:

configure terminal

Enter configuration commands, one per line. End with CNTL/Z.

IOS-XE(config)#ntp server 172.29.224.1

IOS-XE(config)#end

IOS-XE#

Summary for IOS

------------

Succeeded: 1 (changed=1)

Failed: 0

------------

Total states run: 1

Total run time: 858.925 ms

NXOS:

----------

ID: UPDATE NTP CONFIGURATION

Function: module.run

Name: netmiko.send_config

Result: True

Comment: Module function netmiko.send_config executed

Started: 02:26:17.074178

Duration: 5612.827 ms

Changes:

----------

ret:

configure terminal

Enter configuration commands, one per line. End with CNTL/Z.

NX-OS(config)# ntp server 172.29.224.1

NX-OS(config)# end

NX-OS#

Summary for NXOS

------------

Succeeded: 1 (changed=1)

Failed: 0

------------

Total states run: 1

Total run time: 5.613 s

SRX:

----------

ID: UPDATE NTP CONFIGURATION

Function: module.run

Name: netmiko.send_config

Result: True

Comment: Module function netmiko.send_config executed

Started: 02:26:17.083703

Duration: 11961.207 ms

Changes:

----------

ret:

configure

Entering configuration mode

[edit]

admin@Junos# set system ntp server 172.29.224.1

[edit]

admin@Junos# exit configuration-mode

Exiting configuration mode

admin@Junos>

Summary for SRX

------------

Succeeded: 1 (changed=1)

Failed: 0

------------

Total states run: 1

Total run time: 11.961 s

SaltStack is a very powerful tool, and covering all of its aspects, such as reactors and syslog engines, here would be impossible. But I hope that the information presented above was enough for you to get started learning it. If you have any questions, feel free to leave a comment below, and I will do my best to answer them!

-Armen
