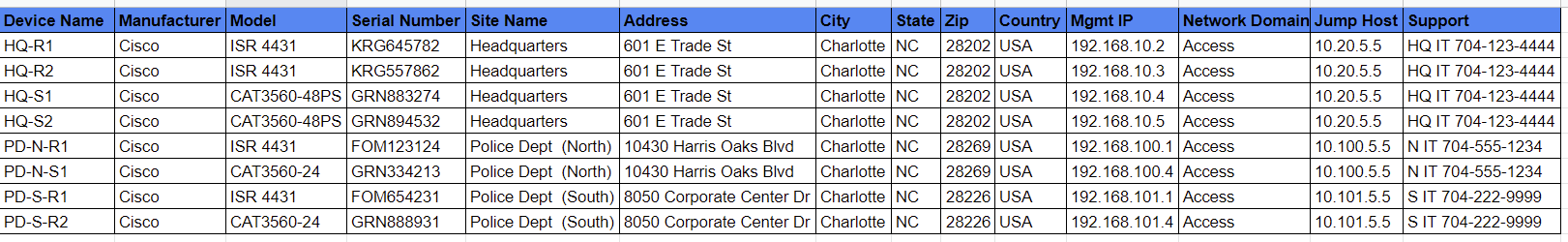

In Part 1 of the Introduction to Structured Data, David Cole explained what structured data is and why it is important. In Part 2, we’ll take a look at interacting with structured data in a programmatic manner.

To keep the concepts digestible, we’ll utilize the examples provided in Part 1 throughout this blog.

CSV

There are a number of libraries that can interact with Excel, but the easiest way to interact with spreadsheets in Python is to convert the spreadsheet to a CSV file.

The first line of the CSV file is the header line. The subsequent lines are the data that we’ll be working with. The data in each column corresponds with its column header line.

The first two lines of the CSV file are represented below:

Device Name,Manufacturer,Model,Serial Number,Site Name,Address,City,State,Zip,Country,Mgmt IP,Network Domain,Jump Host,Support

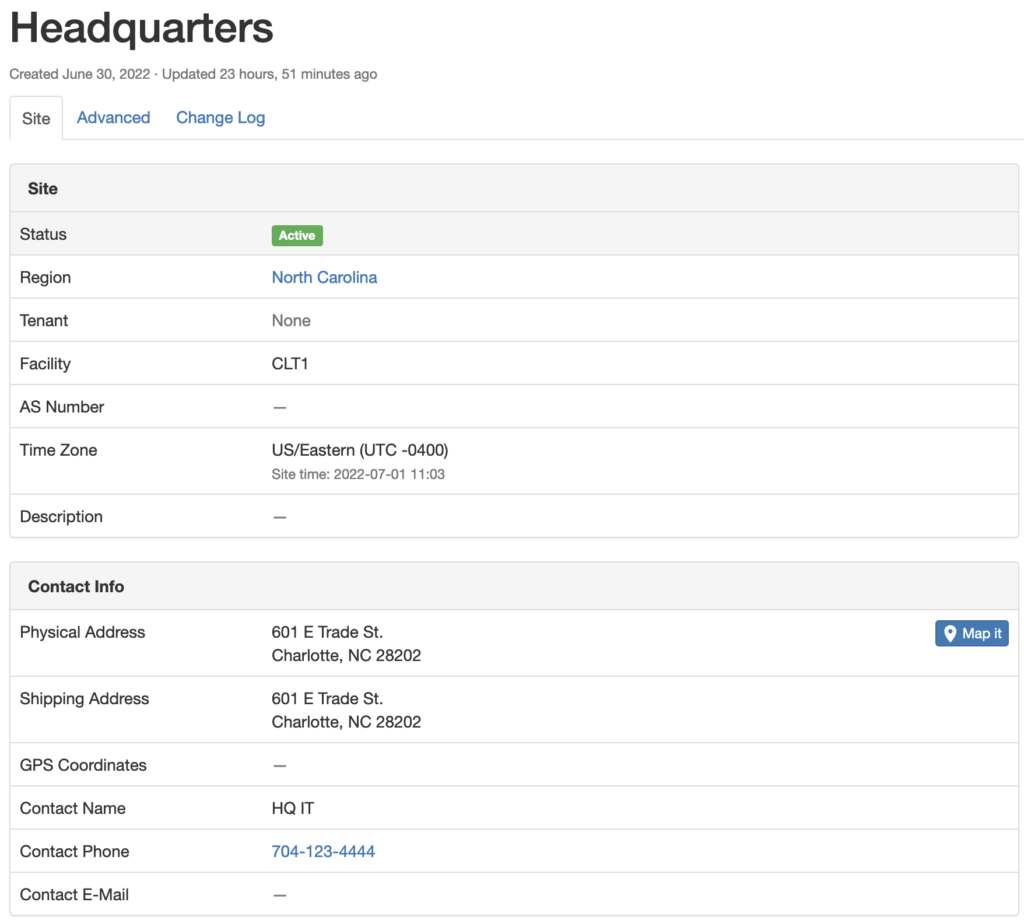

HQ-R1,Cisco,ISR 4431,KRG645782,Headquarters,601 E Trade St,Charlotte,NC,28202,USA,192.168.10.2,Access,10.20.5.5,HQ IT 704-123-4444

Below is a simple Python script to convert the CSV contents into a Python dictionary.

import csv

data = []

with open("structured-data.csv") as csv_file:

rows = csv.reader(csv_file)

for row in rows:

data.append(row)

headers = data[0]

data.pop(0)

data_dict = []

for row in data:

inventory_item = dict()

for item in range(len(row)):

inventory_item[headers[item]] = row[item]

data_dict.append(inventory_item)

When the CSV file is opened and rendered in Python, it’s converted into a list of lists. The first two lines of this representation are below.

[['Device Name', 'Manufacturer', 'Model', 'Serial Number', 'Site Name', 'Address', 'City', 'State', 'Zip', 'Country', 'Mgmt IP', 'Network Domain', 'Jump Host', 'Support'], ['HQ-R1', 'Cisco', 'ISR 4431', 'KRG645782', 'Headquarters', '601 E Trade St', 'Charlotte', 'NC', '28202', 'USA', '192.168.10.2', 'Access', '10.20.5.5', 'HQ IT 704-123-4444']]

As you can see, the first item in the list is a list of the CSV headers. The second list item is a list of the first row of the CSV. This continues until all rows are represented.

The Python script assumes the first row is the headers row, assigns a variable to that list item, and then removes that list item from the overall list. It then iterates through the list and creates a list of dictionaries that utilize the header items as dictionary keys and the row items as their corresponding dictionary values.

The result is a data structure that represents the data from the CSV file.

In [3]: data_dict[0]

Out[3]:

{'Device Name': 'HQ-R1',

'Manufacturer': 'Cisco',

'Model': 'ISR 4431',

'Serial Number': 'KRG645782',

'Site Name': 'Headquarters',

'Address': '601 E Trade St',

'City': 'Charlotte',

'State': 'NC',

'Zip': '28202',

'Country': 'USA',

'Mgmt IP': '192.168.10.2',

'Network Domain': 'Access',

'Jump Host': '10.20.5.5',

'Support': 'HQ IT 704-123-4444'}

JSON

JSON is an acronym that stands for “JavaScript Object Notation”. It is a serialization format that represents structured data in a textual format. The structured data that represents the textual string in JSON is essentially a Python dictionary.

This can be seen in the example.

In [4]: import json

In [5]: type(data_dict)

Out[5]: list

In [6]: type(data_dict[0])

Out[6]: dict

In [8]: data_dict[0]

In [8]: data_dict[0]

Out[8]:

{'Device Name': 'HQ-R1',

'Manufacturer': 'Cisco',

'Model': 'ISR 4431',

'Serial Number': 'KRG645782',

'Site Name': 'Headquarters',

'Address': '601 E Trade St',

'City': 'Charlotte',

'State': 'NC',

'Zip': '28202',

'Country': 'USA',

'Mgmt IP': '192.168.10.2',

'Network Domain': 'Access',

'Jump Host': '10.20.5.5',

'Support': 'HQ IT 704-123-4444'}

In [12]: json_data = json.dumps(data_dict[0])

In [13]: type(json_data)

Out[13]: str

In [14]: json_data

Out[14]: '{"Device Name": "HQ-R1", "Manufacturer": "Cisco", "Model": "ISR 4431", "Serial Number": "KRG645782", "Site Name": "Headquarters", "Address": "601 E Trade St", "City": "Charlotte", "State": "NC", "Zip": "28202", "Country": "USA", "Mgmt IP": "192.168.10.2", "Network Domain": "Access", "Jump Host": "10.20.5.5", "Support": "HQ IT 704-123-4444"}'

You can convert the entire data_dict into JSON utilizing the same json.dumps() method as well.

In the above example, we took the first list item from data_dict and converted it to a JSON object. JSON objects can be converted into a Python dictionary utilizing the json.loads() method.

In [17]: new_data = json.loads(json_data)

In [18]: type(new_data)

Out[18]: dict

In [19]: new_data

Out[19]:

{'Device Name': 'HQ-R1',

'Manufacturer': 'Cisco',

'Model': 'ISR 4431',

'Serial Number': 'KRG645782',

'Site Name': 'Headquarters',

'Address': '601 E Trade St',

'City': 'Charlotte',

'State': 'NC',

'Zip': '28202',

'Country': 'USA',

'Mgmt IP': '192.168.10.2',

'Network Domain': 'Access',

'Jump Host': '10.20.5.5',

'Support': 'HQ IT 704-123-4444'}

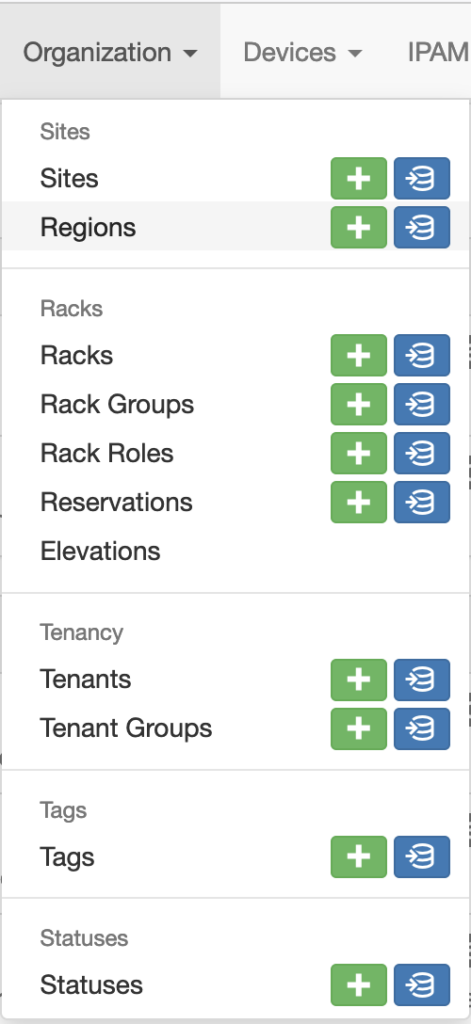

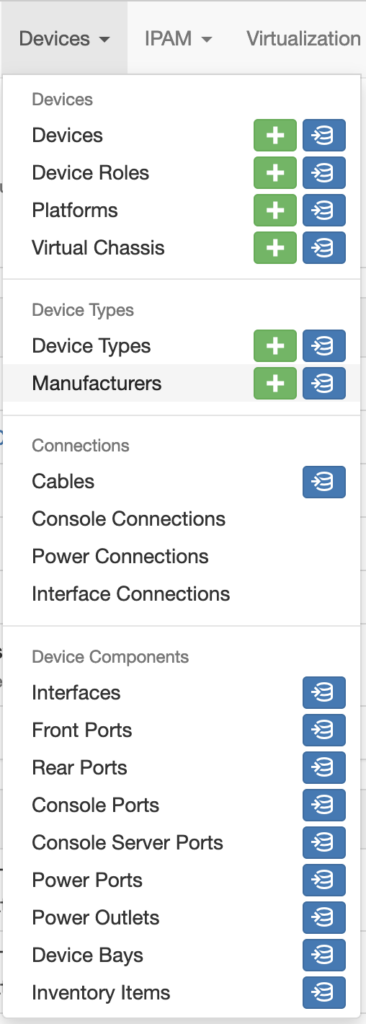

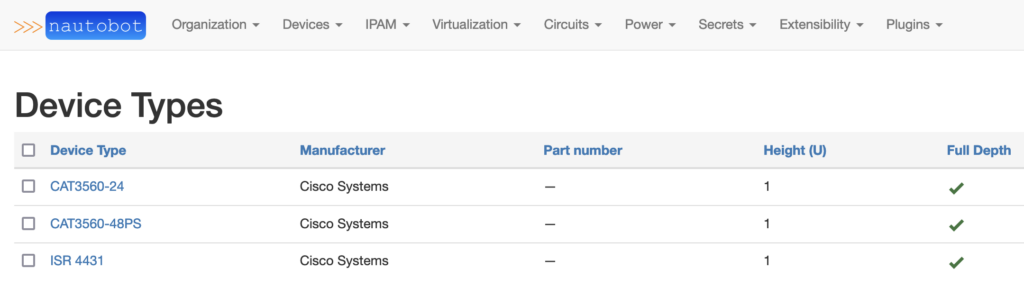

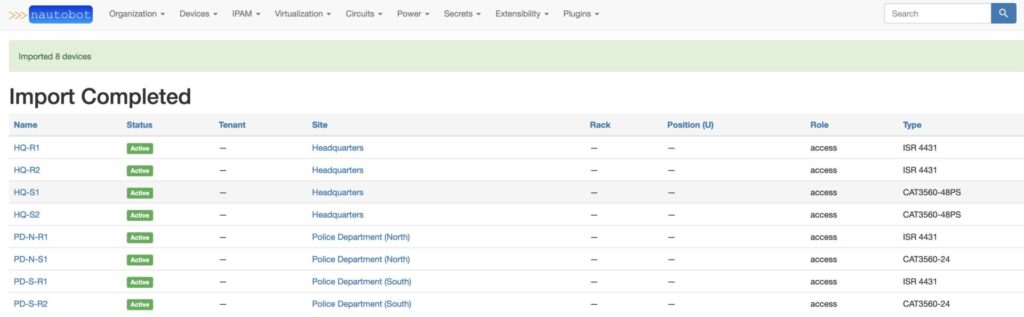

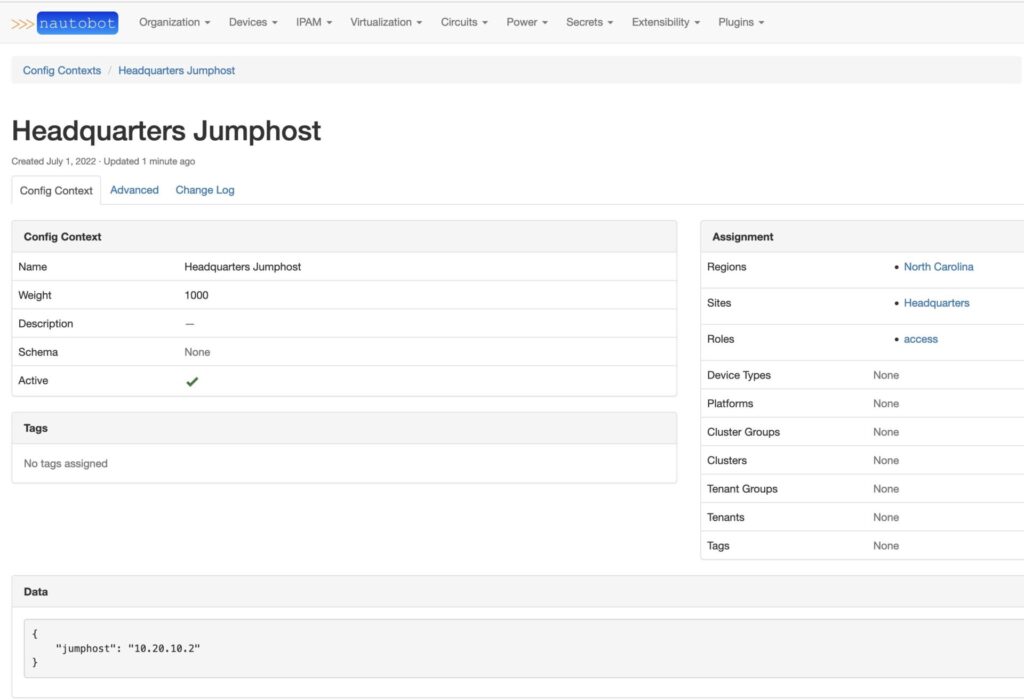

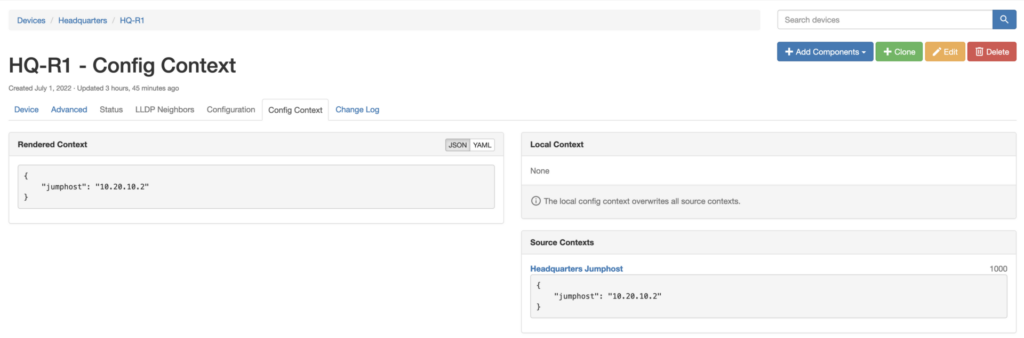

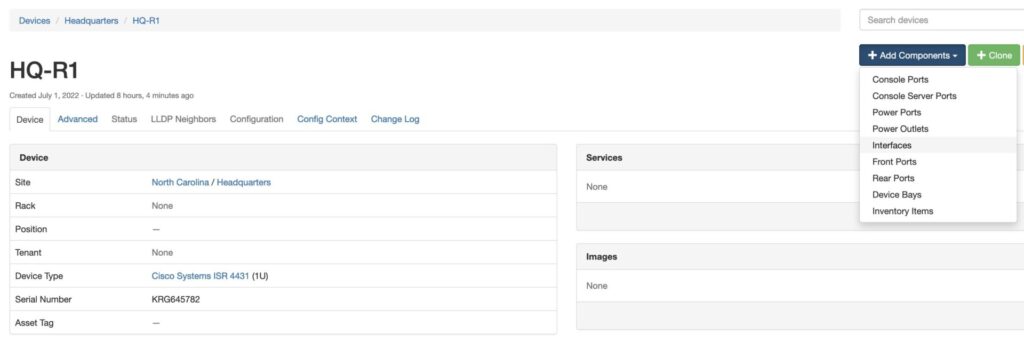

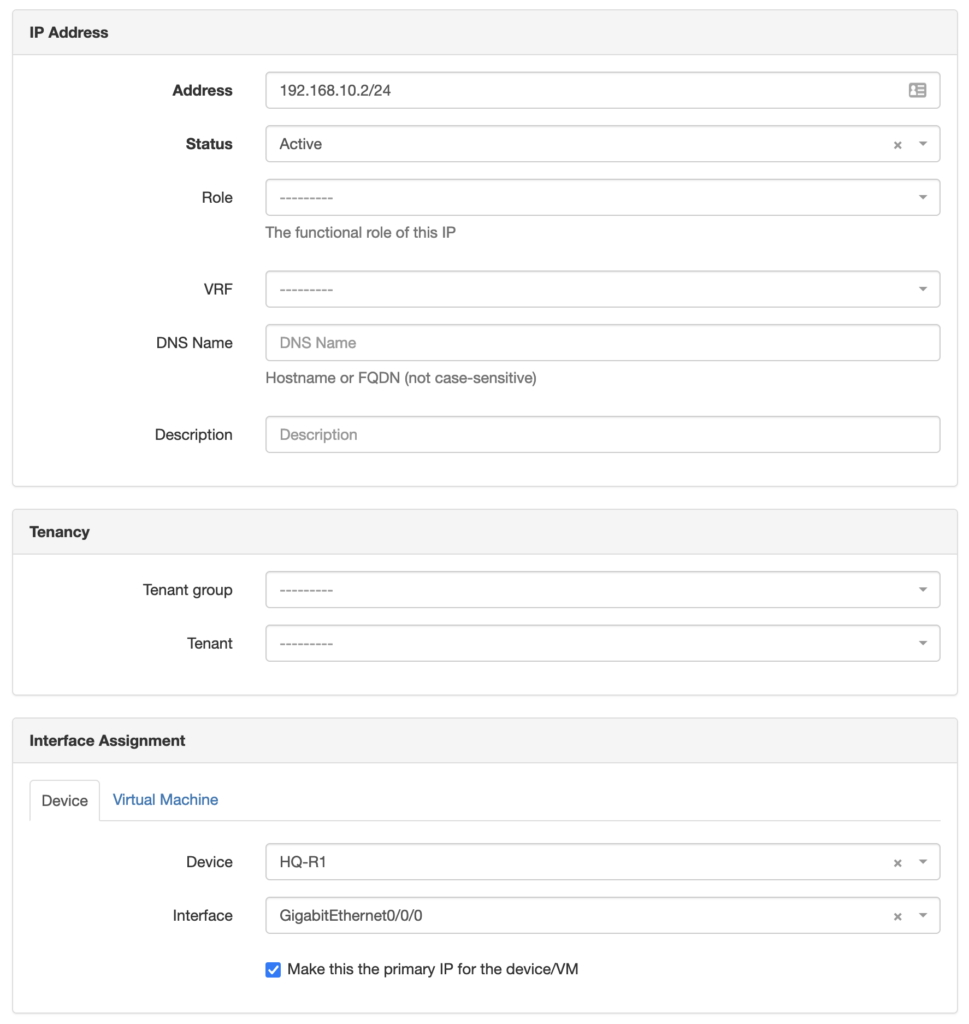

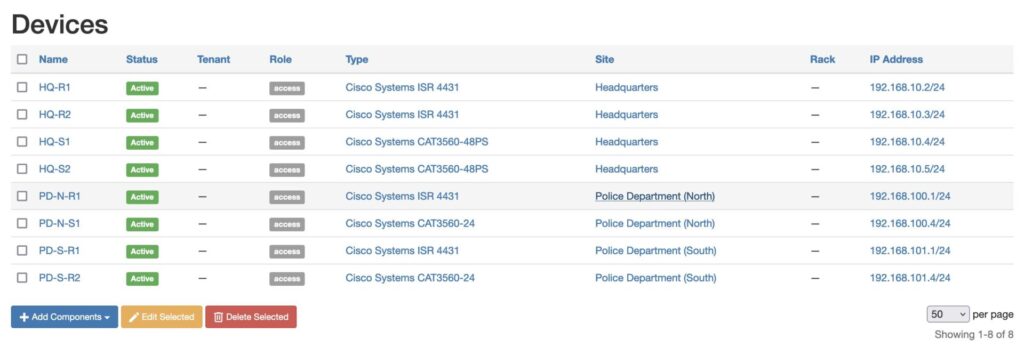

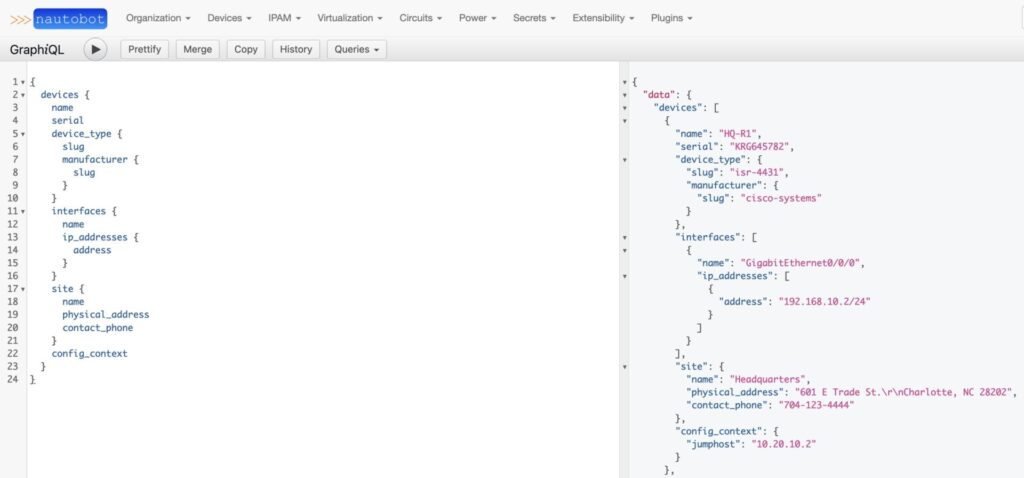

JSON is used often in modern development environments. Today, REST APIs generally use JSON as the mechanism to perform CRUD (Create, Read, Update, Delete) operations within software programmatically and to transport data between systems. Nautobot uses a REST API that allows for CRUD operations to be performed within Nautobot. All of the data payloads that are used to the API functions are in a JSON format.

XML

XML is an acronym that stands for eXtensible Markup Language. XML serves the same purpose that JSON serves. Many APIs utilize XML as their method for performing CRUD operations and transporting data between systems. Specifically in the networking programmability arena, XML is used as the method for transporting data while utilizing protocols like NETCONF to configure devices.

Let’s create an XML object based on an example data structure that we’ve utilized.

In [61]: new_data

Out[61]:

{'Device Name': 'HQ-R1',

'Manufacturer': 'Cisco',

'Model': 'ISR 4431',

'Serial Number': 'KRG645782',

'Site Name': 'Headquarters',

'Address': '601 E Trade St',

'City': 'Charlotte',

'State': 'NC',

'Zip': '28202',

'Country': 'USA',

'Mgmt IP': '192.168.10.2',

'Network Domain': 'Access',

'Jump Host': '10.20.5.5',

'Support': 'HQ IT 704-123-4444'}

from xml.etree.ElementTree import Element,tostring

site = Element("site")

for k,v in new_data.items():

child = Element(k)

child.text = str(v)

site.append(child)

<span role="button" tabindex="0" data-code="In [72]: tostring(site)

Out[72]: b'<site><Device Name>HQ-R1</Device Name><Manufacturer>Cisco</Manufacturer><Model>ISR 4431</Model><Serial Number>KRG645782</Serial Number><Site Name>Headquarters</Site Name><Address>601 E Trade St</Address><City>Charlotte</City><State>NC</State><Zip>28202</Zip><Country>USA</Country><Mgmt IP>192.168.10.2</Mgmt IP><Network Domain>Access</Network Domain><Jump Host>10.20.5.5</Jump Host><Support>HQ IT 704-123-4444</Support>

In [72]: tostring(site)

Out[72]: b'<site><Device Name>HQ-R1</Device Name><Manufacturer>Cisco</Manufacturer><Model>ISR 4431</Model><Serial Number>KRG645782</Serial Number><Site Name>Headquarters</Site Name><Address>601 E Trade St</Address><City>Charlotte</City><State>NC</State><Zip>28202</Zip><Country>USA</Country><Mgmt IP>192.168.10.2</Mgmt IP><Network Domain>Access</Network Domain><Jump Host>10.20.5.5</Jump Host><Support>HQ IT 704-123-4444</Support></site>'

With the XML object created, we can utilize the Python XML library to work with the XML object.

In [96]: for item in site:

...: print(f"{item.tag} | {item.text}")

...:

Device Name | HQ-R1

Manufacturer | Cisco

Model | ISR 4431

Serial Number | KRG645782

Site Name | Headquarters

Address | 601 E Trade St

City | Charlotte

State | NC

Zip | 28202

Country | USA

Mgmt IP | 192.168.10.2

Network Domain | Access

Jump Host | 10.20.5.5

Support | HQ IT 704-123-4444

You can also search the XML object for specific values.

In [97]: site.find("Jump Host").text

...:

Out[97]: '10.20.5.5'

YAML

YAML stands for Yet Another Markup Language. Because YAML is easy to learn and easy to read and has been widely adopted, it’s often a network engineer’s first exposure to a programmatic data structure when pursuing network automation. It’s widely used in automation tools like Ansible and Salt. (https://docs.saltproject.io/en/latest/topics/index.html).

YAML is easy to learn and easy to read. Given this, it has been widely adopted.

Let’s create a basic YAML object based on our previous examples.

import yaml

yaml_data = yaml.dump(data_dict)

print(yaml_data[0:2])

- Address: 601 E Trade St

City: Charlotte

Country: USA

Device Name: HQ-R1

Jump Host: 10.20.5.5

Manufacturer: Cisco

Mgmt IP: 192.168.10.2

Model: ISR 4431

Network Domain: Access

Serial Number: KRG645782

Site Name: Headquarters

State: NC

Support: HQ IT 704-123-4444

Zip: '28202'

- Address: 601 E Trade St

City: Charlotte

Country: USA

Device Name: HQ-R2

Jump Host: 10.20.5.5

Manufacturer: Cisco

Mgmt IP: 192.168.10.3

Model: ISR 4431

Network Domain: Access

Serial Number: KRG557862

Site Name: Headquarters

State: NC

Support: HQ IT 704-123-4444

Zip: '28202'

With the instance of yaml.dump(data_dict[0:2]), I created a YAML structure from the first two entries of our previous examples. This creates a list of two inventory items that describes their site details.

As you can see, YAML is very easy to read. Out of the programatic data structures that we’ve covered to this point, YAML is the easiest to learn and read.

Usually, as a network automation engineer, you’re not going to be creating YAML data from Python dictionaries. It’s usually the other way around. Usually, the YAML files are created by engineers to describe aspects of their device inventory. You then consume the YAML files and take action on them.

Using the yaml library, we can convert the data into a Python dictionary that we can take action on.

In [19]: yaml.safe_load(yaml_data)

Out[19]:

[{'Address': '601 E Trade St',

'City': 'Charlotte',

'Country': 'USA',

'Device Name': 'HQ-R1',

'Jump Host': '10.20.5.5',

'Manufacturer': 'Cisco',

'Mgmt IP': '192.168.10.2',

'Model': 'ISR 4431',

'Network Domain': 'Access',

'Serial Number': 'KRG645782',

'Site Name': 'Headquarters',

'State': 'NC',

'Support': 'HQ IT 704-123-4444',

'Zip': '28202'},

{'Address': '601 E Trade St',

'City': 'Charlotte',

'Country': 'USA',

'Device Name': 'HQ-R2',

'Jump Host': '10.20.5.5',

'Manufacturer': 'Cisco',

'Mgmt IP': '192.168.10.3',

'Model': 'ISR 4431',

'Network Domain': 'Access',

'Serial Number': 'KRG557862',

'Site Name': 'Headquarters',

'State': 'NC',

'Support': 'HQ IT 704-123-4444',

'Zip': '28202'}]