
These days, most organizations lean heavily on YAML and JSON to store and organize all sorts of data: defining variables, providing input for generating device configurations, defining inventory, and many other use cases. Both formats are popular because they are flexible and easy to use. Users with little to no experience working with structured data, as well as very experienced programmers, can pick them up quickly because neither format requires a schema to be defined before data can be written.

As the use of structured data increases, that flexibility creates complexity and risk, precisely because these languages don’t require data to adhere to a schema. If users accidentally define the data for ntp_servers in two different structures (e.g. one as a list and one as a dictionary), automation tooling must be written to handle the differences in inputs in some way. Oftentimes, the automation tooling just bombs out with a cryptic message in such cases. This is because the tool consuming the data rightfully expects to have a contract with it: the data will adhere to a clearly defined form, and thus the tool can interact with the data in a standard way. It is for this reason that APIs, when updated, should never change the format in which they provide data unless there is some way to delineate the new format (e.g. an API version increment). By ensuring data is defined in a standard way, complexity and risk can be mitigated.
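To make the failure mode concrete, here is a minimal Python sketch. The function name render_ntp_lines and the sample data are hypothetical, invented purely for illustration; the point is only that code written against one shape of ntp_servers breaks on the other.

```python
def render_ntp_lines(host_vars):
    """Build config lines, assuming ntp_servers is a list of dictionaries."""
    return [f"ntp server {server['address']}" for server in host_vars["ntp_servers"]]


# The shape the tool was written for: a list of dictionaries.
as_list = {"ntp_servers": [{"address": "192.2.0.1"}, {"address": "192.2.0.2"}]}

# The shape another user chose for the same data: a dictionary keyed by name.
as_dict = {"ntp_servers": {"primary": {"address": "192.2.0.1"}}}

print(render_ntp_lines(as_list))

try:
    render_ntp_lines(as_dict)
except TypeError as error:
    # Iterating a dictionary yields its keys (strings), so server['address'] fails.
    print(f"cryptic failure: {error}")
```

Nothing in the error points at the real cause, which is that the data was defined in an unexpected structure.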

With structured data languages like YAML and JSON, which do not inherently define a schema (contract) for the data they hold, a schema definition language can be used to provide this contract, thereby mitigating complexity and risk. Schema definition languages come with their own maintenance cost, though, because the burden of writing the logic that checks structured data against a schema falls on the user. The user doesn’t just need to maintain structured data and schemas; they also have to build and maintain the tooling that checks whether data is schema valid. To allow users to simply write schemas and structured data and worry less about writing and maintaining the code that bolts them together, Network to Code has developed a tool called Schema Enforcer. Today we are happy to announce that we are making Schema Enforcer available to the community.

Check it out on GitHub!

What is Schema Enforcer?

Schema Enforcer is a framework that allows users to define schemas for their structured data and assert that the data adheres to those schemas. This structured data can (currently) come in the form of a data file in JSON or YAML format, or an Ansible inventory. The schema definition is written in the JSONSchema language, in YAML or JSON format.

Why use Schema Enforcer?

If you’re familiar with JSONSchema already, you may be thinking “wait, doesn’t JSONSchema do all of this?”. JSONSchema does allow you to validate that structured data adheres to a schema definition, but it requires you to write your own code to load the data and check it against the defined schema. Schema Enforcer is meant to provide a wrapper which makes it easy for users to manage structured data without needing to write their own code to check it for adherence to a schema. It provides the following advantages over just using JSONSchema:

- Provides a framework for mapping data files to the schema definitions against which they should be checked for adherence

- Provides a framework for validating that Ansible inventory adheres to a schema definition or multiple schema definitions

- Prints clear log messages indicating each data object examined which is not adherent to schema, and the specific way in which these data objects are not adherent

- Allows a user to define unit tests asserting that their schema definitions are written correctly (e.g. that non-adherent data fails validation in a specific way, and adherent data passes validation)

- Exits with an exit code of 1 in the event that data is not adherent to schema. This makes it fit for use in a CI pipeline alongside linters and unit tests

An Example

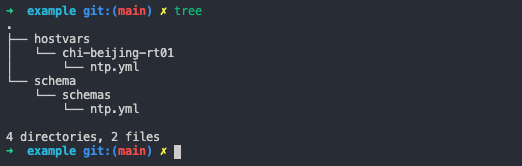

I’ve created the following directories and files in a repository called new_example.

The directory includes:

- structured data (in YAML format) defining NTP servers for the host chi-beijing-rt01 inside of the file at hostvars/chi-beijing-rt01/ntp.yml

- a schema definition inside of the file at schema/schemas/ntp.yml

If we examine the file at hostvars/chi-beijing-rt01/ntp.yml we can see the following data defined in YAML format.

    # jsonschema: schemas/ntp
    ---
    ntp_servers:
      - address: 192.2.0.1
      - address: 192.2.0.2

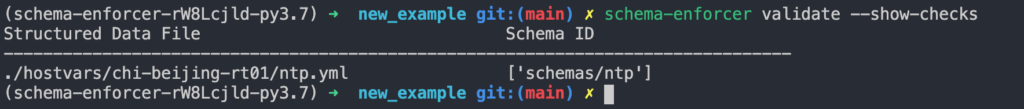

Note the comment # jsonschema: schemas/ntp at the top of the YAML file. This comment declares the schema that the data in this file should be checked against, as well as the language used to define the schema (JSONSchema here). Multiple schemas can be declared by comma separating them in the comment. For instance, the comment # jsonschema: schemas/ntp,schemas/syslog would declare that the data should be checked for adherence to two schemas, one with the ID schemas/ntp and another with the ID schemas/syslog. We can validate that this mapping is being inferred correctly by running the command schema-enforcer validate --show-checks.

The --show-checks flag shows each data file along with a list of every schema ID it will be checked for adherence to.

Other mechanisms exist for mapping data files to the schemas against which they should be validated. See docs/mapping_schemas.md in the Schema Enforcer git repository for more details.

YAML supports comments, introduced with an octothorpe (#). JSON does not support comments. For this reason, only data defined in YAML format can declare the schema to which it should adhere with a comment. Another mechanism for mapping needs to be used if your data is defined in JSON format.
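The comment-based mapping described above can be pictured with a short Python sketch. This is not Schema Enforcer's own implementation; the regular expression and the function name declared_schema_ids are assumptions made for illustration, and only the comment syntax itself comes from the examples in this post.

```python
import re

# Matches a comment line such as "# jsonschema: schemas/ntp,schemas/syslog".
SCHEMA_COMMENT = re.compile(r"^#\s*jsonschema:\s*(?P<ids>.+?)\s*$", re.MULTILINE)


def declared_schema_ids(yaml_text):
    """Return the schema IDs declared in a '# jsonschema:' comment, if any."""
    match = SCHEMA_COMMENT.search(yaml_text)
    if match is None:
        return []
    # Split the comma separated list and drop surrounding whitespace.
    return [part.strip() for part in match.group("ids").split(",") if part.strip()]


example = "# jsonschema: schemas/ntp,schemas/syslog\n---\nntp_servers:\n  - address: 192.2.0.1\n"
print(declared_schema_ids(example))
```

A file without such a comment yields an empty list, which is the case where another mapping mechanism would be needed.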

If we examine the file at schema/schemas/ntp.yml we can see the following schema definition. This is written in the JSONSchema language and formatted in YAML.

    ---
    $schema: "http://json-schema.org/draft-07/schema#"
    $id: "schemas/ntp"
    description: "NTP Configuration schema."
    type: "object"
    properties:
      ntp_servers:
        type: "array"
        items:
          type: "object"
          properties:
            name:
              type: "string"
            address:
              type: "string"
              format: "ipv4"
            vrf:
              type: "string"
          required:
            - "address"
        uniqueItems: true
    additionalProperties: false
    required:
      - "ntp_servers"

The schema definition above is used to ensure that:

- The top level of the data file is of type hash/dictionary (object in JSONSchema parlance)

- No top level keys can be defined in the data file besides ntp_servers, which is required

- The value of ntp_servers is of type array/list

- Each item in this array must be unique

- Each element of this array/list is a dictionary with the possible keys name, address and vrf

  - Of these keys, address is required; name and vrf can optionally be defined, but it is not necessary to define them

  - address must be of type “string” and it must be a valid IPv4 address

  - name must be of type “string” if it is defined

  - vrf must be of type “string” if it is defined

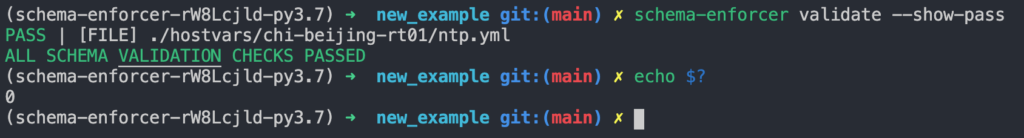

Here is an example of the structured data being checked for adherence to the schema definition.

We can see that when schema-enforcer runs, it shows that all files containing structured data are schema valid. Also note that Schema Enforcer exits with a code of 0.

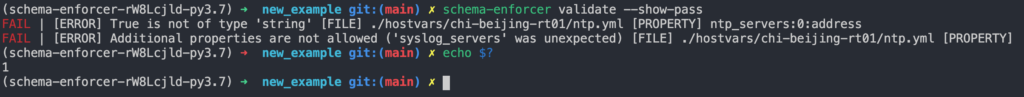

What happens if we modify the data such that the first NTP server defined has an address of the boolean true, and add a syslog_servers key at the top level of the YAML file?

    # jsonschema: schemas/ntp
    ---
    ntp_servers:
      - address: true
      - address: 192.2.0.2
    syslog_servers:
      - address: 192.0.5.3

We can see that two errors are flagged. The first informs us that the address of the first element in the ntp_servers array is a boolean where a string was expected. The second informs us that the top level property syslog_servers is not specified in the properties defined by the schema, and that additional properties are not allowed per the schema definition. Note that schema-enforcer exits with a code of 1, indicating a failure. If Schema Enforcer were run as part of a pipeline before structured data is ingested into automation tools, the automation tools would never consume the malformed data.

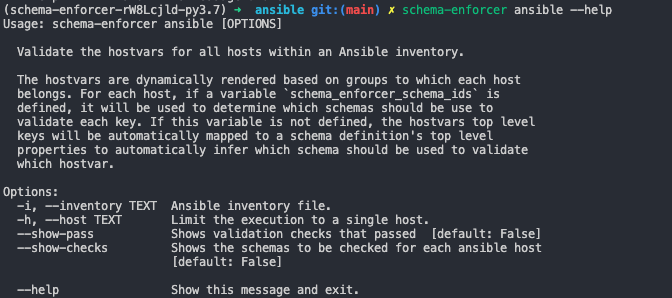
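For readers who want to see what the NTP schema asserts in ordinary code, here is a hand-written Python sketch of the same rules using only the standard library. It is an illustration, not how Schema Enforcer works internally (Schema Enforcer relies on JSONSchema); the function names check_ntp_data and is_ipv4 and the wording of the messages are invented for this example.

```python
import ipaddress


def is_ipv4(text):
    """Return True when text parses as an IPv4 address."""
    try:
        ipaddress.IPv4Address(text)
    except ValueError:
        return False
    return True


def check_ntp_data(data):
    """Return a list of problems found; an empty list means the data passes."""
    if not isinstance(data, dict):
        return ["top level must be a dictionary"]
    # additionalProperties: false at the top level.
    errors = [f"additional top level key not allowed: {key}"
              for key in sorted(data) if key != "ntp_servers"]
    if "ntp_servers" not in data:
        return errors + ["ntp_servers is required"]
    servers = data["ntp_servers"]
    if not isinstance(servers, list):
        return errors + ["ntp_servers must be a list"]
    for index, server in enumerate(servers):
        where = f"ntp_servers[{index}]"
        if not isinstance(server, dict):
            errors.append(f"{where} must be a dictionary")
            continue
        if server in servers[:index]:  # uniqueItems: true
            errors.append(f"{where} duplicates an earlier item")
        if "address" not in server:
            errors.append(f"{where} is missing the required key address")
        for key in ("name", "address", "vrf"):
            if key in server and not isinstance(server[key], str):
                errors.append(f"{where}.{key} must be a string")
        address = server.get("address")
        if isinstance(address, str) and not is_ipv4(address):
            errors.append(f"{where}.address must be a valid IPv4 address")
    return errors


valid = {"ntp_servers": [{"address": "192.2.0.1"}, {"address": "192.2.0.2"}]}
invalid = {
    "ntp_servers": [{"address": True}, {"address": "192.2.0.2"}],
    "syslog_servers": [{"address": "192.0.5.3"}],
}

print(check_ntp_data(valid))
for problem in check_ntp_data(invalid):
    print(problem)
```

The modified data produces two problems, one for the boolean address and one for the extra syslog_servers key, mirroring the two errors described above. Maintaining this kind of code by hand for every schema is exactly the burden a schema definition language removes.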

Validating Ansible Inventory

Schema Enforcer supports validating that variables defined in an Ansible inventory adhere to a schema definition (or multiple schema definitions).

To do this, Schema Enforcer first constructs a dictionary containing key/value pairs for each attribute defined in the inventory. It does this by flattening the variables from the groups the host is a part of. After doing this, schema-enforcer determines which schemas it should use to validate the host’s variables in one of two ways:

- By using a list of schema IDs defined by the schema_enforcer_schema_ids attribute (defined at the host or group level).

- By automatically mapping a schema’s top level properties to the Ansible host’s keys.

That may have been gibberish on first pass, but the examples in the following sections will hopefully make things clearer.
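Before the worked example, the flattening step can be pictured roughly as follows. This Python sketch is a deliberate simplification and an assumption on my part: the function name flatten_host_vars and the sample data are hypothetical, and real Ansible variable precedence has more layers than a simple ordered merge.

```python
def flatten_host_vars(group_chain, group_vars, host_vars=None):
    """Merge variables group by group (parent first), then apply host-level variables."""
    merged = {}
    for group in group_chain:
        # Later groups override keys set by earlier ones.
        merged.update(group_vars.get(group, {}))
    merged.update(host_vars or {})
    return merged


# Hypothetical variables for a parent group and a child group.
group_vars = {
    "nyc": {"dns_servers": [{"address": "10.1.1.1"}], "site": "nyc"},
    "spine": {"dns_servers": [{"address": "10.2.2.2"}]},
}

print(flatten_host_vars(["nyc", "spine"], group_vars, {"hostname": "spine1"}))
```

The resulting single dictionary per host is what gets checked against the mapped schemas.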

An Example of Validating Ansible Variables

In the following example, we have an inventory file which defines three groups, nyc, spine, and leaf. spine and leaf are children of nyc.

    [nyc:children]
    spine
    leaf

    [spine]
    spine1
    spine2

    [leaf]
    leaf1
    leaf2

The group vars file for the spine group, spine.yaml, defines two top level data keys: dns_servers and interfaces.

    bash$ cat group_vars/spine.yaml
    ---
    dns_servers:
      - address: true
      - address: "10.2.2.2"
    interfaces:
      swp1:
        role: "uplink"
      swp2:
        role: "uplink"

    schema_enforcer_schema_ids:
      - "schemas/dns_servers"
      - "schemas/interfaces"

Note the schema_enforcer_schema_ids variable. This declaratively tells Schema Enforcer which schemas to use when running tests to ensure that the Ansible host vars for every host in the spine group are schema valid.

Here is the interfaces schema which is declared above:

    bash$ cat schema/schemas/interfaces.yml
    ---
    $schema: "http://json-schema.org/draft-07/schema#"
    $id: "schemas/interfaces"
    description: "Interfaces configuration schema."
    type: "object"
    properties:
      interfaces:
        type: "object"
        patternProperties:
          ^swp.*$:
            properties:
              type:
                type: "string"
              description:
                type: "string"
              role:
                type: "string"

Note that the $id property is what is being declared by the schema_enforcer_schema_ids variable.

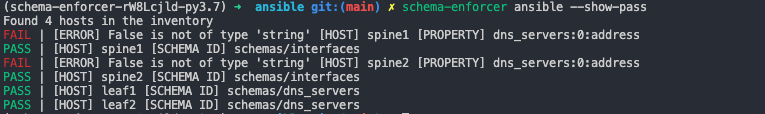

When we run the schema-enforcer ansible command with the --show-pass flag, we can see that the dns_servers attribute defined for spine1 and spine2 did not adhere to schema.

By default, schema-enforcer prints a “FAIL” message to stdout for each object in the data file which does not adhere to schema. If no objects fail to adhere to schema definitions, a single line is printed indicating that all data files are schema valid. The --show-pass flag modifies this behavior such that, in addition to the default behavior, a line is printed to stdout for every file that is schema valid, indicating it passed the schema adherence check.

Looking at the group_vars/spine.yaml file above, we can see why the check failed: the first DNS server in the dns_servers list has an address of the boolean true.

    bash$ cat schema/schemas/dns.yml
    ---
    $schema: "http://json-schema.org/draft-07/schema#"
    $id: "schemas/dns_servers"
    description: "DNS Server Configuration schema."
    type: "object"
    properties:
      dns_servers:
        type: "array"
        items:
          type: "object"
          properties:
            name:
              type: "string"
            address:
              type: "string"
              format: "ipv4"
            vrf:
              type: "string"
          required:
            - "address"
        uniqueItems: true
    required:
      - "dns_servers"

Looking at the schema for DNS servers, we see that each DNS server’s address field must be of type string and format ipv4 (i.e. an IPv4 address). Because the first element in the list of DNS servers has an address of the boolean true, it is not schema valid.

Another Example of Validating Ansible Vars

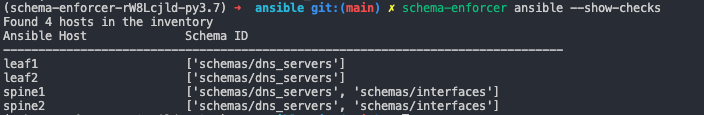

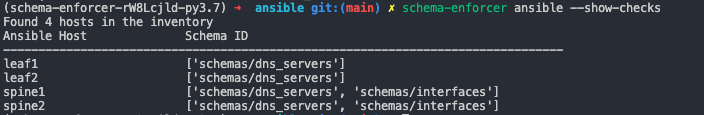

Similar to the way that schema-enforcer validate --show-checks can be used to show which data files will be checked by which schema definitions, the schema-enforcer ansible --show-checks command can be used to show which Ansible hosts will be checked for adherence to which schema IDs.

From the execution of the command, we can see that 4 hosts were loaded from inventory. This is just what we expect from our earlier examination of the .ini file which defines the Ansible inventory. We just saw how spine1 and spine2 were checked for adherence to both the schemas/dns_servers and schemas/interfaces schema definitions, and how the schema_enforcer_schema_ids var was configured to declare that devices belonging to the spine group should adhere to those schemas. Let’s now examine the leaf group a little more closely.

    bash$ cat ansible/group_vars/leaf.yml
    ---
    dns_servers:
      - address: "10.1.1.1"
      - address: "10.2.2.2"

In the leaf.yml file, no schema_enforcer_schema_ids var is configured. There is also no individual data defined at the host level for leaf1 and leaf2 which belong to the leaf group. This brings up the question, how does schema-enforcer know to check the leaf switches for adherence to the schemas/dns_servers schema definition?

The default behavior of schema-enforcer is to map the top level property in a schema definition to vars associated with each Ansible host that have the same name.

    bash$ cat schema/schemas/dns.yml
    ---
    $schema: "http://json-schema.org/draft-07/schema#"
    $id: "schemas/dns_servers"
    description: "DNS Server Configuration schema."
    type: "object"
    properties:
      dns_servers:
        type: "array"
        items:
          type: "object"
          properties:
            name:
              type: "string"
            address:
              type: "string"
              format: "ipv4"
            vrf:
              type: "string"
          required:
            - "address"
        uniqueItems: true
    required:
      - "dns_servers"

Because the property defined in the schema definition above is dns_servers, the matching Ansible host var dns_servers will be checked for adherence against it.

In fact, if we make the following changes to the leaf group var definition and then run schema-enforcer ansible --show-checks, we can see that devices belonging to the leaf group are now slated to be checked for adherence to both the schemas/dns_servers and schemas/interfaces schema definitions.

    bash$ cat ansible/group_vars/leaf.yml
    ---
    dns_servers:
      - address: "10.1.1.1"
      - address: "10.2.2.2"
    interfaces:
      swp01:
        role: uplink
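The two mapping strategies can be sketched in Python as follows. This is a rough model under stated assumptions rather than Schema Enforcer's actual code: the function name schema_ids_for_host is hypothetical, the schemas are reduced to their top level properties, and the sketch assumes an explicit schema_enforcer_schema_ids list takes the place of automatic mapping when it is present.

```python
def schema_ids_for_host(host_vars, schemas):
    """Pick schema IDs for a host: explicit list if declared, else match on top level keys."""
    declared = host_vars.get("schema_enforcer_schema_ids")
    if declared is not None:
        return list(declared)
    # Automatic mapping: a schema applies when one of its top level
    # properties has the same name as one of the host's variables.
    return sorted(
        schema_id
        for schema_id, schema in schemas.items()
        if set(schema.get("properties", {})) & set(host_vars)
    )


# Schemas from this post, trimmed down to their top level properties.
SCHEMAS = {
    "schemas/dns_servers": {"properties": {"dns_servers": {"type": "array"}}},
    "schemas/interfaces": {"properties": {"interfaces": {"type": "object"}}},
}

leaf_before = {"dns_servers": [{"address": "10.1.1.1"}, {"address": "10.2.2.2"}]}
leaf_after = dict(leaf_before, interfaces={"swp01": {"role": "uplink"}})

print(schema_ids_for_host(leaf_before, SCHEMAS))
print(schema_ids_for_host(leaf_after, SCHEMAS))
```

With only dns_servers defined, the leaf hosts map to schemas/dns_servers; once interfaces is added, they map to both schemas, which matches the behavior described above.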

Using Schema Enforcer

O.K. so you’ve defined schemas for your data, now what? Here are a couple of use cases for Schema Enforcer we’ve found to be “juice worth the squeeze.”

1) Use Schema Enforcer in your CI system to validate defined structured data before merging code. Virtually all git hosting platforms (GitHub, GitLab, etc.) can be configured with tests which must pass before code can be merged from a feature branch into the code base. Schema Enforcer can be turned on alongside your other tests (unit tests, linters, etc.). If your data is not schema valid, the exact reason why will be printed to the output of the CI system when the tool is run, and the tool will exit with a code of 1, causing the CI system to register a failure. When the CI system sees a failure, it will not allow the merge of data which is not adherent to schema.

2) Use it in a pipeline. Say you have YAML structured data which defines the configuration for network devices. You can run Schema Enforcer as part of a pipeline, before automation tooling (Ansible, Python, etc.) consumes this data in order to render configurations for devices. If the data isn’t schema valid, the pipeline will fail before rendering configurations and pushing them to devices, rather than exploding with a stack trace that takes you 30 minutes and lots of googling to troubleshoot.

3) Run it after your tooling generates structured data and prints it to a file. In this case, Schema Enforcer can act as a sanity check to ensure that your tooling is dumping correctly structured output.
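The CI and pipeline use cases both rest on the exit code. A minimal Python sketch of such a gate is shown below; the function name run_if_valid is hypothetical, and the stand-in commands only imitate a validator exiting with 1 or 0 so the sketch runs without Schema Enforcer installed.

```python
import subprocess
import sys

# Stand-in commands that imitate a validator failing (exit 1) or passing (exit 0).
FAILING = [sys.executable, "-c", "raise SystemExit(1)"]
PASSING = [sys.executable, "-c", "raise SystemExit(0)"]


def run_if_valid(validate_command, next_step):
    """Run next_step only when validate_command exits with code 0."""
    result = subprocess.run(validate_command, check=False)
    if result.returncode != 0:
        # Propagate the failure so the surrounding pipeline stops here.
        return result.returncode
    next_step()
    return 0


rendered = []
print(run_if_valid(FAILING, lambda: rendered.append("configs rendered")), rendered)
print(run_if_valid(PASSING, lambda: rendered.append("configs rendered")), rendered)
```

In a real pipeline the command would be the validator itself, for example the schema-enforcer validate command shown earlier, and next_step would be whatever renders and pushes configurations.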

Where Are We Going Next

We plan to add support for the following features to Schema Enforcer in the future:

- Validation of Nornir inventory attributes

- Business logic validation

Conclusion

Have a use case for Schema Enforcer? Try it out and let us know what you think! Do you want the ability to write schema definitions in YANG or have another cool idea? We are iterating on the tool and we are open to feedback!

-Phillip Simonds
