
With many people being asked to work from home, we have heard from several customers who are looking to enhance the visibility and monitoring of their VPN infrastructure. In this post I will show you how you can quickly collect information from your Cisco ASA firewall using Netmiko and NTC-Templates (TextFSM), combined with Telegraf, Prometheus, and Grafana. The approach also works on other network devices, not just Cisco ASAs. Given the recent demand for ASA information, we will use the ASA as the example.

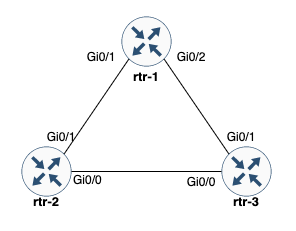

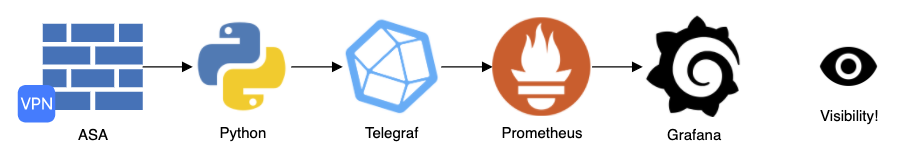

Here is what the data flow will look like:

- Users connect to the ASA for remote access VPN services.

- Python: Collects information from the device via the CLI and gets structured data by using a new template to parse the CLI output. The results are printed to stdout in the InfluxDB line protocol format.

- Telegraf: A generic collector with many plugins for ingesting data and sending it to many different databases.

  - INPUT: Executes the Python script every 60 seconds and reads the results from stdout.

  - OUTPUT: Exposes the data over HTTP in a format compatible with Prometheus.

- Prometheus: Time Series DataBase (TSDB). Collects the data from Telegraf over HTTP, stores it, and exposes an API to query the data.

- Grafana: Builds dashboards and natively supports Prometheus as a data source for queries.

As an alternative to this Python script, you could use the Telegraf SNMP input plugin. An SNMP query would be quicker than SSH if you only need basic counts. This post shows that you can get custom metrics into a monitoring solution without relying only on SNMP.

Execution of Python

If you run the Python script directly, outside of Telegraf, this is what you would see:

```
$ python3 asa_anyconnect_to_telegraf.py --host 10.250.0.63
asa connected_users=1i,anyconnect_licenses=2i
```
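Each output line has three parts: a measurement name (asa), a space, and a comma-separated field set. The trailing i on each value marks it as an integer.

The parser below is a hypothetical helper (parse_line_protocol is not part of the script) for sanity-checking the script's output in a test. It handles only that simple shape: no tags, no timestamps, and no escaped characters.

```python
from typing import Dict, Tuple


def parse_line_protocol(line: str) -> Tuple[str, Dict[str, int]]:
    """Split a tag-less, timestamp-less line-protocol line into measurement and integer fields."""
    measurement, field_set = line.strip().split(" ", 1)
    fields = {}
    for pair in field_set.split(","):
        key, raw_value = pair.split("=", 1)
        # A trailing "i" marks an integer field in InfluxDB line protocol.
        if not raw_value.endswith("i"):
            raise ValueError(f"expected an integer field, got {raw_value!r}")
        fields[key] = int(raw_value[:-1])
    return measurement, fields
```

For example, parse_line_protocol("asa connected_users=1i,anyconnect_licenses=2i") returns the measurement "asa" and the fields connected_users=1 and anyconnect_licenses=2.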

Telegraf then transforms this data into output that Prometheus can use. It is possible to remove Telegraf and have Python expose the Prometheus metrics directly, but we wanted to keep the Python script as simple as possible. If you want to take that route, check out the prometheus_client library's GitHub page.
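The sketch below shows what "Python creating the Prometheus metrics" could look like. It uses only the standard library, not prometheus_client. It is a hand-rolled illustration of the Prometheus text exposition format, not that library's API.

The names render_prometheus, MetricsHandler, collect, and serve are all hypothetical. The collect hook is a placeholder you would point at your own ASA collection code. Values are exposed as gauges because session counts go up and down.

```python
from http.server import BaseHTTPRequestHandler, HTTPServer


def render_prometheus(data, prefix="asa", labels=None):
    """Render {field: value} as Prometheus text-format gauges named <prefix>_<field>.

    Label values are not escaped, so keep them free of quotes, backslashes, and newlines.
    """
    labels = labels or {}
    label_str = ",".join(f'{key}="{value}"' for key, value in sorted(labels.items()))
    lines = []
    for field, value in data.items():
        name = f"{prefix}_{field}"
        lines.append(f"# TYPE {name} gauge")
        lines.append(f"{name}{{{label_str}}} {value}" if label_str else f"{name} {value}")
    return "\n".join(lines) + "\n"


class MetricsHandler(BaseHTTPRequestHandler):
    """Serve /metrics from a callable that returns the current data dict."""

    # Hypothetical hook: replace with a function that logs into the ASA and returns
    # {"connected_users": ..., "anyconnect_licenses": ...}.
    collect = staticmethod(lambda: {"connected_users": 0, "anyconnect_licenses": 0})

    def do_GET(self):
        if self.path != "/metrics":
            self.send_error(404)
            return
        body = render_prometheus(self.collect()).encode("utf-8")
        self.send_response(200)
        self.send_header("Content-Type", "text/plain; version=0.0.4")
        self.send_header("Content-Length", str(len(body)))
        self.end_headers()
        self.wfile.write(body)


def serve(port=9222):
    """Block forever, serving metrics on the given port."""
    HTTPServer(("", port), MetricsHandler).serve_forever()
```

Calling serve() would expose the metrics at http://localhost:9222/metrics. Note one design consequence: this handler collects on every scrape, so every Prometheus scrape would open an SSH session to the ASA. Decoupling collection from scraping is one reason to keep Telegraf's exec input in the middle.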

Python Script

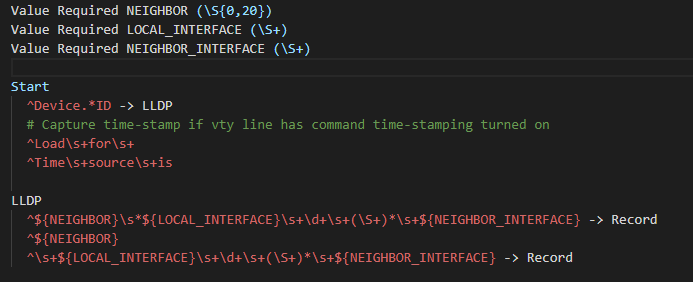

In this post we have the following components being used:

- Python:

  - Netmiko to SSH into an ASA, gather command output, and leverage the corresponding NTC Template

  - NTC Templates: TextFSM templates for parsing raw text output into structured data

- Telegraf: Takes the output of the Python script as an input and translates it to Prometheus metrics as an output

Python Requirements

The Python script below requires the following to be set up beforehand:

- Environment variables for authentication to the ASA:

  - ASA_USER: Username to log into the ASA

  - ASA_PASSWORD: Password to log into the ASA

  - ASA_SECRET (Optional): Enable password for the ASA; if left undefined, the ASA_PASSWORD value is used

- Required Python packages:

  - Netmiko: For SSH and parsing

  - Click: For argument handling, to get the hostname/IP address of the ASA

- The NTC-Templates GitHub repository, set up in one of two ways:

  - Cloned to the user's home directory: cd ~ and then git clone https://github.com/networktocode/ntc-templates.git

  - The NET_TEXTFSM environment variable set to the templates directory: NET_TEXTFSM=/path/to/ntc-templates/templates/

The specific template used is the newer template for cisco asa show vpn-sessiondb anyconnect, introduced March 18, 2020.
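Before running the script, you may want to confirm the setup listed above. The sketch below mirrors those two template options. It checks NET_TEXTFSM first, then the ~/ntc-templates/templates clone, and it also lists any missing credential variables.

resolve_template_dir and missing_credentials are hypothetical helpers. The lookup order is our assumption based on the two options above. Newer Netmiko releases may also find templates through an installed ntc_templates package, which this check does not cover.

```python
import os
from pathlib import Path


def resolve_template_dir():
    """Return the NTC-Templates directory from NET_TEXTFSM or ~/ntc-templates/templates, else None."""
    env_dir = os.environ.get("NET_TEXTFSM")
    if env_dir:
        return Path(env_dir).expanduser()
    home_clone = Path.home() / "ntc-templates" / "templates"
    return home_clone if home_clone.is_dir() else None


def missing_credentials():
    """List required ASA credential variables that are unset or empty (ASA_SECRET is optional)."""
    return [name for name in ("ASA_USER", "ASA_PASSWORD") if not os.environ.get(name)]
```

Printing resolve_template_dir() and missing_credentials() from a shell with your environment loaded is a quick way to catch a missing variable before Telegraf starts calling the script.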

Python Code

There are two helper functions used in this quick script, print_influx_metrics and get_anyconnect_license_count, both called from main:

```python
"""
(c) 2020 Network to Code

Licensed under the Apache License, Version 2.0 (the "License").
You may not use this file except in compliance with the License.
You may obtain a copy of the License at

http://www.apache.org/licenses/LICENSE-2.0

Unless required by applicable law or agreed to in writing, software
distributed under the License is distributed on an "AS IS" BASIS,
WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.
See the License for the specific language governing permissions and
limitations under the License.

Python application to gather metrics from Cisco ASA firewall and export them as a metric for Telegraf
"""
from itertools import count
import os
import sys
import re

import click
from netmiko import ConnectHandler


def print_influx_metrics(data):
    """
    The print_influx_metrics function takes the data collected in a dictionary format and prints out
    each of the necessary components on a single line, which matches the Influx data format.

    Args:
        data (dictionary): Dictionary of the results to print out for influx
    """
    data_string = ""
    cnt = count()
    for measure, value in data.items():
        # Separate fields with commas, without a leading comma before the first field
        if next(cnt) > 0:
            data_string += ","
        # The trailing "i" marks the value as an integer in Influx line protocol
        data_string += f"{measure}={value}i"

    print(f"asa {data_string}")

    return True


def get_anyconnect_license_count(version_output):
    """
    Searches through the `show version` output to find all instances of the license and gets the
    output into integers to get a license count.

    Since there could be multiple ASAs in a cluster or HA pair, it is necessary to gather multiple data
    points for the license count that the ASAs are licensed for. This function uses regex to find all of
    the instances and returns the total count based on the `show version` command output.

    Args:
        version_output (String): Output from Cisco ASA `show version`
    """
    pattern = r"AnyConnect\s+Premium\s+Peers\s+:\s+(\d+)"
    re_list = re.findall(pattern, version_output)

    total_licenses = 0
    for license_count in re_list:
        total_licenses += int(license_count)

    return total_licenses


# Add parsers for output of data types
@click.command()
@click.option("--host", required=True, help="Required - Host to connect to")
def main(host):
    """
    Main code execution
    """
    # Get ASA connection Information
    try:
        username = os.environ["ASA_USER"]
        password = os.environ["ASA_PASSWORD"]
        # Fall back to the login password when no separate enable secret is set
        secret = os.getenv("ASA_SECRET", password)
    except KeyError:
        print("Unable to find Username or Password in environment variables")
        print("Please verify that ASA_USER and ASA_PASSWORD are set")
        sys.exit(1)

    # Setup connection information and connect to host
    cisco_asa_device = {
        "host": host,
        "username": username,
        "password": password,
        "secret": secret,
        "device_type": "cisco_asa",
    }
    net_conn = ConnectHandler(**cisco_asa_device)

    # Get command output for data collection (parsed by the NTC TextFSM template)
    command = "show vpn-sessiondb anyconnect"
    command_output = net_conn.send_command(command, use_textfsm=True)

    # Check for no connected users (nothing to parse, so the raw string comes back)
    if "INFO: There are presently no active sessions" in command_output:
        command_output = []

    # Get output of "show version"
    version_output = net_conn.send_command("show version")

    # Close the SSH session now that all command output has been collected
    net_conn.disconnect()

    # Set data variable for output to Influx format
    data = {"connected_users": len(command_output), "anyconnect_licenses": get_anyconnect_license_count(version_output)}

    # Print out the metrics to standard out to be picked up by Telegraf
    print_influx_metrics(data)


if __name__ == "__main__":
    main()
```
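The no-sessions check in main relies on a particular Netmiko behavior. In the versions we are aware of, send_command(..., use_textfsm=True) returns a list of dicts when the template matches, and falls back to the raw string when TextFSM parses nothing. If the output is unparsed for some other reason, len(command_output) would count characters rather than users.

count_sessions is a hypothetical, slightly more defensive replacement for that check. It fails loudly on unexpected raw output.

```python
def count_sessions(command_output):
    """Count AnyConnect sessions from a send_command(..., use_textfsm=True) result.

    A list means TextFSM parsed the sessions; a string means nothing was parsed.
    """
    if isinstance(command_output, list):
        return len(command_output)
    if "There are presently no active sessions" in command_output:
        return 0
    # Unparsed output that is not the "no sessions" message: raise instead of
    # reporting the length of the raw string as a user count.
    raise ValueError("show vpn-sessiondb anyconnect output was not parsed by TextFSM")
```

With this helper, main would build data with "connected_users": count_sessions(command_output), and a parsing problem would surface as a failed exec run in Telegraf rather than a misleading metric.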

Telegraf

Now that the script writes the data to stdout, you need an application to read and transform it. There are other ways to do this, but Telegraf has this functionality built in.

Telegraf will be set up to execute the Python script every minute using the exec input. The prometheus_client output will then expose the results as Prometheus metrics.
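Before handing the script to Telegraf, it can help to run it locally the same way: capture stdout and enforce the same 15 second timeout used in the configuration. This also shows how long one collection takes against your device.

The sketch uses subprocess. It approximates what the exec input does and is not Telegraf's implementation. script_command and run_like_exec_input are hypothetical helpers.

```python
import shlex
import subprocess
import sys


def script_command(host, script="asa_anyconnect_to_telegraf.py"):
    """Build the same command line as the commands entry, using the current interpreter."""
    return f"{shlex.quote(sys.executable)} {shlex.quote(script)} --host {shlex.quote(host)}"


def run_like_exec_input(command, timeout=15.0):
    """Run a command with a timeout and return its non-empty stdout lines.

    Raises subprocess.TimeoutExpired if the command runs past the timeout and
    subprocess.CalledProcessError if it exits non-zero.
    """
    result = subprocess.run(
        shlex.split(command),
        capture_output=True,
        text=True,
        timeout=timeout,
        check=True,
    )
    # Each non-empty line should be one metric in Influx line protocol.
    return [line for line in result.stdout.splitlines() if line.strip()]
```

For example, run_like_exec_input(script_command("10.250.0.63")) should return a single line like the one shown in the Execution of Python section, provided the environment variables are set.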

Telegraf Configuration

The configuration for this example is as follows:

```toml
[agent]
  hostname = "demo"

# Globally set tags that should be set to meaningful tags for searching inside of a TSDB
[global_tags]
  device = "10.250.0.63"
  region = "midwest"

[[inputs.exec]]
  ## Interval is how often the execution should occur, here every 1 min (60 seconds)
  interval = "60s"

  # Commands to be executed in list format
  # To execute against multiple hosts, add multiple entries within the commands
  commands = [
    "python3 asa_anyconnect_to_telegraf.py --host 10.250.0.63"
  ]

  ## Timeout for each command to complete.
  # In lab tests next to the device with local authentication, execution took about 6 seconds
  timeout = "15s"

  ## Measurement name suffix (for separating different commands)
  name_suffix = "_parsed"

  ## Data format to consume.
  ## Each data format has its own unique set of configuration options, read
  ## more about them here:
  ## https://github.com/influxdata/telegraf/blob/master/docs/DATA_FORMATS_INPUT.md
  data_format = "influx"

# Output to Prometheus Metrics format
# Define the listen port for which TCP port the web server will be listening on. Metrics will be
# available at "http://localhost:9222/metrics" in this instance.
# There are two versions of metrics and if `metric_version` is omitted then version 1 is used
[[outputs.prometheus_client]]
  listen = ":9222"
  metric_version = 2
```
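A caution if you add multiple hosts to commands: the device tag in [global_tags] is a single static value, so every host's metrics would be labeled with device="10.250.0.63". One option is to have the script emit its own device tag. In InfluxDB line protocol, tags follow the measurement name, separated by commas, before the space that starts the field set.

format_influx_line is a hypothetical variant of print_influx_metrics that returns such a line. With this approach, you would likely also drop device from [global_tags] so the tag comes from only one place.

```python
def format_influx_line(data, device, measurement="asa"):
    """Build one line-protocol line carrying a per-device tag.

    Spaces, commas, and equals signs in the tag value are not escaped.
    """
    fields = ",".join(f"{name}={value}i" for name, value in data.items())
    return f"{measurement},device={device} {fields}"
```

The script would then print(format_influx_line(data, host)) instead of calling print_influx_metrics(data).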

Telegraf Output Example

Here is what the exposed metrics look like, leaving out the default metrics that Telegraf also exposes:

```
# HELP asa_parsed_anyconnect_licenses Telegraf collected metric
# TYPE asa_parsed_anyconnect_licenses untyped
asa_parsed_anyconnect_licenses{device="10.250.0.63",host="demo",region="midwest"} 2
# HELP asa_parsed_connected_users Telegraf collected metric
# TYPE asa_parsed_connected_users untyped
asa_parsed_connected_users{device="10.250.0.63",host="demo",region="midwest"} 1
```

Two metrics will be scraped: anyconnect_licenses and connected_users. Each Prometheus metric name combines three parts: the measurement name (asa), the name_suffix from the Telegraf configuration (_parsed), and the field name. This firewall has a total of 2 AnyConnect licenses available, with a single user connected. Prometheus can now scrape these metrics to give insight into your ASA AnyConnect environment.
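Before wiring up Prometheus, you can check the Telegraf endpoint directly. The sketch fetches http://localhost:9222/metrics (the listen address from the configuration above) and splits each sample into its name, labels, and value.

parse_exposition and fetch_samples are hypothetical helpers. This is a quick check, not a full Prometheus text-format parser. It skips comment lines and any sample it cannot match, and it does not handle escaped quotes in label values.

```python
import re
import urllib.request

# Matches simple sample lines such as: name{k="v",k2="v2"} 2
SAMPLE_RE = re.compile(r"^(?P<name>[a-zA-Z_:][a-zA-Z0-9_:]*)(?:\{(?P<labels>[^}]*)\})?\s+(?P<value>\S+)$")
LABEL_RE = re.compile(r'(\w+)="([^"]*)"')


def parse_exposition(text):
    """Return (name, labels, value) tuples for each sample line, skipping # HELP / # TYPE lines."""
    samples = []
    for line in text.splitlines():
        line = line.strip()
        if not line or line.startswith("#"):
            continue
        match = SAMPLE_RE.match(line)
        if match is None:
            continue  # e.g. samples with trailing timestamps are not handled here
        labels = dict(LABEL_RE.findall(match.group("labels") or ""))
        samples.append((match.group("name"), labels, float(match.group("value"))))
    return samples


def fetch_samples(url="http://localhost:9222/metrics", timeout=5):
    """Fetch the Telegraf prometheus_client endpoint and parse its samples."""
    with urllib.request.urlopen(url, timeout=timeout) as response:
        return parse_exposition(response.read().decode("utf-8"))
```

Filtering the result of fetch_samples() for names starting with asa_parsed_ should show the same two samples listed above.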

Prometheus Installation

There are several options for installing a Prometheus TSDB (Time Series DataBase), including:

- Precompiled binaries for Windows, Mac, and Linux

- Docker images

- Building from source

For more details on installation options, take a look at the Prometheus GitHub page.

Once Prometheus is up and running, add your Telegraf host (port 9222 in this example) to the scrape configuration. Prometheus will start to scrape the metrics endpoint and store the associated metrics in its TSDB.

You can then navigate to the Prometheus query page at http://<prometheus_host>:9090, where you will be presented with a search bar. This is where you start a query for the metric you wish to graph; for example, start typing asa and Prometheus will offer autocomplete options. Once you have selected what you wish to query, select Execute to see the current value. To see how the query looks over time, select Graph next to the word Console. Grafana uses the same query language to build its graphs.
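The same queries can be run against the Prometheus HTTP API, which serves instant queries at /api/v1/query. The sketch below builds the request URL and pulls the values out of an instant-vector response, keyed by the device label set in Telegraf.

query_url, vector_values, and instant_query are hypothetical helper names. The example PromQL expression, a ratio of connected users to licenses, is our illustration and not from the original setup.

```python
import json
import urllib.parse
import urllib.request


def query_url(base_url, promql):
    """Build an instant-query URL for the Prometheus HTTP API."""
    return f"{base_url.rstrip('/')}/api/v1/query?" + urllib.parse.urlencode({"query": promql})


def vector_values(payload):
    """Map each result's device label to its float value from an instant-vector response."""
    if payload.get("status") != "success":
        raise RuntimeError(f"query failed: {payload.get('error')}")
    return {r["metric"].get("device", ""): float(r["value"][1]) for r in payload["data"]["result"]}


def instant_query(base_url, promql, timeout=5):
    """Run an instant query and return {device: value}."""
    with urllib.request.urlopen(query_url(base_url, promql), timeout=timeout) as response:
        return vector_values(json.load(response))
```

For example, instant_query("http://<prometheus_host>:9090", "asa_parsed_connected_users / asa_parsed_anyconnect_licenses") would return the share of AnyConnect licenses in use per device. Both metrics carry the same labels, so PromQL can match them one-to-one.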

A good video tutorial on getting started with Prometheus queries for network equipment, from NANOG 77, is available on YouTube.

Grafana Installation

Grafana is the dashboarding component of choice in the open source community. Grafana can use several data sources to create graphs, including modern TSDBs such as InfluxDB and Prometheus. With the latest release, Grafana can even use Google Sheets as a data source.

As you get going with Grafana there are pre-built dashboards available for download.

You will want to download Grafana to get started. There are several installation methods available on the Grafana download page, including options for:

- Linux

- Windows

- Mac

- Docker

- ARM (Raspberry Pi)

The Prometheus website has an article that is helpful for getting started with your own Prometheus dashboards.

A video walkthrough is also available on the Network to Code YouTube channel.

In the next post, you will see how to monitor websites and DNS queries, including how to alert using this technology stack.

-Josh
