Automation Principles – Data Normalization

This is part of a series of posts to help establish sound Network Automation Principles.

Providing a common way for different systems to interact is a pervasive idea throughout technology. Within your first days of learning about traditional networking, you will inevitably hear about the OSI model, in which each layer provides an interface to the layers above and below it. The point is that both sides of each interface must agree on it.

The same is true of data, which poses a problem within the network space, as most interfaces to the network are vendor-specific: CLI, API, etc. This is what makes a uniform YANG model based on an open modeling standard, such as OpenConfig or the IETF models, so attractive.

The problem with adoption of such a standard is multifaceted. While cynics believe it is a vendor ploy to maintain lock-in, I think it is a bit more nuanced than that. Without spending too much time on the subject, here are some points to consider.

- Vendor-neutral models are by nature complex, as they should contain the superset of all features

- Complexity makes it more difficult to use the product

- Despite the complexity, vendor-neutral models always seem to lack a feature that is core to any given vendor

- Vendors have to extend models to support “their differentiators” or features, which is complex and subject to future issues

- Data does not always map easily from the vendor’s model to any other model

- All of this makes it complex to actually build out, if these features are not built from within the OS to start with

That’s a huge topic, and this is only a 30,000-foot view of some pros and cons; there is no reason to dive deeper now.

Data Normalization in Computer Science

The construct of agreed-upon interfaces has many names in Computer Science, depending on the context. While not all are related to data specifically, the concept remains the same.

- Interface – as the generic term to define how two systems connect, not to be confused with a “network interface”.

- API (Application Programming Interface) – an agreed-upon interface, which is not always a REST API.

- Contract – a term that reinforces the idea that there is an agreed-upon standard between two systems.

- Signature – the type-enforced definition of a function.

As mentioned, some of these terms are specific to a context, such as signature being more associated with a function, but these are all terms you will hear often that describe the same basic concept.
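To make these terms a little more concrete, here is a minimal Python sketch. All of the names in it (`ArpEntry`, `ArpSource`, `FakeDevice`, `count_entries`) are made up for illustration and do not come from any particular library. The `TypedDict` plays the role of the contract for the data, the `Protocol` is the interface, and the annotated method is the signature.

```python
from typing import Protocol, TypedDict


class ArpEntry(TypedDict):
    """The contract: every entry carries exactly these keys and types."""
    interface: str
    mac: str
    ip: str
    age: float


class ArpSource(Protocol):
    """The interface: anything offering this method can be used interchangeably."""

    def get_arp_table(self) -> list[ArpEntry]:  # the signature
        ...


class FakeDevice:
    """Hypothetical stand-in for a real device connection."""

    def get_arp_table(self) -> list[ArpEntry]:
        return [{"interface": "Ethernet1", "mac": "5C:5E:AB:DA:3C:F0",
                 "ip": "172.17.17.1", "age": 12.0}]


def count_entries(source: ArpSource) -> int:
    # Relies only on the agreed interface, not on the concrete class.
    return len(source.get_arp_table())


print(count_entries(FakeDevice()))
```

The caller never needs to know what kind of object sits behind `ArpSource`, which is the whole point of agreeing on the interface first.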

Data Normalization in NAPALM

NAPALM provides a series of “getters”; these are basically what a Network Engineer would call “show commands”, returned as structured, normalized data. Let’s observe the following example, taken from the get_arp_table docstring.

    Returns a list of dictionaries having the following set of keys:
        * interface (string)
        * mac (string)
        * ip (string)
        * age (float)

    Example::

        [
            {
                'interface' : 'MgmtEth0/RSP0/CPU0/0',
                'mac'       : '5C:5E:AB:DA:3C:F0',
                'ip'        : '172.17.17.1',
                'age'       : 1454496274.84
            },
            {
                'interface' : 'MgmtEth0/RSP0/CPU0/0',
                'mac'       : '5C:5E:AB:DA:3C:FF',
                'ip'        : '172.17.17.2',
                'age'       : 1435641582.49
            }
        ]

What you will observe here is there is no mention of vendor, and there is seemingly nothing unique about this data to tie it to any single vendor. This allows the developer to make programmatic decisions in a single way, regardless of vendor. The way in which data is normalized is up to the author of the specific NAPALM driver.

    import sys

    from napalm import get_network_driver
    # Fictional inventory module; it returns the OS name and credentials for a host.
    from my_custom_inventory import get_device_details

    network_os, ip, username, password = get_device_details(sys.argv[1])
    driver = get_network_driver(network_os)

    # The same code runs unchanged for any network OS that has a NAPALM driver.
    with driver(ip, username, password) as device:
        arp_table = device.get_arp_table()
        for arp_entry in arp_table:
            if arp_entry['interface'].startswith("TenGigabitEthernet"):
                print(f"Found 10Gb port {arp_entry['interface']}")

From the above snippet, you can see that whichever valid network OS the fictional function get_device_details returns, the process remains the same. The hard work of performing the data normalization still has to happen within the respective NAPALM driver. That may mean connecting to the device, running CLI commands, and then parsing the output; or it could mean making an API call and transposing the data structure from the vendor’s format to what NAPALM expects.
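As a rough illustration of that hard work, the sketch below normalizes two made-up inputs into the same list of dictionaries. This is not how any actual NAPALM driver is written; both vendor formats, the key names such as `arpEntries` and `hwAddr`, and the helper functions are assumptions invented for the example.

```python
def normalize_mac(raw: str) -> str:
    """Convert common MAC notations to upper-case, colon-separated form."""
    digits = "".join(ch for ch in raw if ch.isalnum()).upper()
    return ":".join(digits[i:i + 2] for i in range(0, 12, 2))


def from_vendor_a(payload: dict) -> list[dict]:
    """Hypothetical vendor A: an API call returns camelCase keys."""
    return [
        {
            "interface": row["ifName"],
            "mac": normalize_mac(row["hwAddr"]),
            "ip": row["ipAddr"],
            "age": float(row["ageSec"]),
        }
        for row in payload["arpEntries"]
    ]


def from_vendor_b(cli_output: str) -> list[dict]:
    """Hypothetical vendor B: the CLI prints 'ip age mac interface' per line."""
    entries = []
    for line in cli_output.strip().splitlines():
        ip, age, mac, interface = line.split()
        entries.append({
            "interface": interface,
            "mac": normalize_mac(mac),
            "ip": ip,
            "age": float(age),
        })
    return entries


vendor_a = {"arpEntries": [{"ifName": "Ethernet1", "hwAddr": "5c5e.abda.3cf0",
                            "ipAddr": "172.17.17.1", "ageSec": 30}]}
vendor_b = "172.17.17.1 30 5c-5e-ab-da-3c-f0 Ethernet1"

# Both sources end up as the same normalized structure.
print(from_vendor_a(vendor_a) == from_vendor_b(vendor_b))
```

Each function hides a different kind of effort (key mapping versus text parsing), but the consumer only ever sees the agreed set of keys.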

Configuration Data Normalization

Considerations for building out your own normalized data model:

- Do not follow the vendor’s syntax; this simply pushes the problem along

- Having thousands of configuration “nerd knobs” requires expert-level understanding of a data model that will not likely be as well documented or understood as the vendor’s CLI. The point is to remove complexity, not shift complexity

- Express the business intention, not the vendor configuration

- Abstract the uniqueness of the OS implementation away from intent

- Express the greatest amount of configuration state in the least amount of actual data

Generally speaking, when I am building a data model, I try to build it to be normalized. While not always achievable on day 1 (due to lacking complete understanding of requirements or lacking imagination) the thought process is always there. Even if dealing with a single vendor, the first question I will ask is “would this work for another vendor?”

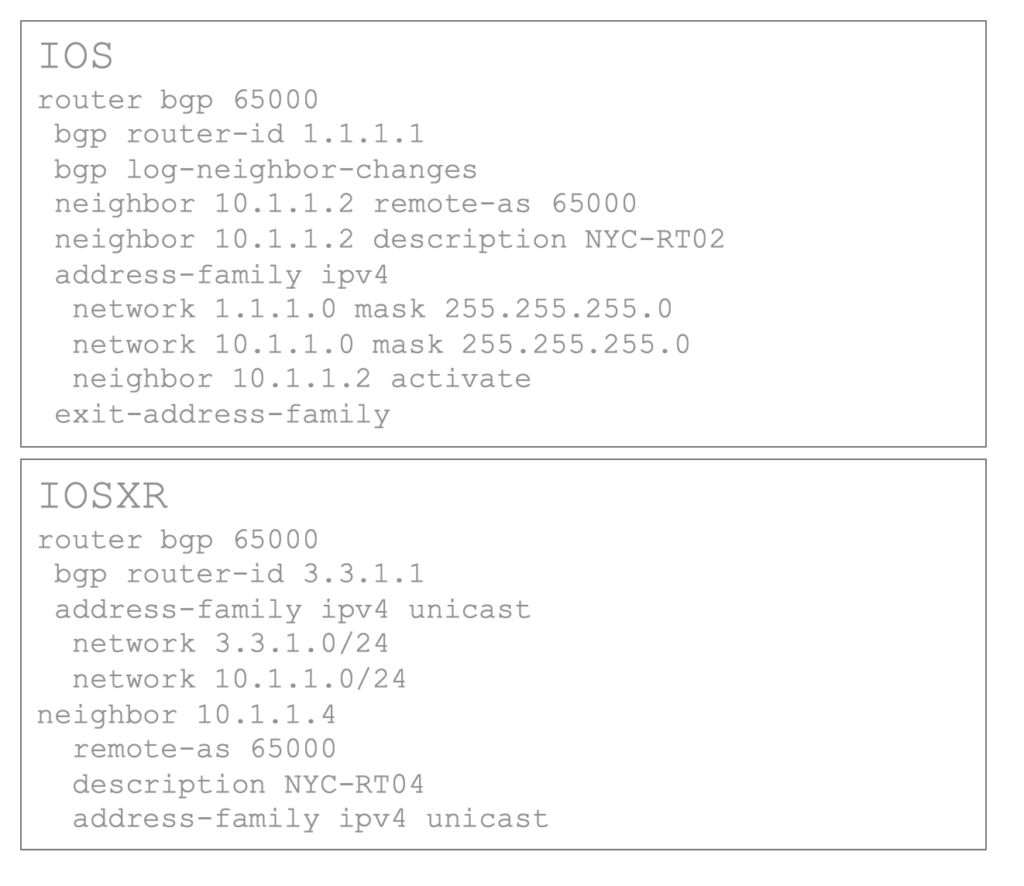

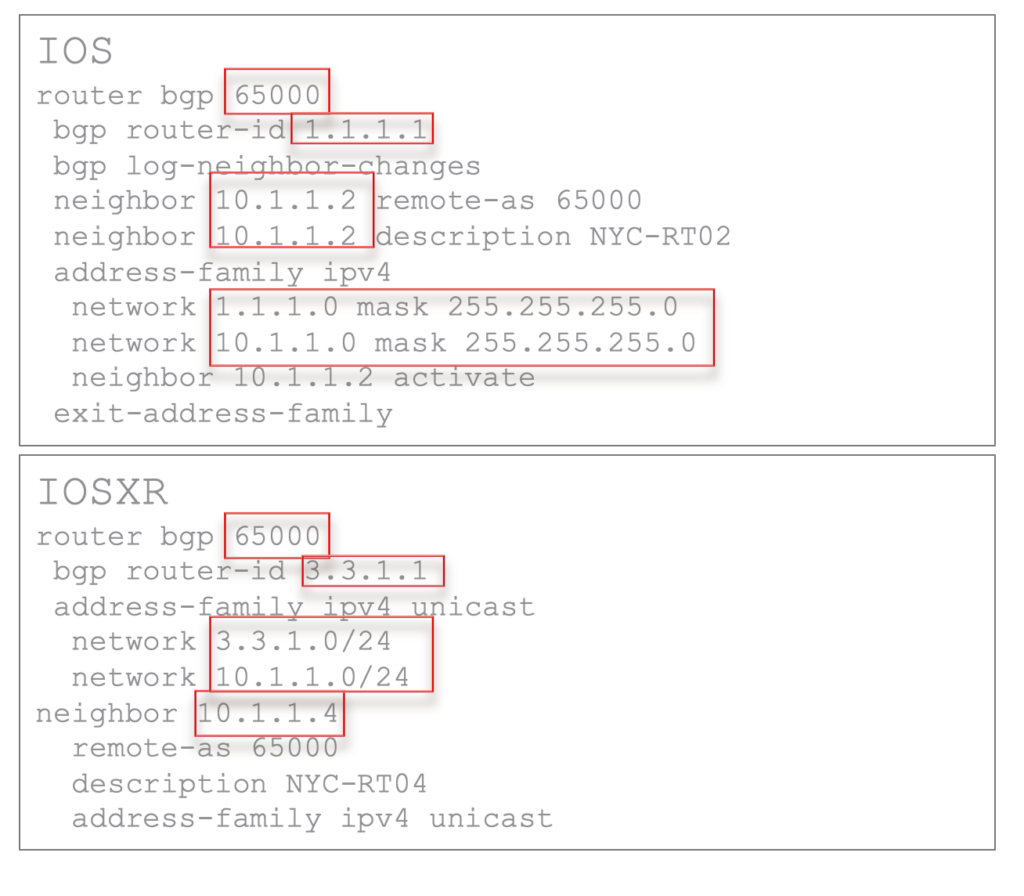

Reviewing the following configurations from multiple vendors:

I will pick out what is unique from the configuration, and thus a variable.

Based on observation of the above configurations, the following normalized data structure was created.

    bgp:
      asn: 6500
      networks:
        - "1.1.1.0/24"
        - "1.1.2.0/24"
        - "1.1.3.0/24"
      neighbors:
        - description: "NYC-RT02"
          ip: "10.10.10.2"
        - description: "NYC-RT03"
          ip: "10.10.10.3"
        - description: "NYC-RT04"
          ip: "10.10.10.4"

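With this normalized data model in mind, you can quickly see how the template below can be applied. Note that the template also references bgp['id'] and neighbor['asn'], so the data would need to carry a router ID and a per-neighbor ASN as well.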

    router bgp {{ bgp['asn'] }}
      router-id {{ bgp['id'] }}
      address-family ipv4 unicast
    {% for net in bgp['networks'] %}
        network {{ net }}
    {% endfor %}
    {% for neighbor in bgp['neighbors'] %}
      neighbor {{ neighbor['ip'] }} remote-as {{ neighbor['asn'] }}
        description {{ neighbor['description'] }}
        address-family ipv4 unicast
    {% endfor %}

In this example, the Jinja template provides the glue between a normalized data model and the vendor-specific configuration. Jinja is just used as an example; this could just as easily be converted to an operation with REST API, NETCONF, or any other vendor’s syntax.
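To illustrate that last point, here is a small sketch that maps the same normalized BGP data onto a request body for a REST API instead of a CLI template. The payload layout (`bgp-instance`, `local-as`, `peers`, and so on) is entirely hypothetical and does not correspond to any real vendor API; only the input data comes from the model above.

```python
import json

# The normalized data model, as it would look once loaded from YAML.
bgp = {
    "asn": 6500,
    "networks": ["1.1.1.0/24", "1.1.2.0/24", "1.1.3.0/24"],
    "neighbors": [
        {"description": "NYC-RT02", "ip": "10.10.10.2"},
        {"description": "NYC-RT03", "ip": "10.10.10.3"},
        {"description": "NYC-RT04", "ip": "10.10.10.4"},
    ],
}


def to_api_payload(bgp: dict) -> dict:
    """Map the normalized model onto a hypothetical vendor REST body."""
    return {
        "bgp-instance": {
            "local-as": bgp["asn"],
            "ipv4-unicast": {"prefixes": list(bgp["networks"])},
            "peers": [
                {"address": n["ip"], "description": n["description"]}
                for n in bgp["neighbors"]
            ],
        }
    }


payload = to_api_payload(bgp)
print(json.dumps(payload, indent=2))
```

The data model stays untouched; only the thin translation layer changes per target, whether that layer is a Jinja template or a function like this one.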

The Case for Localized Simple Normalized Data Models

Within Network to Code, we have found that simple normalized data models tend to get more traction than the more complex ones. While it is clear that each enterprise building its own normalized data model is not exactly efficient either—since each organization is having to reinvent the wheel—the adoption tends to offset that inefficiency.

Perhaps there is room within the community for some improvement here, such as creating a venue to easily publish data models and have others consume those data models. This can serve as inspiration, a starting point, and a means of comparison of various different normalized data models without the rigor that is required for solidified data models that would come from OC/IETF.

Data Normalization Enforcement

There are many tools for enforcing normalized data models. These will be covered in more detail within the Data Model blog, but here are a few:

- JSON Schema

- Any relational database

- Kwalify

You may even find a utility called Schema Enforcer valuable if you’re looking at using JSON Schema for data model enforcement within a CI pipeline. Check out this intro blog if you’re interested.
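To give a feel for what enforcement means in practice, below is a hand-rolled check written with only the Python standard library. It is a stand-in for what JSON Schema or Schema Enforcer would express declaratively, not an example of either tool, and the `validate_bgp` function and its rules are assumptions based on the BGP data model shown earlier.

```python
import ipaddress


def validate_bgp(data: dict) -> list[str]:
    """Return a list of human-readable errors; an empty list means valid."""
    errors = []
    bgp = data.get("bgp", {})

    if not isinstance(bgp.get("asn"), int):
        errors.append("bgp.asn must be an integer")

    for i, net in enumerate(bgp.get("networks", [])):
        try:
            ipaddress.ip_network(net)
        except ValueError:
            errors.append(f"bgp.networks[{i}] is not a valid prefix: {net!r}")

    for i, neighbor in enumerate(bgp.get("neighbors", [])):
        for key in ("description", "ip"):
            if key not in neighbor:
                errors.append(f"bgp.neighbors[{i}] is missing '{key}'")
        if "ip" in neighbor:
            try:
                ipaddress.ip_address(neighbor["ip"])
            except ValueError:
                errors.append(f"bgp.neighbors[{i}].ip is not a valid address")

    return errors


good = {"bgp": {"asn": 6500, "networks": ["1.1.1.0/24"],
                "neighbors": [{"description": "NYC-RT02", "ip": "10.10.10.2"}]}}
bad = {"bgp": {"asn": "6500", "networks": ["1.1.1.0/33"],
               "neighbors": [{"ip": "10.10.10.2"}]}}

print(validate_bgp(good))
for error in validate_bgp(bad):
    print(error)
```

Run in a CI pipeline, a check like this rejects malformed data before it ever reaches a template; a schema-based tool gives the same guarantee with far less custom code.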

Conclusion

There are many ways to normalize data, and many times the vendor syntax or output is nearly the same, so you may be able to reuse the exact same code from one vendor to another. By creating normalized data models, you can better prepare for future use cases, remove some amount of vendor lock-in, and provide a consistent developer experience across vendors.

Creating normalized data models takes some practice to get right, but it is a skill that can be honed over time and truly provide a richer experience.

-Ken
