Docker is a powerful tool for deploying applications and services, and numerous Docker orchestration tools are available to simplify the management of deployed containers. But what if you want to deploy a small number of services and don't want to set up and manage another application stack just to run a handful of containers? In this post, I will cover how I deployed a handful of services on a single Docker host. The supporting services I used are Let’s Encrypt to generate a wildcard certificate, Route 53 to register A and CNAME records, and NGINX to act as a reverse proxy that selects the backend service by SNI. I previously had some of these services deployed in containers on a Raspberry Pi as part of my Aquarium Controller, but I wanted more flexibility and did not want to pigeonhole myself into deploying only ARM-compatible containers. That, combined with wanting persistent services deployed at home, led me to build a new physical Linux host running Ubuntu 20.04 LTS and Docker.

This post is meant to show the flexibility of Docker; it is not a production guide for deploying with Docker. I fully recommend using orchestration when deploying containers, and I would have used it if I were working with multiple hosts or managing more services.

In this post, I will be using my InfluxDB service as the example service. This service is consumed both from outside the Docker host, via the NGINX reverse proxy, and from other containers via east/west container-to-container communication. All of my services are defined as code with docker-compose, which is easier to manage than raw docker run commands.

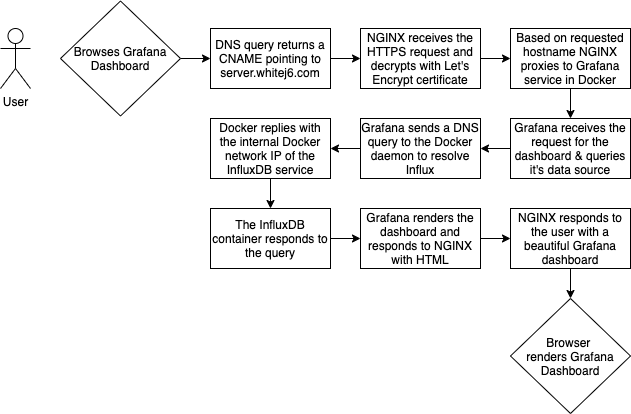

The diagram above shows an example communication flow for a user consuming a service deployed in Docker that in turn consumes another backend service on the same host. The end user is none the wiser about how the traffic flows, and as the administrator you can take advantage of container-to-container communication.

I am using Route 53 to register all of my DNS records; this reduces the number of services I manage on premises because I do not have to run a DNS server such as BIND 9. All of the records I publish in Route 53 resolve to private IP addresses and are not routable from outside my network. In my examples, I have a single A record for the physical host itself, and every service is a CNAME record pointing to the server’s A record. My domain name was registered with Route 53, which helps to streamline the process.

Docker provides networks that are internal to the Docker daemon, along with name resolution between containers that are on the same Docker network. To simplify the declaration of these supporting services, I am using docker-compose, and to communicate east/west between containers I only have to send traffic to the adjacent service name. In the above diagram, the Grafana service communicates with the InfluxDB service via http://influxdb:8086/. Docker resolves the hostname influxdb to the IP address of the InfluxDB container.

Although the services are deployed on my local home network and are behind an appropriate firewall, I prefer to deploy services with TLS from day one. This is a best practice that was instilled in me early in my time in enterprise environments. In my example, I am using Certbot to request and manage my certificate. To simplify certificate management, I am using a wildcard certificate for *.whitej6.com that covers all services. The setup also relies on Server Name Indication (SNI), a TLS extension in which the client states the hostname it is requesting during the handshake.

All the services that are meant to be consumed from outside the Docker daemon are exposed only to localhost in the port definition of the service. This ensures that ALL traffic I want to allow into Docker must come from the physical host or from an application deployed on the host. Each service that I need to expose has its own definition in an NGINX configuration file. The configuration tells NGINX which certificate to use and which requested server name maps to which underlying localhost port number. When NGINX receives an HTTPS request, it first determines which service is requested via SNI and then reverse proxies the request to the correct port on localhost. This allows me to terminate TLS on the physical host and run plaintext protocols from NGINX to the underlying Docker service. Performing my own TLS termination outside the containers simplifies container deployment, reduces the need to customize a vendor-provided container, and saves me from figuring out how each vendor performs TLS termination in their containers.
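
To make the routing decision concrete, here is a minimal Python sketch of the lookup described above: the server name presented in the TLS handshake selects a port on localhost. It is a conceptual model only and says nothing about how NGINX is implemented internally. The `ROUTES` table and the `upstream_for` helper are hypothetical names, and the Grafana entry with port 3000 is an assumed example rather than a value taken from this setup.

```python
from typing import Optional

# Hypothetical routing table: requested server name -> port published on localhost.
# influxdb/8086 comes from this post; grafana/3000 is an assumed example.
ROUTES = {
    "influxdb.whitej6.com": 8086,
    "grafana.whitej6.com": 3000,
}


def upstream_for(server_name: str) -> Optional[str]:
    """Return the plaintext upstream URL for a server name, or None if unknown."""
    port = ROUTES.get(server_name.lower().rstrip("."))
    if port is None:
        return None
    return f"http://localhost:{port}"


print(upstream_for("influxdb.whitej6.com"))  # http://localhost:8086
```

Because every upstream lives on localhost, nothing in this table is reachable from the network unless the proxy forwards to it.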

The example used is an actual depiction of services I am hosting within my home.

This example does not cover how to install Ubuntu, Docker, docker-compose, Certbot, or NGINX. Their installation is well documented elsewhere, and you should refer to that documentation. The example covers how these pieces are used together.

I am using Route 53 to host DNS records needed for the applications deployed in the Docker stack. Below is a table of the two records that are needed for deploying. At a later step in the example, I will create a third record that is required for generating my certificate.

| Record Type | Source | Destination |
|---|---|---|
| A | ubuntu-server.whitej6.com | 10.0.0.16 |
| CNAME | influxdb.whitej6.com | ubuntu-server.whitej6.com |

You can see in the output that influxdb.whitej6.com is a CNAME for ubuntu-server.whitej6.com, which resolves to 10.0.0.16.

    ➜ ~ dig influxdb.whitej6.com

    ; <<>> DiG 9.10.6 <<>> influxdb.whitej6.com
    ;; global options: +cmd
    ;; Got answer:
    ;; ->>HEADER<<- opcode: QUERY, status: NOERROR, id: 61162
    ;; flags: qr rd ra; QUERY: 1, ANSWER: 2, AUTHORITY: 0, ADDITIONAL: 1

    ;; OPT PSEUDOSECTION:
    ; EDNS: version: 0, flags:; udp: 512
    ;; QUESTION SECTION:
    ;influxdb.whitej6.com. IN A

    ;; ANSWER SECTION:
    influxdb.whitej6.com. 236 IN CNAME ubuntu-server.whitej6.com.
    ubuntu-server.whitej6.com. 238 IN A 10.0.0.16

    ;; Query time: 21 msec
    ;; SERVER: 8.8.8.8#53(8.8.8.8)
    ;; WHEN: Tue Apr 06 13:44:41 CDT 2021
    ;; MSG SIZE rcvd: 93

    ➜ ~
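
The resolution chain in that answer section can be sketched in a few lines of Python. This is only an illustration of following a CNAME to its A record using the two records from the table above; the `RECORDS` dictionary and the `resolve` helper are hypothetical names, and no real DNS query is made.

```python
# The two records from the table above, keyed by name: (record type, value).
RECORDS = {
    "ubuntu-server.whitej6.com": ("A", "10.0.0.16"),
    "influxdb.whitej6.com": ("CNAME", "ubuntu-server.whitej6.com"),
}


def resolve(name: str, max_hops: int = 8) -> str:
    """Follow CNAME records until an A record is found and return its address."""
    for _ in range(max_hops):
        record_type, value = RECORDS[name.rstrip(".")]
        if record_type == "A":
            return value
        name = value  # CNAME: continue with the target name
    raise RuntimeError("too many CNAME hops")


print(resolve("influxdb.whitej6.com"))  # 10.0.0.16
```

Adding another service then only requires one more CNAME entry that points at the host's A record.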

Using Certbot to generate my Let’s Encrypt certificate provides a simple end-to-end solution for generating and managing a signed certificate. There are a few different challenges that can be used to validate domain ownership. Since I have minimal experience with Certbot and I can easily administer the DNS records in my domain, I chose to use a DNS challenge. In the example below, I issue the command and Certbot responds with a TXT record I need to create for _acme-challenge.whitej6.com with a value of XXXXXX-XXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXX. After I create the record, I hit Enter to continue, and Certbot performs the challenge and validates that the value of the TXT record matches what was expected. Let’s Encrypt certificates are short-lived and last 90 days. Certbot supports renewing certificates via the certbot renew command. Note that a certificate issued with the manual method, as here, cannot be renewed unattended unless Certbot is also given an authentication hook or a DNS plugin that can publish the TXT record for it.

    ➜ ~ sudo certbot -d "*.whitej6.com" --manual --preferred-challenges dns certonly
    Saving debug log to /var/log/letsencrypt/letsencrypt.log
    Plugins selected: Authenticator manual, Installer None
    Requesting a certificate for *.whitej6.com
    Performing the following challenges:
    dns-01 challenge for whitej6.com

    - - - - - - - - - - - - - - - - - - - - - - - - - - - - - - - - - - - - - - - -
    Please deploy a DNS TXT record under the name
    _acme-challenge.whitej6.com with the following value:

    XXXXXX-XXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXX

    Before continuing, verify the record is deployed.
    - - - - - - - - - - - - - - - - - - - - - - - - - - - - - - - - - - - - - - - -
    Press Enter to Continue
    Waiting for verification...
    Cleaning up challenges

    IMPORTANT NOTES:
     - Congratulations! Your certificate and chain have been saved at:
       /etc/letsencrypt/live/whitej6.com/fullchain.pem
       Your key file has been saved at:
       /etc/letsencrypt/live/whitej6.com/privkey.pem
       Your certificate will expire on 2021-07-05. To obtain a new or
       tweaked version of this certificate in the future, simply run
       certbot again. To non-interactively renew *all* of your
       certificates, run "certbot renew"
     - If you like Certbot, please consider supporting our work by:

       Donating to ISRG / Let's Encrypt: https://letsencrypt.org/donate
       Donating to EFF: https://eff.org/donate-le

    ➜ ~
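
Since the certificate only lasts 90 days, it helps to know when renewal becomes relevant. The following Python sketch does the date arithmetic for the expiry date shown in the Certbot output. The 30-day renewal window is an assumption based on Certbot's default behaviour, the dates are rounded to whole days, and the helper names are hypothetical.

```python
from datetime import date, timedelta

CERT_LIFETIME = timedelta(days=90)  # Let's Encrypt lifetime stated in this post
RENEW_WINDOW = timedelta(days=30)   # assumed: Certbot's default renewal threshold


def renewal_opens(expires: date) -> date:
    """Approximate date from which `certbot renew` treats the certificate as due."""
    return expires - RENEW_WINDOW


def days_left(expires: date, today: date) -> int:
    """Whole days remaining until the certificate expires."""
    return (expires - today).days


expires = date(2021, 7, 5)  # expiry date from the Certbot output above
print(expires - CERT_LIFETIME)               # 2021-04-06
print(renewal_opens(expires))                # 2021-06-05
print(days_left(expires, date(2021, 4, 6)))  # 90
```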

The deployment and management of containers is done via docker-compose to simplify the management of Docker.

    ---
    version: "3"
    services:
      influxdb:
        image: influxdb:latest
        restart: always
        ports:
          - "127.0.0.1:8086:8086"
        volumes:
          - "/influxdb:/var/lib/influxdb"
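
The ports entry is what keeps the service off the network: the leading 127.0.0.1 binds the published port to the host's loopback interface only. As a rough illustration, the Python sketch below parses the short port syntax and checks whether a mapping is loopback-only. It handles just the IPv4 forms used here, and `PortMapping`, `parse_port`, and `is_loopback_only` are hypothetical names, not part of Docker or docker-compose.

```python
import ipaddress
from typing import NamedTuple


class PortMapping(NamedTuple):
    host_ip: str
    host_port: int
    container_port: int


def parse_port(spec: str) -> PortMapping:
    """Parse "[HOST_IP:]HOST_PORT:CONTAINER_PORT" (IPv4 only in this sketch)."""
    parts = spec.split(":")
    if len(parts) == 3:
        host_ip, host_port, container_port = parts
    elif len(parts) == 2:
        # No address given: Docker publishes the port on all interfaces.
        host_ip = "0.0.0.0"
        host_port, container_port = parts
    else:
        raise ValueError(f"unsupported port spec: {spec!r}")
    return PortMapping(host_ip, int(host_port), int(container_port))


def is_loopback_only(spec: str) -> bool:
    """True when the published port is bound to a loopback address."""
    return ipaddress.ip_address(parse_port(spec).host_ip).is_loopback


print(is_loopback_only("127.0.0.1:8086:8086"))  # True
print(is_loopback_only("8086:8086"))            # False
```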

I want each deployed service to be able to communicate with the other services as needed. To accomplish this, I use the same compose project name for every deployment, so all of the services belong to one project and share its default network. If each service were deployed in its own compose project, name resolution and east/west communication would become more complicated. In the output below, docker-compose warns about orphan containers because the other services in the project are declared outside this docker-compose.yml file; this is to be expected.

    ➜ ~ export COMPOSE_PROJECT_NAME="server"
    ➜ ~ docker-compose -f influxdb/docker-compose.yml up -d
    Building with native build. Learn about native build in Compose here: https://docs.docker.com/go/compose-native-build/
    WARNING: Found orphan containers (server_grafana_1, server_gitlab_1, server_redis_1, server_registry_1, server_netbox_1, server_postgres_1) for this project. If you removed or renamed this service in your compose file, you can run this command with the --remove-orphans flag to clean it up.
    Starting server_influxdb_1 ... done
    ➜ ~
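
The project name matters because docker-compose derives both the container names and the default network name from it. The Python sketch below reproduces that naming for the project used here. It assumes the underscore-separated naming visible in the output above and the conventional `<project>_default` network name; the helper functions are hypothetical and only mirror the convention.

```python
def container_name(project: str, service: str, index: int = 1) -> str:
    """Container name in the underscore style seen in the output above."""
    return f"{project}_{service}_{index}"


def default_network(project: str) -> str:
    """Name of the default network Compose creates for a project."""
    return f"{project}_default"


project = "server"  # value of COMPOSE_PROJECT_NAME in this post
for service in ("influxdb", "grafana"):
    print(container_name(project, service), "->", default_network(project))
```

Both services end up on the same network, which is why one container can reach another by its service name.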

I personally like to keep my base NGINX configuration file at its defaults and override what I need with individual .conf files in the conf.d folder. I declare each override in its own file to make it easier for me to identify and make changes. The file paths below are based on the default install location of NGINX. Your install may use different paths, but the concept is the same.

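The first server block forces all HTTP traffic to redirect to HTTPS and keeps the requested host intact. The second maps the requested server name to the certificate and to the localhost port of the service.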

    server {
        listen 80 default_server;
        return 301 https://$host$request_uri;
    }

    map $http_x_forwarded_proto $thescheme {
        default $scheme;
        https https;
    }

    server {
        # Inbound requested hostname
        server_name influxdb.whitej6.com;
        listen 443 ssl;

        # Let's Encrypt certificate location
        ssl_certificate /etc/letsencrypt/live/whitej6.com/fullchain.pem;
        ssl_certificate_key /etc/letsencrypt/live/whitej6.com/privkey.pem;

        client_max_body_size 25m;

        proxy_set_header X-Forwarded-Host $http_host;
        proxy_set_header X-Real-IP $remote_addr;
        proxy_set_header X-Forwarded-Proto $thescheme;
        add_header P3P 'CP="ALL DSP COR PSAa PSDa OUR NOR ONL UNI COM NAV"';

        # Where to reverse proxy HTTP traffic to
        location / {
            proxy_pass http://localhost:8086;
        }
    }
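
Since every exposed service follows the same pattern, the per-service file differs only in the server name and the localhost port. The Python sketch below renders a trimmed-down version of the server block above from those two values. It is an illustration of the pattern rather than a tool used in this setup; `SERVER_BLOCK` and `render_server_block` are hypothetical names, and the proxy headers are left out for brevity.

```python
# Doubled braces are literal braces in str.format templates.
SERVER_BLOCK = """\
server {{
    server_name {name}.{domain};
    listen 443 ssl;

    ssl_certificate /etc/letsencrypt/live/{domain}/fullchain.pem;
    ssl_certificate_key /etc/letsencrypt/live/{domain}/privkey.pem;

    location / {{
        proxy_pass http://localhost:{port};
    }}
}}
"""


def render_server_block(name: str, port: int, domain: str = "whitej6.com") -> str:
    """Fill in the per-service values of a reverse-proxy server block."""
    if not 1 <= port <= 65535:
        raise ValueError(f"invalid port: {port}")
    return SERVER_BLOCK.format(name=name, domain=domain, port=port)


print(render_server_block("influxdb", 8086))
```

The rendered text could be written to a file in conf.d, one file per service, which matches the one-override-per-file layout described earlier.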

Once the configuration is complete for NGINX, you need to restart the NGINX service. I am using systemctl to manage running system services on the host.

    ➜ ~ sudo systemctl restart nginx
    ➜ ~

    ➜ influxdb echo "curl from outside the docker network"
    curl from outside the docker network
    ➜ influxdb curl https://influxdb.whitej6.com/health
    {"checks":[],"message":"ready for queries and writes","name":"influxdb","status":"pass","version":"1.8.4"}%
    ➜ influxdb
    ➜ influxdb echo "curl from inside the docker network"
    curl from inside the docker network
    ➜ influxdb docker exec -it server_gitlab_1 sh -c "curl http://influxdb:8086/health"
    {"checks":[],"message":"ready for queries and writes","name":"influxdb","status":"pass","version":"1.8.4"}%
    ➜ influxdb
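
The same check can be scripted. The Python sketch below parses a health response like the one returned by the curl commands above and reports whether the status is "pass". The function names are hypothetical, and `check` is shown only as an assumed way to fetch the URL with the standard library; it needs network access to the host and is not called here.

```python
import json
from urllib.request import urlopen


def is_healthy(body: str) -> bool:
    """True when a /health response body reports status "pass"."""
    return json.loads(body).get("status") == "pass"


def check(url: str, timeout: float = 5.0) -> bool:
    """Fetch a health URL and evaluate it; urlopen raises on HTTP error statuses."""
    with urlopen(url, timeout=timeout) as response:
        return is_healthy(response.read().decode("utf-8"))


# Response body copied from the curl output above.
sample = (
    '{"checks":[],"message":"ready for queries and writes",'
    '"name":"influxdb","status":"pass","version":"1.8.4"}'
)
print(is_healthy(sample))  # True
```

From the host, `check("https://influxdb.whitej6.com/health")` would exercise the NGINX path; from inside a container, the URL would be `http://influxdb:8086/health`.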

With all the services deployed and verified, I have a fully functioning multi-tenant Docker host securely serving each service over HTTPS, with DNS records to improve usability. This has enabled me to move the Grafana and InfluxDB services off the Raspberry Pi hosting my aquarium controller and onto the Docker host to improve the user experience. Hopefully this post helps you in securely deploying services to a Docker host.
