
A prior NTC blog post by Tim Fiola laid out the case that it is actually a company’s culture, not technical prowess, that enables automation to take hold.

This blog will dig a bit deeper into that cultural component and discuss how the culture around automation helps a company derive real value. We will discuss two primary aspects:

- How the Network Engineer’s role changes when adopting an automation-first approach

- How an organization could be structured to derive the most value from automation

The Network Engineer’s Role Will Change, Not Disappear

One common misconception that network services organizations share is that Network Engineers are now obsolete and that automation will eliminate their jobs or require them to become developers. This is patently false.

There will always be a need for people who understand the IT infrastructure and network at a technical level.

The truth is that while the Network Engineer’s role will need to change to adapt to an automated environment, the Network Engineer’s knowledge and skills are still very much needed. The sections that follow describe what that means in practice.

Network Engineers Do Not Need to Become Developers

Oftentimes network engineering leadership and Network Engineers themselves assume that Network Engineers need to be completely re-skilled. Repeat after us: Network Engineers do not NEED to become software developers. In reality, a firm’s automation evolution does not necessarily mean that its Network Engineers will have to become Developers, Network Reliability Engineers, or Site Reliability Engineers. Network engineering and software engineering are distinct skill sets and need to be respected as such.

While it is true that there are additional skill sets required to run an automated IT infrastructure, the nuance here comes from the company’s size, capabilities, and ultimate goals. For example, can the company create and nurture the required skill sets in-house, or will it need to take on partners to fulfill some roles required to run an automated infrastructure?

A Network Engineer’s responsibilities in an automated environment don’t require that they become a full-time developer. At a minimum, however, those responsibilities will likely require that the Network/IT Engineer learn design-oriented thinking: considering how to break larger workflows into smaller, more manageable, and repeatable parts. Oftentimes Network Engineers develop this type of thinking when they learn basic Python, another coding language, or a programmatic tool.
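To make design-oriented thinking a little more concrete, here is a minimal Python sketch of a hypothetical device-onboarding workflow broken into small, single-purpose steps that a simple runner executes in order. The hostname convention, the approved-version list, and every step name are illustrative assumptions, not a prescribed design.

```python
from typing import Callable

# Each step takes the workflow state (a dict) and returns an updated copy.
State = dict
Step = Callable[[State], State]

# Hypothetical naming convention: "<site>-<role>-<number>", e.g. "nyc-leaf-01".
def parse_hostname(state: State) -> State:
    site, role, number = state["hostname"].split("-")
    return {**state, "site": site, "role": role, "number": int(number)}

# Hypothetical list of software versions the firm has approved.
APPROVED_VERSIONS = {"17.9.4", "17.12.2"}

def check_version(state: State) -> State:
    return {**state, "version_ok": state["os_version"] in APPROVED_VERSIONS}

def decide_next_action(state: State) -> State:
    action = "onboard" if state["version_ok"] else "upgrade_first"
    return {**state, "action": action}

def run(steps: list[Step], state: State) -> State:
    """Run the steps in order, feeding each one the previous step's output."""
    for step in steps:
        state = step(state)
    return state

# The larger workflow is just an ordered list of small, reusable parts.
ONBOARD_DEVICE = [parse_hostname, check_version, decide_next_action]

result = run(ONBOARD_DEVICE, {"hostname": "nyc-leaf-01", "os_version": "17.9.4"})
print(result["site"], result["role"], result["action"])  # nyc leaf onboard
```

Because each step is a small function, it can be tested on its own and reused in other workflows.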

Growing your skill set incrementally is an important part of your career path: it is an opportunity to maintain your relevance.

It is important here to draw an explicit distinction between learning a coding language, such as Python, versus becoming a full-time Developer: learning new incremental skills is important in any existing career path. There is no hard requirement to become a Developer, which is an entirely different career path.

Traditional Network Engineering Disciplines WILL Need to Adapt to an Automated Environment

The traditional Network Engineer who enjoys working in the CLI or copying and pasting commands to make config changes is going to be disappointed. Those tasks will not be needed at any meaningful scale in an automated environment.

Here are some reasonable day-to-day changes in duties that a Network or IT Engineer can expect on the firm’s automation journey:

- Examine how to automate existing workflows with design-oriented thinking

- Employ design-oriented thinking when designing new workflows

- Become product owners or subject-matter experts (SMEs) for network products/services

- Write prototype scripts and then work with a developer to:

  - Harden them for production

  - Make them scalable

  - Design appropriate automated testing

- Work with developers to translate configuration and functional device requirements into configuration templates

- Work with the broader organization to integrate Network/IT Engineering’s automation into a broader automation infrastructure

- Understand how to improve network and IT infrastructure health with automated solutions

- Learn enough Python/Ansible/Git/etc. to understand and trust an automated infrastructure
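As an example of translating configuration requirements into templates, together with the kind of automated testing listed above, here is a small sketch using Python’s built-in `string.Template`. The IOS-style access-port syntax, the function name `render_access_port`, and the VLAN range check are assumptions for illustration; many teams use a dedicated templating engine such as Jinja2 instead.

```python
from string import Template

# Hypothetical IOS-style access-port template. The exact syntax is an
# assumption and would come from the Network Engineer's platform knowledge.
ACCESS_PORT = Template(
    "interface $interface\n"
    " description $description\n"
    " switchport mode access\n"
    " switchport access vlan $vlan_id\n"
)

def render_access_port(interface: str, vlan_id: int, description: str) -> str:
    # Functional requirement captured from the engineer: VLAN IDs are 1-4094.
    if not 1 <= vlan_id <= 4094:
        raise ValueError(f"invalid VLAN ID: {vlan_id}")
    # substitute() raises KeyError if any placeholder is left unfilled.
    return ACCESS_PORT.substitute(
        interface=interface, vlan_id=vlan_id, description=description
    )

def test_render_access_port() -> None:
    """Automated checks a developer and engineer might agree on together."""
    config = render_access_port("GigabitEthernet1/0/10", 120, "printer")
    assert config.startswith("interface GigabitEthernet1/0/10\n")
    assert " switchport access vlan 120\n" in config
    try:
        render_access_port("GigabitEthernet1/0/10", 5000, "bad")
    except ValueError:
        pass
    else:
        raise AssertionError("out-of-range VLAN was accepted")

test_render_access_port()
print(render_access_port("GigabitEthernet1/0/10", 120, "printer"))
```

The engineer supplies what the configuration must look like and which values are valid; the template and its tests encode that knowledge so it can be applied the same way every time.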


Notice that each of the tasks above requires an understanding of how a network operates and the mechanics that take place within a network. This brings us to a very important point: there will always be a need for the Network Engineer’s knowledge; it’s just that the knowledge will be expressed differently.


Look again at the statement above: “the knowledge will be expressed differently.” When you tell someone that the way they go about their job, which is an aspect of their way of life, is going to change, that is a cultural change. Cultural changes can be difficult and oftentimes meet resistance. This is why it’s important for a firm’s leadership to be aware of, foster, and encourage the changes.

How Should the Organization Be Structured and Managed?

The structure of an organization is perhaps the most important factor that will determine how successful an automation transformation will be.

There are several very important, interrelated factors that fold into this structure.

Trust . . .

. . . Across the Firm

Trust across the organization’s different components will ultimately dictate the scope of its automation.

Imagine a Network Engineer writing a Python script or Ansible playbook to add a VLAN to a network device and interface to make their life easier. Adding a VLAN is a task that the Engineer may do multiple times a day, and the script lets the Engineer spend perhaps one minute on a task that previously may have taken seven minutes.

Without trust, automation provides localized value in the silos, but it will fail to deliver strategic value back to the company.

The larger picture here is the workflow. It is important to focus on workflows because a workflow is how a firm transforms its resources into value. Workflows typically contain many tasks, with localized bottlenecks along the way. Together, these tasks and bottlenecks determine how long the workflow takes end to end and how many times it can be executed in a given period.

In order to realize value from automating a given workflow, the company needs to reduce the time for each task and mitigate the bottlenecks.

In this example, the Engineer is adding a VLAN to a device and interface as part of an end-to-end service activation workflow that includes the internal end users, Network Engineering, Capacity Planning, and Procurement departments.

However, the larger company will not benefit from this localized/siloed automation because using a script to quickly add the VLAN to the device does not have a dramatic impact on the end-to-end workflow. Using the script makes the Engineer’s life easier, but doing so has a minimal impact on increasing the throughput of the end-to-end workflow.
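A quick back-of-the-envelope calculation shows why. The sketch below treats the service activation workflow as a strict sequence of steps with made-up durations (every number here is a hypothetical assumption, not measured data) and measures what happens when only the VLAN step is automated.

```python
# Hypothetical durations, in minutes, for one run of the service activation
# workflow. Real numbers would come from process discovery. Assumes the steps
# run strictly one after another, with no parallel work.
DAY = 24 * 60

WORKFLOW_MINUTES = {
    "end user submits request": 2 * DAY,
    "capacity planning review": 3 * DAY,
    "procurement": 10 * DAY,
    "network engineering adds VLAN": 7,
    "handoff to end user": 1 * DAY,
}

def lead_time(durations: dict[str, int]) -> int:
    """End-to-end time of a sequential workflow: the sum of its step times."""
    return sum(durations.values())

before = lead_time(WORKFLOW_MINUTES)
# Automate only the VLAN step: 7 minutes drops to 1 minute.
after = lead_time({**WORKFLOW_MINUTES, "network engineering adds VLAN": 1})
saved_pct = 100 * (before - after) / before
# In this sequential model, the longest step is the biggest bottleneck.
bottleneck = max(WORKFLOW_MINUTES, key=WORKFLOW_MINUTES.get)

print(f"before: {before} min, after: {after} min, saved: {saved_pct:.3f}%")
# before: 23047 min, after: 23041 min, saved: 0.026%
print(f"largest step: {bottleneck}")  # largest step: procurement
```

Under these assumed numbers, the siloed script saves the Engineer six minutes but shortens the end-to-end workflow by well under a tenth of a percent; the meaningful gains sit in the other groups’ steps.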


The firm will not benefit unless there is trust and cooperation between groups to automate each task in the complete workflow, so that the time to execute the workflow drops and the number of times the workflow can be executed in a given period increases.


. . . And in the Technology

Another aspect of trust is trust in the technology itself. If the people involved, including the Network/IT Engineers, do not trust the technology, they will not accept it. Understanding not just what the technology does, but how it operates under the hood, goes a long way toward building that trust. Earlier, this post noted that a Network Engineer would likely need to learn basic Python; building trust in the technology is part of the reason why.

People won’t trust what they don’t understand.

Process

One of the benefits of starting the automation journey is that it requires in-depth formal process discovery.

Here is a very common example: someone at the company is tasked with automating a given workflow and starts questioning all the groups involved. Along the way, that person discovers that step 5 of the workflow requires a specific IP address to be assigned to a given interface, and SOMEHOW the Engineer gets that IP address.


Where did this specific IP address come from? In our example scenario, the IP address was not an input into the original provisioning order. After some more digging, it turns out that the person tasked with provisioning the interface in step 5 goes to a spreadsheet that is maintained by some person in another department who happens to know what IP addresses belong on a given device. Until this examination of the workflow happened, that spreadsheet and the information in it were never part of the documented workflow: it was a part of a shadow workflow.

Shadow workflows often pop up when formal workflows are not well-defined. When this happens, the people responsible for executing the workflow take the initiative to find the required information themselves, but those extra steps rarely get documented, which leaves the company blind to the full workflow.


Since automating a workflow, by definition, requires understanding all of its steps, it forces the company to get a full understanding of its workflows. Automation is not strictly about automating: it is first about understanding, and then automating.
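One way to support this kind of process discovery is to model each workflow step with the inputs it needs and the outputs it produces, then flag any input that neither the original order nor an earlier step supplies. The Python sketch below is a hypothetical model of the provisioning example above; the step names and field names are assumptions. Each flagged input is a candidate shadow workflow, like the IP address spreadsheet.

```python
from dataclasses import dataclass, field

@dataclass
class Step:
    name: str
    needs: set[str] = field(default_factory=set)     # inputs the step consumes
    produces: set[str] = field(default_factory=set)  # outputs the step creates

def undocumented_inputs(order_fields: set[str], steps: list[Step]) -> dict[str, set[str]]:
    """For each step, list inputs that neither the original order nor any
    earlier step provides. Each hit is a candidate shadow workflow."""
    available = set(order_fields)
    gaps: dict[str, set[str]] = {}
    for step in steps:
        missing = step.needs - available
        if missing:
            gaps[step.name] = missing
        available |= step.produces
    return gaps

# Hypothetical model of the provisioning workflow from the example above.
order = {"customer", "device", "interface", "bandwidth"}
steps = [
    Step("1 validate order", needs={"customer", "bandwidth"}),
    Step("2 capacity check", needs={"device", "bandwidth"}, produces={"capacity_ok"}),
    Step("3 procure optics", needs={"capacity_ok"}, produces={"optic_serial"}),
    Step("4 cable interface", needs={"device", "interface", "optic_serial"}),
    Step("5 provision interface", needs={"device", "interface", "ip_address"}),
]

print(undocumented_inputs(order, steps))  # {'5 provision interface': {'ip_address'}}
```

Once the gap is found, the fix is to make the source explicit, for example by adding a documented step that looks the address up in a shared system instead of a private spreadsheet.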

Reuse

An automated organization needs to be set up to share and reuse code across silo boundaries. For example, a script to get a subnet in a workflow for a new router deployment can also be used in a workflow to deploy a new server farm. Coordinating automation that will deliver real value back to the company requires a central group to plan and coordinate workflow steps and the methodology to implement those steps in each workflow.
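A minimal sketch of such a shared building block: one subnet-allocation function, written once with Python’s standard `ipaddress` module, that both the router-deployment and server-farm workflows can call. The function name, the address pool, the prefix lengths, and the existing allocations are all hypothetical.

```python
import ipaddress

def next_free_subnet(pool: str, prefix_len: int, used: list[str]) -> str:
    """Return the first subnet of size /prefix_len inside `pool` that does not
    overlap any already-allocated subnet in `used` (simple first-fit)."""
    pool_net = ipaddress.ip_network(pool)
    used_nets = [ipaddress.ip_network(u) for u in used]
    for candidate in pool_net.subnets(new_prefix=prefix_len):
        if not any(candidate.overlaps(u) for u in used_nets):
            return str(candidate)
    raise RuntimeError(f"no free /{prefix_len} left in {pool}")

# Hypothetical allocations already made; in practice these would come from
# the firm's IPAM or source of truth rather than a hard-coded list.
USED = ["10.10.0.0/24", "10.10.1.0/31"]

# The router-deployment workflow asks for a point-to-point /31 ...
print(next_free_subnet("10.10.0.0/16", 31, USED))  # 10.10.1.2/31
# ... and the server-farm workflow reuses the same function for a /24.
print(next_free_subnet("10.10.0.0/16", 24, USED))  # 10.10.2.0/24
```

First-fit allocation keeps the example simple; the point is that both workflows depend on one tested function rather than two diverging copies.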

Change Control

Change control is perhaps one of the biggest considerations when transitioning to an automated infrastructure. The central question around automating network changes is this: does an automated change require the same process as a traditional change?

The short answer to that question is likely no. The longer answer goes back to culture:

- What does change management really need to assess the risk of an automated change?

- Does an automated change require the same process as a traditional (manual) change?

- Can the company make a new process for automated changes?

- Do the parties trust the technology and automated processes?

- How can they build that trust? (testing, transparency, training, etc.)
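Transparency is one concrete way to build that trust. As a hedged example, an automated workflow can show reviewers exactly what it is about to change by diffing the running configuration against the intended one. This sketch uses Python’s standard `difflib`; the function name, device name, and configurations are hypothetical.

```python
import difflib

def change_preview(running: str, intended: str, device: str) -> str:
    """Return a unified diff of running vs. intended config for human review."""
    diff = difflib.unified_diff(
        running.splitlines(keepends=True),
        intended.splitlines(keepends=True),
        fromfile=f"{device} (running)",
        tofile=f"{device} (intended)",
    )
    return "".join(diff)

# Hypothetical configs; a real workflow would pull the running config from the
# device and render the intended config from a template.
RUNNING = (
    "interface GigabitEthernet1/0/10\n"
    " switchport mode access\n"
    " switchport access vlan 100\n"
)
INTENDED = RUNNING.replace("vlan 100", "vlan 120")

preview = change_preview(RUNNING, INTENDED, "nyc-leaf-01")
print(preview)
```

Reviewers see only the lines that will change, and an empty preview means the device already matches its intended state.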

Should change management look the same for both manual and automated changes?

All operations resulting in a change should be part of a larger change management strategy that considers:

- The risk associated with a change

- The expected impact of a successful or unsuccessful change

- The tracking and/or auditing of changes

- The communication of change

- A rollout and rollback plan

- The approval workflow and sign-off process

- Scheduling of changes
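These considerations can also be captured as data, so that an automated workflow can check a change before it runs. The sketch below is a hypothetical change record whose fields mirror the list above, plus a made-up approval policy in which a low-risk automated change needs fewer sign-offs than a manual one. The field names, risk levels, and policy are assumptions for illustration, not a recommended standard.

```python
from dataclasses import dataclass, field, replace
from datetime import datetime
from typing import Optional

RISK_LEVELS = ("low", "medium", "high")

@dataclass
class ChangeRecord:
    change_id: str                     # tracking and auditing
    summary: str
    risk: str                          # one of RISK_LEVELS
    expected_impact: str               # impact of success and of failure
    rollout_plan: str
    rollback_plan: str
    notify: list[str] = field(default_factory=list)     # communication
    approvals: list[str] = field(default_factory=list)  # sign-off
    scheduled_for: Optional[datetime] = None            # scheduling
    automated: bool = False

def required_approvals(change: ChangeRecord) -> int:
    # Hypothetical policy: a low-risk change executed by a tested, automated
    # workflow needs one approval; every other change needs two.
    return 1 if change.automated and change.risk == "low" else 2

def readiness_problems(change: ChangeRecord) -> list[str]:
    """List the reasons this change is not ready to execute (empty = ready)."""
    problems = []
    if change.risk not in RISK_LEVELS:
        problems.append(f"unknown risk level: {change.risk!r}")
    if not change.rollback_plan.strip():
        problems.append("missing rollback plan")
    if not change.notify:
        problems.append("no one will be notified")
    needed = required_approvals(change)
    if len(change.approvals) < needed:
        problems.append(f"needs {needed} approval(s), has {len(change.approvals)}")
    if change.scheduled_for is None:
        problems.append("not scheduled")
    return problems

change = ChangeRecord(
    change_id="CHG-0001",
    summary="Add VLAN 120 to nyc-leaf-01 GigabitEthernet1/0/10",
    risk="low",
    expected_impact="Success: printer VLAN reachable. Failure: one access port affected.",
    rollout_plan="Run the add-VLAN workflow",
    rollback_plan="Run the remove-VLAN workflow",
    notify=["noc@example.com"],
    approvals=["network-lead"],
    scheduled_for=datetime(2024, 1, 15, 22, 0),
    automated=True,
)

print(readiness_problems(change))                            # []
print(readiness_problems(replace(change, automated=False)))  # ['needs 2 approval(s), has 1']
```

Whatever the actual policy turns out to be, writing it down this explicitly is itself a way to build the trust discussed above: everyone can see what an automated change must satisfy.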

The prior NTC blog post on culture also covers some other considerations for Human Resources and management.

Wrapping Up

Specific technology references have been conspicuously absent from this blog post. This is because automation is ultimately sustained via a cultural transformation; specific technologies are a secondary consideration. In some cases, it’s about changing what a firm does; in other cases, it’s about changing how the firm goes about doing existing things, like executing workflows. These are cultural changes that need to be fostered and encouraged across the firm, because the changes need to take place across many organizations and levels in the firm.


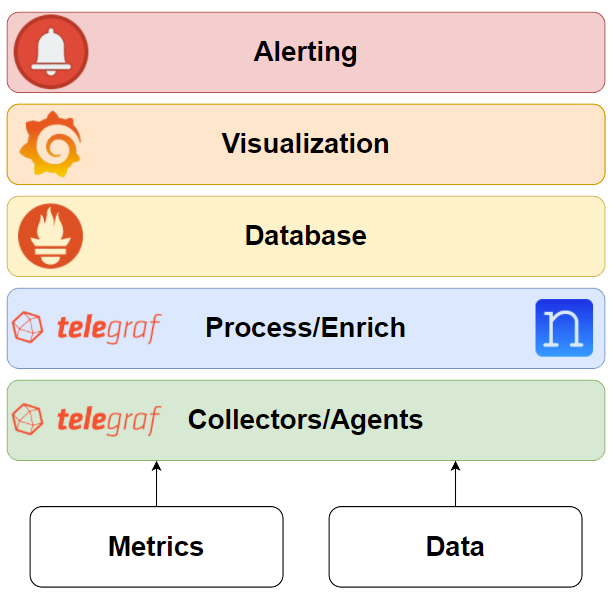

Arguably, we can consider a large portion of the technical part of the automation journey solved: today, firms can select the right technical components from a wide variety of options to fit into their automation architecture and needs, with more options and improvements being added as time goes on. The technology is sound. It is ultimately the cultural realignment within the firm, including the points discussed above, that will determine whether the automation transformation will be successful in the long run.

Thank you, and have a great day!

-Tim Fiola
