Network to Code recently open sourced schema-enforcer, and my mind immediately turned to integrating this tool with CI pipelines. The goal is to have fast, repeatable, and reusable pipelines that ensure the integrity of the data stored in Git repositories. We will accomplish repeatability and reusability by packaging schema-enforcer with Docker and publishing the image to a common Docker registry.

By integrating repositories containing structured data with a CI pipeline that enforces schema, you are better able to predict the repeatability of the downstream automation that consumes the structured data. This is critical when the data is the source of truth for automation. It also lets you react to a schema violation before the data is used by a configuration tool such as Ansible. Imagine being able to empower other teams to make changes to data repositories and trust that the automation is performing the checks an engineer does manually today.

Containers can be a catalyst to speeding up the process of CI execution for the following reasons:

For today’s example I am using my locally hosted GitLab and Docker Registry. This was done to showcase the power of building internal resources that can be easily integrated with on-premise solutions. This example could easily be adapted to run in GitHub & Travis CI with the same level of effectiveness and speed of execution.

See the official Docker documentation for details on Dockerfile construction and docker build commands. The Dockerfile starts with python:3.8 as a base. We then set the working directory, install schema-enforcer, and lastly set the default entrypoint and command for the container image.

FROM python:3.8
WORKDIR /usr/src/app
RUN python -m pip install schema-enforcer
ENTRYPOINT ["schema-enforcer"]
CMD ["validate", "--show-pass"]

See the official Docker documentation for details on hosting a private Docker registry. If using Docker Hub, the image tag would change to <namespace>/<container name>:tag. If I were to push this to my personal Docker Hub namespace, the image would be whitej6/schema-enforcer:latest.

docker build -t registry.whitej6.com/ntc/docker/schema-enforcer:latest .

docker push registry.whitej6.com/ntc/docker/schema-enforcer:latest

For the first use case, we start with example1 from the schema-enforcer repository. We then add a docker-compose.yml that mounts the full project repo into the previously built container, and a .gitlab-ci.yml that defines a pipeline with two stages, which is triggered on every commit.

➜ schema-example git:(master) ✗ tree -a -I '.git'
.
├── .gitlab-ci.yml
├── chi-beijing-rt1
│   ├── dns.yml  # This will be the offending file in the failing CI pipeline.
│   └── syslog.yml
├── docker-compose.yml
├── eng-london-rt1
│   ├── dns.yml
│   └── ntp.yml
└── schema
    └── schemas
        ├── dns.yml  # This will be the schema definition that triggers the failure.
        ├── ntp.yml
        └── syslog.yml

4 directories, 9 files

See the official Docker documentation for details on docker-compose and structuring the docker-compose.yml file. We define a single service called schema that uses the image we just published to the Docker registry, and we mount the current working directory of the pipeline execution into the container at /usr/src/app. We use the default entrypoint and command specified in the Dockerfile, which together run schema-enforcer validate --show-pass, but this can be overridden in the service definition. For instance, to enable the strict flag, we would add command: ['validate', '--show-pass', '--strict'] inside the schema service. Keep in mind that the command attribute of a service overrides the CMD directive in the Dockerfile.

---
version: "3.8"
services:
  schema:
    # Uncomment the next line to enable strict on schema-enforcer
    # command: ['validate', '--show-pass', '--strict']
    image: registry.whitej6.com/ntc/docker/schema-enforcer:latest
    volumes:
      - ./:/usr/src/app/
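To make the ENTRYPOINT, CMD, and command interaction concrete, here is a minimal Python sketch of how the final process arguments are assembled. The function name effective_argv is hypothetical and only models the rule described above (a service-level command replaces the image CMD while the ENTRYPOINT stays in place); it is not part of Docker or docker-compose.

```python
def effective_argv(entrypoint, image_cmd, service_command=None):
    """Model which arguments the container process receives.

    Hypothetical helper for illustration only: the image ENTRYPOINT is
    always kept, and a service-level `command` (if given) replaces the
    image CMD entirely rather than being appended to it.
    """
    args = image_cmd if service_command is None else service_command
    return list(entrypoint) + list(args)


# Values taken from the Dockerfile shown earlier in this post.
ENTRYPOINT = ["schema-enforcer"]
CMD = ["validate", "--show-pass"]

# Default behaviour: no `command` in the service definition.
default_argv = effective_argv(ENTRYPOINT, CMD)

# With the commented-out `command` line enabled in docker-compose.yml.
strict_argv = effective_argv(
    ENTRYPOINT, CMD, ["validate", "--show-pass", "--strict"]
)

print(" ".join(default_argv))
print(" ".join(strict_argv))
```

Because the override replaces CMD as a whole, the strict variant has to repeat validate and --show-pass rather than listing only --strict.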

See the official GitLab documentation for details on structuring the .gitlab-ci.yml file. We define two stages in the pipeline, and each stage has one job. The first stage pulls the most up-to-date container image for schema-enforcer and then runs the schema service from the docker-compose.yml file. By specifying --exit-code-from schema, we pass the exit code of the schema service through to the docker-compose command. The commands in the script determine whether the job succeeds: if the schema service returns a non-zero exit code, the job and pipeline are marked as failed. The second stage ensures we are good tenants of Docker and clean up after ourselves; docker-compose down removes any containers or networks associated with this project.

---
stages:
  - test
  - clean

test:
  stage: test
  script:
    - docker-compose pull
    - docker-compose up --exit-code-from schema schema

clean:
  stage: clean
  script:
    - docker-compose down || true
  when: always
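The exit-code behaviour the pipeline relies on can be sketched in a few lines of Python. This is an illustration under stated assumptions, not how GitLab Runner is implemented: run_job is a hypothetical helper, and the two child processes simply stand in for a passing and a failing docker-compose command.

```python
import subprocess
import sys


def run_job(script):
    """Run each command in order and stop at the first non-zero exit code.

    Hypothetical stand-in for a CI job: the job is reported as failed as
    soon as one command in its script exits with a non-zero status.
    """
    for command in script:
        result = subprocess.run(command)
        if result.returncode != 0:
            return "failed", result.returncode
    return "passed", 0


# Stand-ins for real commands: one exits 0, the other exits 1.
ok = [sys.executable, "-c", "raise SystemExit(0)"]
bad = [sys.executable, "-c", "raise SystemExit(1)"]

print(run_job([ok, ok]))
print(run_job([ok, bad, ok]))
```

In the real pipeline, --exit-code-from schema is what turns a validation failure inside the container into the non-zero status of the docker-compose command.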

In this example, chi-beijing-rt1/dns.yml has a boolean value where schema/schemas/dns.yml specifies an IPv4 address. As you can see, the container returned a non-zero exit code, failing the pipeline and blocking the merge into a protected branch.

# jsonschema: schemas/dns_servers
---
dns_servers:
  - address: true  # This is a boolean value and we are expecting a string value in an IPv4 format
  - address: "10.2.2.2"

---
$schema: "http://json-schema.org/draft-07/schema#"
$id: "schemas/dns_servers"
description: "DNS Server Configuration schema."
type: "object"
properties:
  dns_servers:
    type: "array"
    items:
      type: "object"
      properties:
        name:
          type: "string"
        address:  # This is the specific property that will be used in the failed example.
          type: "string"
          format: "ipv4"
        vrf:
          type: "string"
      required:
        - "address"
    uniqueItems: true
required:
  - "dns_servers"
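To see why the boolean is rejected, here is a rough Python sketch of the two constraints on address, written with only the standard library. It is an approximation for illustration, not how schema-enforcer performs validation: check_address and check_dns_servers are hypothetical names, and the ipaddress module stands in for the ipv4 format check.

```python
import ipaddress


def check_address(value):
    """Return a list of problems for one `address` value (empty if valid)."""
    # Mirrors `type: "string"`: a YAML `true` loads as a boolean, not a string.
    if not isinstance(value, str):
        return [f"{value!r} is not of type 'string'"]
    # Approximates `format: "ipv4"` with the standard library parser.
    try:
        ipaddress.IPv4Address(value)
    except ipaddress.AddressValueError:
        return [f"{value!r} is not a valid IPv4 address"]
    return []


def check_dns_servers(data):
    """Check every entry under `dns_servers` and collect the problems."""
    problems = []
    for index, server in enumerate(data.get("dns_servers", [])):
        if "address" not in server:
            problems.append(f"dns_servers[{index}]: 'address' is required")
            continue
        for problem in check_address(server["address"]):
            problems.append(f"dns_servers[{index}].address: {problem}")
    return problems


# The offending data from chi-beijing-rt1/dns.yml, as loaded from YAML.
failing = {"dns_servers": [{"address": True}, {"address": "10.2.2.2"}]}
# A variant where the first entry is a valid IPv4 string.
passing = {"dns_servers": [{"address": "10.2.2.3"}, {"address": "10.2.2.2"}]}

print(check_dns_servers(failing))
print(check_dns_servers(passing))
```

The first call reports a single problem on dns_servers[0].address, while the second returns an empty list.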

We see exactly which file and attribute fails the pipeline along with the runtime of the pipeline in seconds.

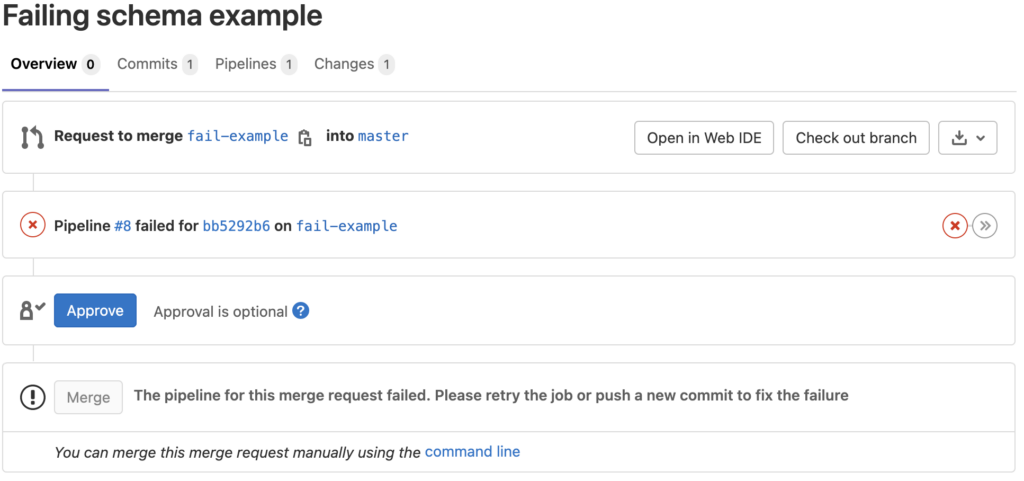

When a merge request is sourced from a branch with a failing pipeline, GitLab can block merging until the pipeline succeeds. Because the pipeline is triggered on each commit, we can resolve the issue in the next commit, which then triggers a new pipeline. Once the issue has been resolved, the Merge button is no longer greyed out and the branch can be merged into the target branch.

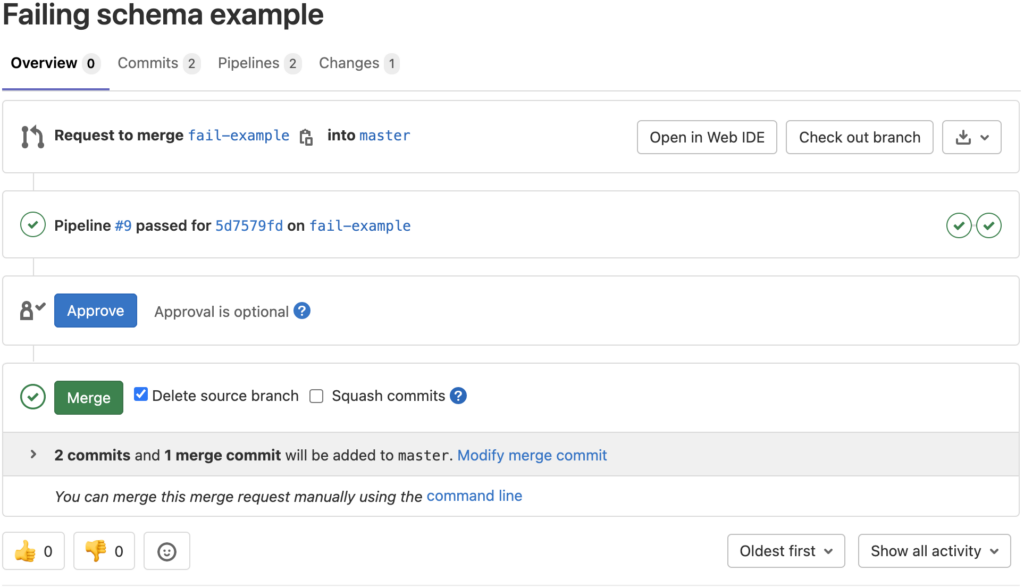

Now the previous error has been corrected and a new commit has been made on the same branch. GitLab has rerun the same pipeline against the new commit, and once it passes, the branch can be merged into the protected branch.

# jsonschema: schemas/dns_servers
---
dns_servers:
  - address: "10.2.2.3"  # This is the value that has been updated to align with the schema definition.
  - address: "10.2.2.2"

With the issue resolved and committed, we now see the previously offending file is passing the pipeline.

The merge request is now able to be merged into the target branch.

As a network engineer by trade who has come into automation, I have at times found it difficult to trust the machine that was building the machine, let alone trust others eager to collaborate. Building safeguards for schema into my early pipelines would have saved me a tremendous amount of time and headache.

Friends don't let friends merge bad data.
